A woman in Chicago noticed something startling while using Google:

Hind Makki, a blogger and founder of a photo project depicting women in mosques around the world, was writing a post about some comments Hillary Clinton made in last night’s Democratic debate.

The post was a commentary on “Clinton’s point about how American Muslims are ‘on the frontline of our defense’ and how problematic that framing is,” Makki wrote in an email. “American Muslims *already* report suspicious activity & suspected terrorism to the authorities (and I wanted to link a particular study on my blog).”

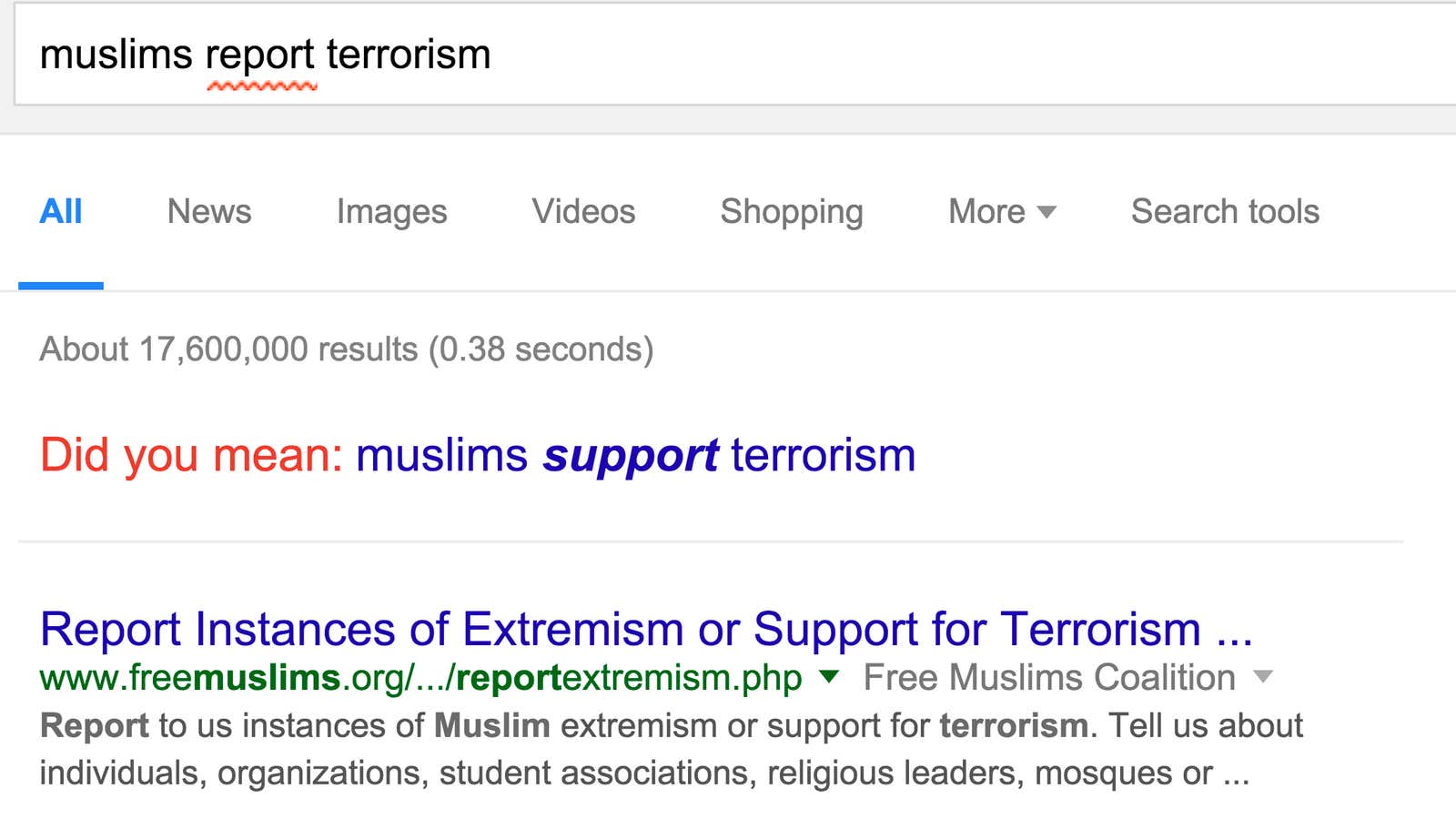

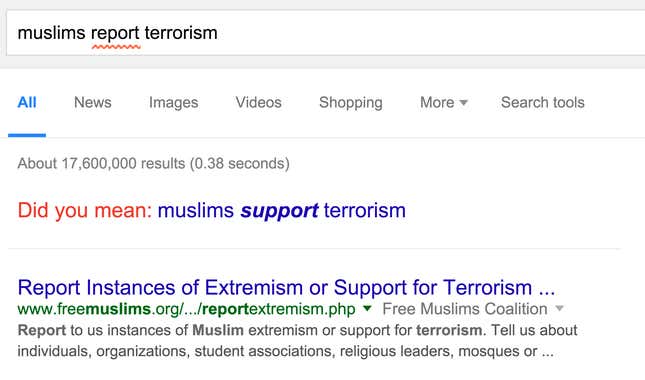

So Makki searched for that study on Google, entering “american muslims report terrorism.” Google suggested that perhaps she meant to type “american muslims support terrorism.”

This wasn’t a fluke. We tried the search as well and got the same result:

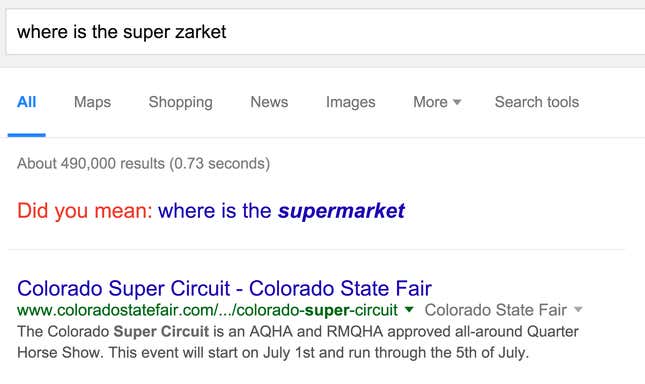

Google’s search suggestions often appear when you misspell a word. If you tell it you’re looking for a “super zarket,” it figures you mean “supermarket.”

But Google’s algorithm will also look beyond misspellings, at the sequence of words you’ve typed into the search box. It calculates how common it is for one word to follow another and tries to determine whether you need some help.

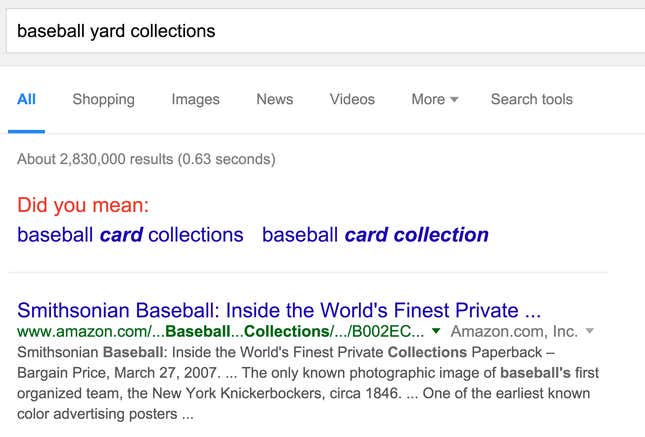

If you search for “baseball yard collections,” for example, Google notices that this is an odd sequence of words. You haven’t spelled anything wrong, but “yard” is not the word that typically comes after “baseball” and before “collections.” This is, of course, common sense to a human. But for a computer to work it out requires a complicated procedure.

In Google’s case, the algorithm presumably looks at thousands or millions of previous searches that contain “baseball,” “yard,” and “collections,” and counts the words that have come before and after each of them. It tallies which combinations are most common, and eventually works out that the word “card” frequently comes after “baseball” and before “collections.” And it’s a bonus that “yard” and “card” have three letters in common. With that information, Google makes a best-guess suggestion, and it’s often correct. (Google has not confirmed these technical details, but this is a standard practice for word-chaining.)
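The counting procedure described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not Google’s actual method, which has not been made public. The function names, the `min_ratio` threshold, and the tiny `sample_log` of past searches are all made up for this example.

```python
from collections import Counter


def build_context_counts(queries):
    """For each (word before, word after) pair, count the words seen in between."""
    counts = {}
    for query in queries:
        words = query.lower().split()
        for left, middle, right in zip(words, words[1:], words[2:]):
            counts.setdefault((left, right), Counter())[middle] += 1
    return counts


def shared_letters(a, b):
    """Count positions where two equal-length words have the same letter."""
    if len(a) != len(b):
        return 0
    return sum(x == y for x, y in zip(a, b))


def suggest(query, counts, min_ratio=3):
    """Return a 'did you mean' rewrite, or None if the query looks ordinary.

    min_ratio is a hypothetical threshold: the alternative must be at least
    this many times more common than the word the user typed.
    """
    words = query.lower().split()
    for i in range(1, len(words) - 1):
        typed = words[i]
        context = counts.get((words[i - 1], words[i + 1]))
        if not context:
            continue
        candidates = [w for w in context if w != typed]
        if not candidates:
            continue
        # Rank by how common the word is; shared letters only break ties.
        best = max(candidates, key=lambda w: (context[w], shared_letters(w, typed)))
        if context[best] >= min_ratio * max(context[typed], 1):
            return " ".join(words[:i] + [best] + words[i + 1:])
    return None


# Invented search log, for illustration only.
sample_log = (
    ["baseball card collections"] * 5
    + ["baseball yard collections"]
    + ["baseball cap collections"] * 2
)
counts = build_context_counts(sample_log)
print(suggest("baseball yard collections", counts))
print(suggest("baseball card collections", counts))
```

In this toy log, “card” appears between “baseball” and “collections” five times as often as “yard,” so the first query gets rewritten and the second is left alone. The point to notice is that the suggestion depends entirely on what is in the log: the code has no idea what any of the words mean.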

That procedure is what makes Hind Makki’s discovery all the more troubling. This isn’t a glitch in Google’s algorithm. In fact, the algorithm is working exactly as it’s supposed to. The glitch is human. It’s in the searches people have entered into Google and in the web pages Google indexes.

When the algorithm looks for instances of “Muslim,” “report,” and “terrorism,” it finds this to be an uncommon sequence of words. What it finds to be more common, after counting up the words that typically come before and after those, is not just a sequence of meaningless words. It’s a sentiment, and a common one: “Muslims support terrorism.” (Needless to say, that’s not true.)

Makki had mixed emotions when she saw Google’s suggestion.

“I thought it was hilarious, but also sad and immediately screen capped it,” she said. “I know it’s not Google’s ‘fault,’ but it goes to show just how many people online search for ‘Muslims support terrorism,’ though the reality on the ground is the opposite of that.”

Google has not yet provided an explanation or timeframe for fixing this issue, but we will update this piece when it does.

Update: A Google spokesperson said the suggestion has now been removed; it no longer appears on searches for “Muslims report terrorism.”