“It’s not a human move.”

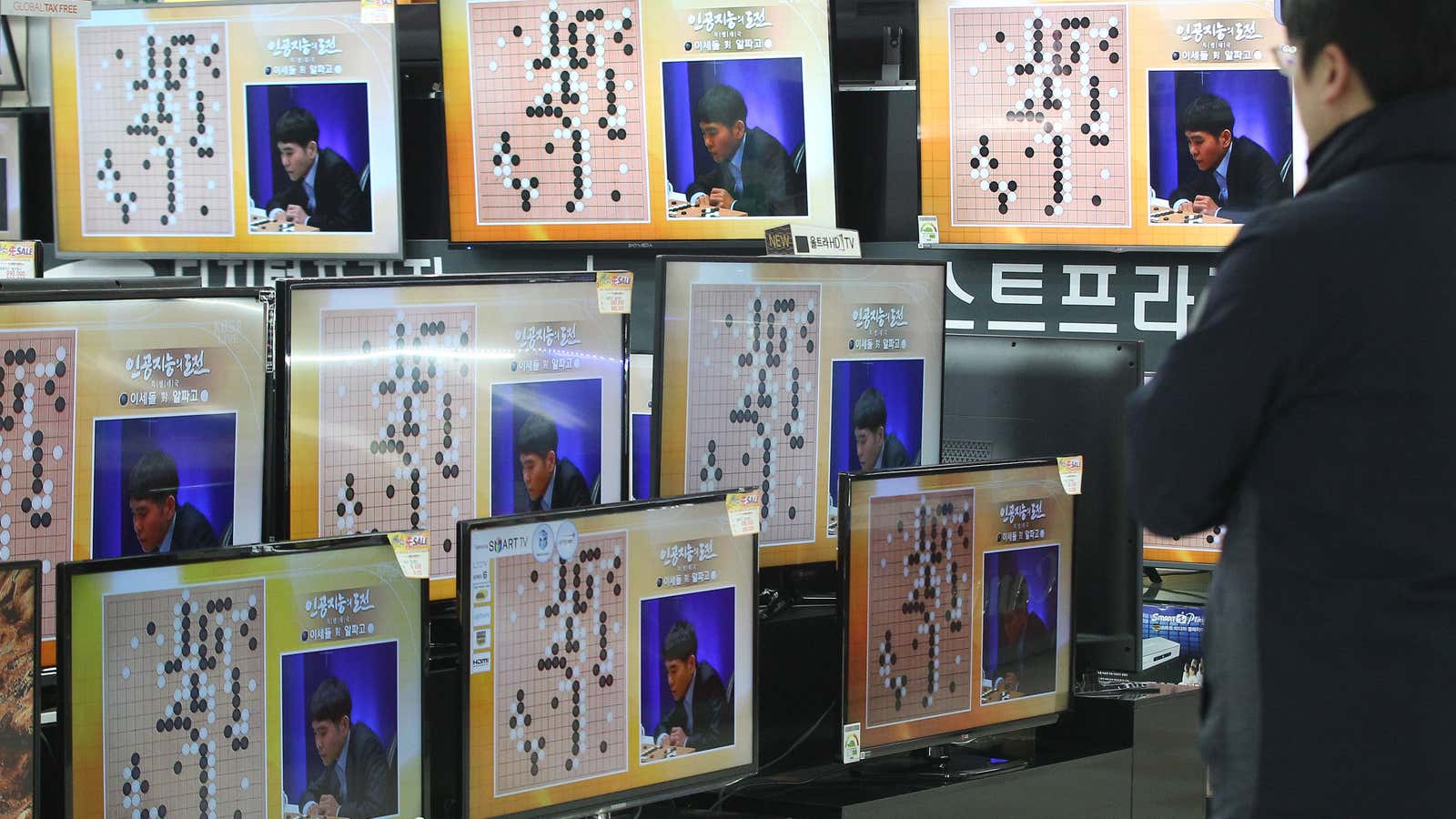

What shocked the grandmasters watching Lee Sedol, one of the world’s top Go players, lose to a computer on Thursday was not that the computer won, but how it won. A pivotal move by AlphaGo, a project of Google AI subsidiary DeepMind, was so unexpected, so at odds with 2,500 years of Go history and wisdom, that some thought it must be a glitch.

Lee’s third game against AlphaGo was today. Even if man had recovered to beat the machine, what we would have remembered was that moment of bewilderment. Go is much more complex than chess; to play it, as DeepMind’s CEO explained, AlphaGo needs the computer equivalent of intuition. And as Lee discovered, that intuition is not of the human kind.

A classic fear about AI is that the machines we build to serve us will destroy us instead, not because they become sentient and malicious, but because they devise unforeseen and catastrophic ways to reach the goals we set them. Worse, if they do become sentient and malicious, then—like Ava, the android in the movie Ex Machina—we may not even realize until it’s too late, because the way they think will be unrecognizable to us. What we call common sense and logic will be revealed as small-minded prejudices, baked in by aeons of biological and social evolution, which trap us in a tiny corner of the possible intellectual universe.

But there is a rosier view: that the machines, sentient or not, could help us break our intellectual bonds and see solutions—whether to Go, or to bigger problems—that we couldn’t imagine otherwise. “So beautiful,” as one grandmaster said of AlphaGo’s game. “So beautiful.”

This was published as part of the Quartz Weekend Brief.