By June or July of this year, according to Andy Price of Green Revolution Cooling, something strange will be announced by at least two of the companies that own the hundreds of thousands of computers that make the internet possible. In out-of-the-way locations, these companies—whose identities Price won’t reveal but, he says, are on a par with Facebook, Amazon and AT&T—are doing bizarre things to their infrastructure. Specifically, to their servers, the high-powered PCs that store, retrieve and process all the data on the internet and comprise the physical structure of the “cloud.”

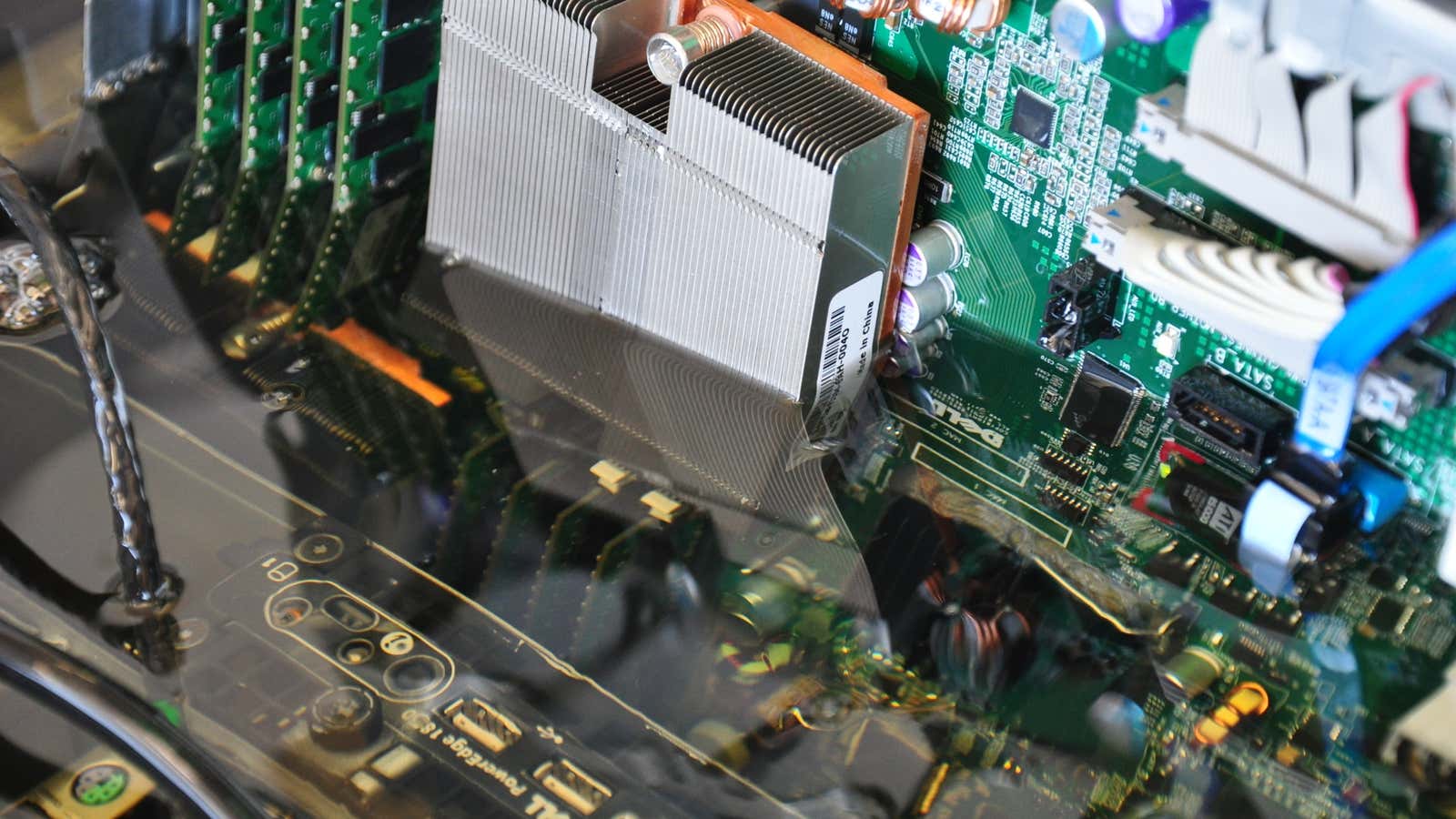

In an un-air-conditioned shed in a location Price will not disclose, alongside bags of salt used to run a water softening system, sit waist-high tanks full of mineral oil. In their depths are tiny lights blinking like bioluminescent creatures from the abyss, and something even more unexpected: row after row of PC motherboards, craggy with RAM and CPUs and hard drives and cables. Each one is more or less straight off the rack—the same hardware that, in any other data center, would be cooled by an air-moving infrastructure that begins with gigantic air-conditioning systems and ends in palm-sized fans attached directly to the motherboards themselves.

This shift to a new way to build the cloud, says Price, is already in progress. “We’re on the cusp of explosive growth,” he says. “We’re in evaluation stage with household-name cloud providers, but we’re bound by [non-disclosure agreements].”

There are only so many household-name cloud providers, such as VMware, Microsoft, Salesforce, Rackspace, Amazon and IBM. Price says Green Revolution is also testing with household names in social media (Facebook? Twitter?) and telcos (AT&T? Verizon Terremark?).

If what he says is true, the fact that companies of this scale are seriously evaluating a technology long restricted to the most demanding applications, where price hardly matters (military supercomputing clusters, say, or Wall Street data centers where thousandths of a second can be worth millions of dollars), says a great deal about how unsustainable current data center infrastructure has become. It also shows how badly companies need data centers in more places than the sealed, ventilated, custom-built buildings that currently house them.

A climate-controlled cave for delicate silicon flowers

The modern data center is like an incubator for some exotic plant that can tolerate only a narrow range of temperatures and is allergic to dust. Row upon row of “racks” of servers, which are just high-performance PCs stripped down to their bare essentials, stand side by side, with just enough space between them for air to flow. The room housing the servers is kept at positive pressure to keep out dust.

When microchips are operating, almost all the energy pumped into them is turned into heat by the process of computation itself. Heat has become such a problem that half the cost of a new data center now goes to the specialized construction needed to air-cool its servers. The other half is the servers themselves, which keep getting cheaper even as they get harder to cool. In some data centers, the fans and air conditioners needed to move air over the servers fast enough to keep them from shutting down are so loud that technicians must wear ear protection. Data centers already consume 3% of all electricity in the US, and a data center can use 50 times as much electricity as an office building of comparable size.

Cutting construction costs in half, and cooling costs by 80%

A number of companies sell liquid cooling systems, including Green Revolution, Iceotope, Liquid Cool, Asetek and Coolcentric. What they have in common is basic physics: liquids are far denser than air, so a given volume of liquid can soak up much more heat and carry it away. What varies is the liquid of choice (anything from water or mineral oil to more exotic chemicals) and the methods for moving the liquid and, where necessary, keeping it apart from the electronics.
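To put rough numbers on that physics: the heat a coolant stream carries away is Q = ρ · c_p · V̇ · ΔT (density times specific heat times volumetric flow rate times temperature rise). The sketch below compares air, mineral oil and water using approximate room-temperature property values. Those figures are ballpark textbook assumptions, not measurements from any of these vendors, and the 500 W server whose coolant warms by 10 °C is purely hypothetical.

```python
# Approximate room-temperature property values (ballpark textbook figures,
# assumed for illustration; real coolants vary by grade and temperature).
COOLANTS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K))
    "air": (1.2, 1005.0),
    "mineral oil": (850.0, 1900.0),
    "water": (998.0, 4182.0),
}


def volumetric_heat_capacity(density: float, specific_heat: float) -> float:
    """Heat absorbed per cubic metre per kelvin of warming, in J/(m^3*K)."""
    return density * specific_heat


def flow_needed(heat_watts: float, density: float, specific_heat: float,
                temp_rise_k: float) -> float:
    """Volumetric flow (m^3/s) that removes heat_watts while warming by temp_rise_k.

    This rearranges Q = rho * c_p * V_dot * delta_T to solve for V_dot.
    """
    return heat_watts / (density * specific_heat * temp_rise_k)


if __name__ == "__main__":
    air_vhc = volumetric_heat_capacity(*COOLANTS["air"])
    for name, (rho, cp) in COOLANTS.items():
        vhc = volumetric_heat_capacity(rho, cp)
        # Hypothetical 500 W server, coolant allowed to warm by 10 K.
        litres_per_s = flow_needed(500.0, rho, cp, 10.0) * 1000.0
        print(f"{name:12s} {vhc / air_vhc:8.0f}x air   {litres_per_s:8.3f} L/s for 500 W")
```

With these assumed values, a litre of mineral oil absorbs more than a thousand times as much heat per degree as a litre of air, so roughly 0.03 litres of oil per second does the job of about 41 litres of air per second.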

Asetek’s system, for example, uses “cold plates,” slabs of metal that attach directly to the hot parts of a server, with water circulating through them to draw heat away. That means connecting plumbing as well as power to a rack of servers, and the whole system must be highly engineered to prevent leaks.

Iceotope’s servers are in sealed aluminum bricks filled with Novec, a liquid coolant from 3M. Novec has low viscosity, so as it heats up it naturally forms convection currents: it circulates throughout the server’s sealed case, carrying heat to water pipes on the outside of the case. Those water pipes, in turn, run to a radiator or cooling tower. Novec boils at a relatively low temperature, so if the sealed case develops a crack, the liquid quickly evaporates. (Its low boiling point has also led 3M to experiment with cooling computers by letting Novec evaporate and re-condense, much as the refrigerant does in a fridge.)

Green Revolution’s solution is relatively low-tech by contrast. All it requires are stock motherboards, big tanks, a pump for the mineral oil and a heat exchanger. The oil doesn’t harm the computers: it’s a poor conductor of electricity, so it can wash right over the circuitry. That leaves the pump as the only real point of failure.

For all these firms to grow, they must overcome the traditional barrier to liquid cooling—cost. Asetek has probably shipped more liquid-cooled computers than any other company—it claims 1.3 million—thanks to early success selling liquid-cooled gaming PCs and desktop workstations. Now that the company has expanded into servers, it claims that its customers can pay off their investment in an Asetek liquid cooling system within 12 months, thanks to energy savings. (Of course, such calculations depend heavily on the cost of energy, which varies tremendously between, for example, the US and Europe.)
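A payback claim like Asetek’s 12 months is, at its simplest, the extra upfront cost divided by the monthly value of the energy saved. The sketch below shows why the answer swings with electricity prices. The $24,000 cost, the 20,000 kWh of monthly savings and both per-kWh prices are hypothetical round numbers, not Asetek’s figures or actual US or European tariffs, and the calculation ignores financing and maintenance.

```python
def payback_months(upfront_cost: float, kwh_saved_per_month: float,
                   price_per_kwh: float) -> float:
    """Simple payback: months until energy savings equal the upfront cost.

    No discounting, financing or maintenance costs are included.
    """
    monthly_savings = kwh_saved_per_month * price_per_kwh
    if monthly_savings <= 0:
        raise ValueError("energy savings must be positive to pay back the investment")
    return upfront_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical installation: $24,000 extra upfront, 20,000 kWh saved per month.
    for label, price in [("cheaper power", 0.10), ("pricier power", 0.20)]:
        months = payback_months(24_000, 20_000, price)
        print(f"{label} at ${price:.2f}/kWh: payback in {months:.1f} months")
```

In this made-up example, doubling the price of electricity halves the payback period, from 12 months to 6, which is why the same system can look like a bargain in one market and a stretch in another.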

But although Asetek may be the market leader, its main customers to date are research facilities, high-performance computing (i.e., supercomputer) labs and the US Department of Defense. A company representative said Asetek is not yet selling to companies like Amazon, but that its goal is to bring down the cost of its systems so that someday it could.

That’s what makes Green Revolution’s announcement interesting. The giants of cloud computing are companies with large data centers, plenty of space, and a ruthless devotion to cost management. If they’re adopting Green Revolution’s systems before anyone else’s—and based on conversations with competitors, academics and engineers in related fields, that appears to be the case—they must think it’s going to save them real money.

Price says Green Revolution can cut the initial cost of building a data center in half by removing the need for air conditioning and specialized construction such as raised floors. But initial cost aside, all liquid cooling systems can save customers money on energy. In recent trials, engineers at the University of Leeds estimated that a system built by Iceotope required 80% to 97% less energy than air-cooling a comparable number of servers. Liquid cooling also lets data centers be smaller, because the servers don’t need as much space between them for air to circulate.

A future of ubiquitous, liquid-cooled computing?

Some of Green Revolution’s installations in odd places are already public. At the Texas Advanced Computing Center, for example, they’re operating in a dirty, dusty loading dock. Inside the building, meanwhile, conventional high-performance computers occupy a typically pristine and air-conditioned space. “The point is all we really need is a flat floor, a roof over our head and electrical utilities,” says Price.

This portends some exotic futures for the infrastructure of the cloud. For example, companies have been working for a number of years on what is essentially a “data center in a box”: a cluster of computers housed in a shipping container, which can be moved easily by truck, rail or ship and hooked up anywhere there’s power and a fast internet connection. Price says Green Revolution is the first company that can use standard, virtually unmodified shipping containers. “You can get them anywhere in the world,” says Price. “We’re just cutting them up for access and plumbing and to pick up the floor, to bolt down our racks.”

Of course, this is still very much a nascent industry. Despite its giant potential customers, Green Revolution still has only 13 full-time employees and outsources the assembly of all major components. If the company decides to license its designs instead of selling hardware, that might not matter. For Google, Facebook and Amazon in particular, the custom design of their data centers and even their servers is an important part of their competitive advantage. Cloud giants might prefer to move to this technology on their own terms and simply pay its inventors for the privilege.

But in the long run, the fact that liquid cooling could move the data center out of specialized, expensive buildings will have bigger implications than merely saving money for the giants of the cloud. Not only is it greener; it lets any company anywhere have powerful computing on demand, without having to rely on the Amazons of this world to supply it.