Videos are a great medium for audio-visual experiences, but interacting with the characters and objects on screen has long been a fantasy. Until now.

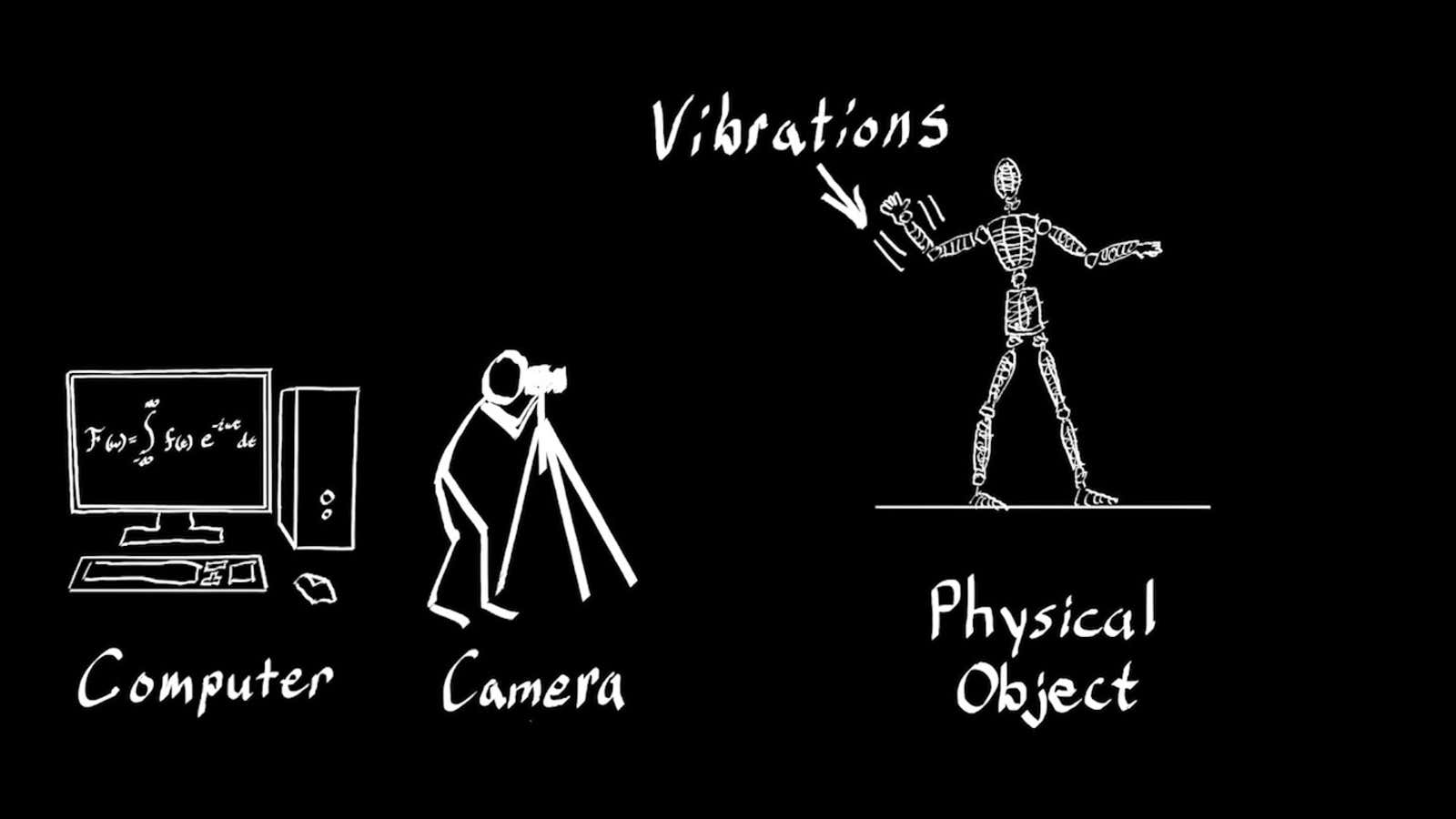

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have found a way to let people reach in and push, pull, poke, and prod objects in videos. Going a step beyond augmented reality, the Interactive Dynamic Video (IDV) technique needs only footage captured with a traditional camera.

While a stationary object is being filmed, its surroundings are used to make it vibrate. For instance, to set an object on a table in motion, you just need to bang on the surface a few times. For a bush, which would be costly and difficult to recreate with 3D modeling, the researchers filmed a minute-long video of it swaying in the breeze. Algorithms then analyze the object's tiny, almost invisible vibrations and use them to create video simulations. Users can interact with the result, using a cursor to push and pull the object in different directions and watch it react.

After recording, the footage is analyzed to find the different ways an object can move, known as its vibration modes. Identifying the shapes and frequencies of these motions makes it possible to predict how the object will behave in the future. “If you want to model how an object behaves and responds to different forces, we show that you can observe the object respond to existing forces and assume that it will respond in a consistent way to new ones,” Abe Davis, a CSAIL PhD student and the lead author of the research, told MIT News.
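The idea of predicting a response to new forces from observed vibration modes can be illustrated with a toy modal-superposition model. This is a minimal sketch, not the researchers' implementation: it assumes the mode shapes, frequencies, and damping ratios have already been extracted from the footage, treats each mode as an independent, lightly damped oscillator with unit modal mass, and uses a hypothetical function name, `impulse_response`. A user's "poke" becomes an impulse vector that is projected onto each mode, and the displacement of every tracked point is the sum of the modes' decaying oscillations.

```python
import math


def impulse_response(mode_shapes, frequencies_hz, damping_ratios, force, t):
    """Displacement of each tracked point at time t (seconds) after an impulse.

    Hypothetical inputs for illustration only:
      mode_shapes    -- list of modes, each a list of per-point displacements
                        (assumed mass-normalized)
      frequencies_hz -- natural frequency of each mode
      damping_ratios -- damping ratio of each mode, assumed 0 < zeta < 1
      force          -- impulse applied at each point (same length as a mode)
    """
    n_points = len(force)
    displacement = [0.0] * n_points
    for shape, f_hz, zeta in zip(mode_shapes, frequencies_hz, damping_ratios):
        omega = 2 * math.pi * f_hz                 # natural angular frequency
        omega_d = omega * math.sqrt(1 - zeta ** 2)  # damped angular frequency
        # Project the impulse onto this mode to get its modal impulse.
        modal_impulse = sum(s * f for s, f in zip(shape, force))
        # Unit-impulse response of an underdamped oscillator, scaled.
        q = (modal_impulse / omega_d) * math.exp(-zeta * omega * t) * math.sin(omega_d * t)
        # Superpose: each mode adds its shape weighted by its current amplitude.
        for k in range(n_points):
            displacement[k] += shape[k] * q
    return displacement


if __name__ == "__main__":
    # Two tracked points, two made-up modes: moving together and moving apart.
    shapes = [[0.7071, 0.7071], [0.7071, -0.7071]]
    for step in range(5):
        t = step * 0.05
        u = impulse_response(shapes, [2.0, 5.0], [0.05, 0.05], [1.0, 0.0], t)
        print(f"t={t:.2f}s  u={u}")
```

Poking only the first point excites both modes, so the two points move differently; pushing both points equally excites only the "move together" mode. Because the model is linear, forces the camera never saw can be simulated once the modes are known, which is the assumption Davis describes.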

This development opens up new possibilities in engineering and art. For instance, architects can check the structural health of an old building or a bridge. Low-budget filmmakers can also trade in green screens and complex computer graphics for IDV.

“We’re not so good at getting real things and virtual things interacting with each other,” Davis says in the video. Think of the floating Pokémon in the AR game Pokémon Go. Virtual characters dropped into real-world environments would be far more convincing if they could interact realistically with their surroundings. Davis demonstrated how the technique could be used to let Pikachu bounce around on a bush or a jungle gym.

Davis, who found that the technique also works on existing YouTube videos, published a paper about IDV earlier this year. The research will also be included in his dissertation, which is to be published at the end of this month.