In artificial intelligence research, free code garners goodwill from the community, talent, and bragging rights. So it’s no surprise that many of the companies investing in AI, like Facebook and Google, are racing to make their code open source early and often.

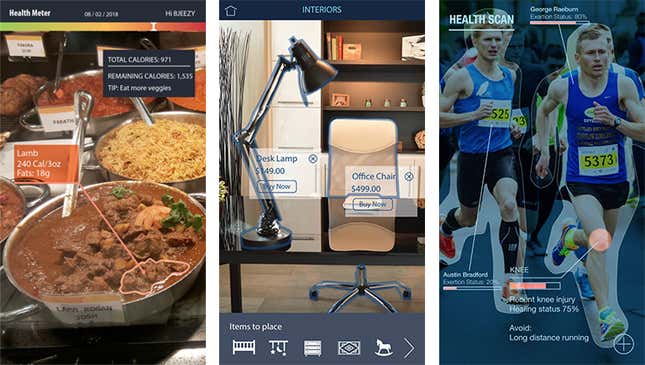

On Aug. 25, Facebook announced that it would make public three tools integral to its image recognition software—the same AI that automatically tags photos and helps read the content of images to visually impaired users of its site. The social media company says that this kind technology could allow Facebook users to search for photos based on what they depict, without relying on the tags others had assigned to the images. Facebook also claims it could be used to identify the nutritional value of food just by taking a picture of it.

The tools are powerful; they provide the entire framework to pick apart the elements of a photo and label the separate parts. The official reason for making them open source is to expedite the field of machine learning by allowing others to potentially improve the code, according to a Facebook blog post.

Organizations releasing their code is an undoubtedly good thing—research thrives in the open. But it’s also a way for these companies to compete with each other.

The traditional way to measure the impact of new code is to track and count citations of the academic papers published alongside it. The more papers that have cited a particular piece of research, generally the more impactful it is in the field. ”If your paper is cited, that means someone read it and believes it is relevant to their own work,” Olivier Breuleux, an early contributor to deep learning library Theano, wrote in an email. “That’s a very good measure of success, because that’s precisely what research papers aim to do.”

Facebook points to its 2014 “Memory Networks” as a paper with high citations in the recent past, which on Google Scholar has 134 citations. Google and Magic Leap’s “Going Deeper with Convolutions,” also published in 2014, has more than 1,400. (It’s worth nothing that Google’s work in that paper is predicated on original research by Yann Lecun, director of Facebook’s AI research department.)

But by making the code open source, you introduce a new a way to gauge popularity and impact among peers: you can start to see how many people are interested and experimenting with a research organization’s code.

“You look at GitHub, you look at the number of stars that the projects have, you look at the number of forks, and that tells us whether the community is taking a liking to it,” said Larry Zitnick, a research manager at Facebook AI Research. “We know we’re having impact that way.” To have a popular repository of code means there are others contributors that find use in the work, and further the work by locating bugs and expanding functionality.

A citation might prove influence, but seeing someone else using your code shows objective worth. “Researchers care a lot about the codebase itself and the developer community around it, because they are the users who will need to dig into the source code and modify it for experimental purposes,” says François Chollet, a Google engineer and the author of open source deep learning library Keras.

Judging by this metric, used internally by both Facebook and Google, Google is king. TensorFlow, Google’s library for machine learning tools, is number one in its category with more than 30,000 stars (stars being akin to the Facebook Like), and nearly 13,000 forks (a metric to see how many other developers copied the source code and tinker with it). Torch, Facebook’s version, has 5,200 stars and 1,500 forks. TensorFlow was released in November 2015, and the latest version of Torch was released in September 2015. Another metric provided by GitHub is Issues: questions about the code or errors found, which could indicate an engaged community. TensorFlow has 464 Issues, while Torch has 68.

Both Google and Facebook are extremely willing to share their AI research. On the other hand, Apple publishes the code necessary for building software in their own ecosystem, like its Swift programming language, but their machine learning resources are rarely made public.

Facebook’s most popular official AI tool on GitHub is fastText, a tool to understand the meaning behind text. Since its Aug. 18 launch the project has more than 4,000 stars and almost 500 forks. Though it’s still not as popular as Google’s variant, named Parsey McParseface, (7,000 stars and 1,300 forks), it’s still notable, especially considering Parsey McParseface was released in May.

Given Facebook’s fastText success, and the power of the tools released today, the social network might be catching up. Google’s dominance hasn’t gone unnoticed, either. Competing tech companies have avoided using the free, Google-maintained software for fear the search company would own the future of artificial intelligence, according to Bloomberg.