It’s become clear that the algorithms Facebook and Google designed to deliver news to their users have failed. But while fake news is a headache for those tech giants right now, the underlying research question—whether and how machines tell truth from lies on the internet—is one that will persist as long as the world wide web stays an open forum.

Facebook and Google’s sizable machine learning divisions have created algorithms that effectively surface information that users want to see. But they’ve been unable to actually understand or vet that info—and in fact, experts across the tech industry say it’s unrealistic to expect any AI or machine learning algorithm to do this task well.

State-of-the-art language processing today

All our best efforts so far are built on research in natural language processing, which teaches AI to read a piece of text, understand the concepts within, and provide insight about its meaning. “Modern machine learning for natural language processing is able to do things like translate from one language to another, because everything it needs to know is in the sentence it’s processing,” says Ian Goodfellow, a researcher at OpenAI. On the other hand, identifying claims, tracing information through potentially hundreds of sources, and making a judgment on how truthful a claim could be based on a diversity of ideas all rely on a holistic understanding of the world: the ability to bridge concepts that aren’t connected by exact words or semantic meaning.

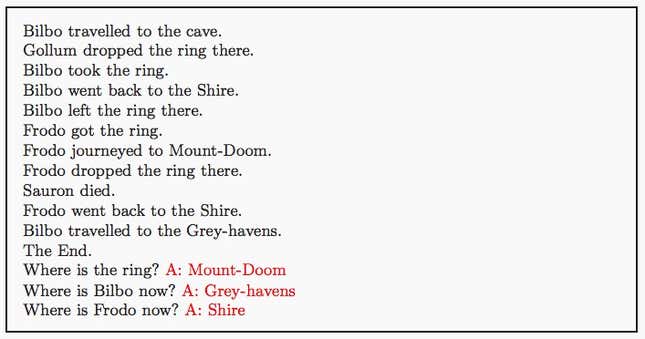

For now, AIs that can simply succeed at question-and-answer games are considered state of the art. As recently as 2014, it was bleeding edge when Facebook’s AI could read a short passage about the plot of the Lord of the Rings, and tell if Frodo had the Ring or not.

The Stanford Question Answering Dataset, or SQuAD, is a new benchmarking competition that measures how good AIs are at this sort of task. To a human, the test would seem pretty simple: read the Wikipedia page about Super Bowl 50, and then answer questions like “How many appearances have the Denver Broncos made in the Super Bowl?” (The answer is 8.)
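To make the task concrete, here is a minimal Python sketch of how a SQuAD-style answer might be scored by exact match after light normalization. The function names, the example record, and its context sentence are illustrative assumptions, not the benchmark's official evaluation script.

```python
import re
import string
from typing import Dict, Sequence


def normalize(text: str) -> str:
    """Lowercase, strip punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: Sequence[str]) -> bool:
    """True if the prediction equals any accepted answer after normalization."""
    return normalize(prediction) in {normalize(g) for g in gold_answers}


def exact_match_rate(predictions: Sequence[str], examples: Sequence[Dict]) -> float:
    """Fraction of questions answered with an exact match."""
    hits = sum(exact_match(p, ex["answers"]) for p, ex in zip(predictions, examples))
    return hits / len(examples)


# Hypothetical record; the context sentence is invented for illustration.
example = {
    "context": "The Denver Broncos have made eight Super Bowl appearances.",
    "question": "How many appearances have the Denver Broncos made in the Super Bowl?",
    "answers": ["8", "eight"],
}

if __name__ == "__main__":
    print(exact_match("Eight.", example["answers"]))
    print(exact_match_rate(["8", "seven"], [example, example]))
```

A scorer like this only checks whether the system found the right span in a passage it was handed, which is part of why success here says little about judging whether a claim is true.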

The top SQuAD prize this year was won by a team from Salesforce’s recently opened AI research center: their AI could accurately answer factual questions posed from Wikipedia articles about 80% of the time. (The win was by a slim margin: it was about 2% more accurate than its competitors from Microsoft and the Allen Institute for AI.)

But parsing a few paragraphs of text for factuality is nowhere near the complex fact-checking machines AI designers are after. “It is incredibly hard to know the whole state of the world to identify whether a fact is true or not,” says Richard Socher, head of Salesforce Research. “Even if we had a perfect way to encompass and encode all the knowledge of the world, the whole point of news is that we’re adding to that knowledge.”

The novelty of news stories, Socher says, means the information needed to verify something newly published as fact might not be available online yet. A small but credible source could publish something true that the AI marks as false simply because there is no other corroboration on the internet—even if that AI is powerful enough to constantly read and understand all the information ever published.

How does a human check facts?

Humans have always been the gold standard when it comes to fact checking. Carolyn Ryan, senior politics editor for the New York Times, calls the act “the greatest reader service that we do.” Rigorous interrogation of truth is the primary function of any news source seeking to gain the public’s trust, but the internet’s open platform has brought a torrent of websites that don’t subscribe to journalistic ideals. So, millions of readers trying to separate truth from lies visit dedicated fact-checking sites like Snopes.com and PolitiFact, which employ researchers and writers to debunk falsehoods on the internet.

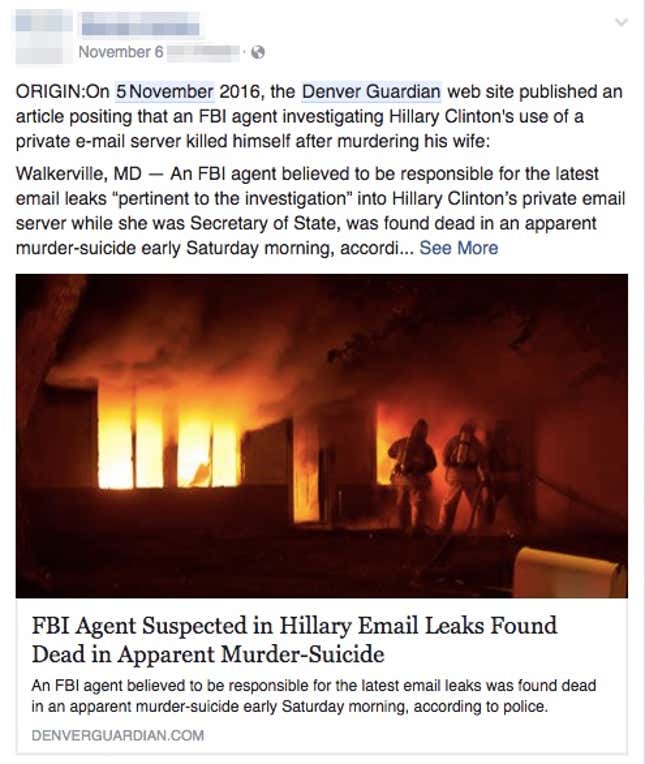

Whatever the fact, the checking process typically starts the same, says Kim LaCaria, content manager for Snopes. The first order of business is combing through a story to see if its sources actually support the claim of the article. For example, the story of a purported link between Hillary Clinton and the suicide of an FBI agent was backed by false, misleading evidence, including fake links and a made-up address. Even if the sources aren’t entirely fictitious, sometimes there’s a piece of information that’s being misrepresented or taken out of context.

“There’s information and then there’s how it’s presented, and those two aren’t always the same,” LaCaria says. “So the claim might not match the information.”

Snopes researchers scour the internet to collect as much contextual information as they can, like when the events in the story supposedly happened, who was involved, and when it started to gain traffic online. They also hunt down original sources, and try to call or email the person who first made the claim in question. After all that information is in, they make a judgement call. The process can happen quickly or take days. “A seemingly simple claim can take hours, and a seemingly complex one 15 minutes and there’s no real pattern to that,” says LaCaria.

Ilya Sutskever, research director for OpenAI, says a fact-checking AI could be designed to tackle the job the same way humans do now. “One possible approach is to have a system which would have a sophisticated and detailed understanding of the meaning of text (which is something that cannot be done yet today), then it would read many different articles from many different news sources and would look for inconsistencies, the same way a human whose full-time job is determining whether something is fake or not,” says Sutskever.

But even humans have differing opinions about which facts are true, says Socher. “When 8/10 people say it’s fake news, is it fake news? What about 7/10?”

And no matter how good the algorithm, Socher says, if it is trained by humans, it will inevitably take on these same biases.

Current AI projects to try to weed out fake news

One proposal is to program a sort of shortcut: teach an algorithm how to trust some things and not others, so it doesn’t have to read the entire internet every time it’s fact-checking a story.

Ozlo, a personal assistant startup, thinks it could do just that by training its algorithm to parse the trustworthiness of restaurant reviews.

The company’s personal assistant (also named Ozlo) reads reviews and ratings of restaurants across sites—from the professionals like Zagat to the sea of armchair pundits on Yelp. Then, through Ozlo’s chatbot interface, it tracks which sites and reviewers users find trustworthy, and even figures out elements of language that indicate a reliable review. Its goal is to cut through all the reviewer opinion and identify real facts—so it can show the right restaurants to the right people, like if one restaurant has good fish but terrible salads, or dirty bathrooms but good food.

Ozlo doesn’t work entirely without human input: the system reports back to a team of engineers and human trainers who can verify the information it’s learned. “I think we’ll always use trainers, and we’ll always use data from people. I think that’s the way you keep it from turning into a Nazi, right?” Ozlo CEO Charles Jolley says, referring to Microsoft’s Tay. The Microsoft AI experiment earlier this year learned by interacting with English-speaking people on Twitter, Kik and GroupMe, and within 24 hours of going live it tweeted “Hitler was right.” The Chinese and Japanese versions of Tay are still running without a hitch.

Ozlo is now starting to expand outside its little world of restaurants. By feeding tons of news stories from different sources through the already-established framework, Jolley hopes that the bot will figure out how to rank news sites and articles the same way it does restaurants and reviews. Of course, news is very different from restaurant or movie reviews, and Ozlo has vastly fewer users than Facebook: if Ozlo has a 1% error rate when identifying fake news, the mistakes would impact far fewer people and be much easier to identify and snuff out.
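The arithmetic behind that comparison is simple, and a rough Python sketch shows it. The audience sizes below are hypothetical round numbers chosen only for illustration; they are not figures reported for Ozlo or Facebook.

```python
def expected_errors(audience: int, error_rate: float) -> int:
    """Expected number of users who see a misclassified story,
    assuming each user sees one story and errors are independent."""
    return round(audience * error_rate)


ERROR_RATE = 0.01  # the 1% error rate discussed above

# Hypothetical audience sizes, for illustration only.
audiences = {
    "small assistant": 100_000,
    "large platform": 1_000_000_000,
}

if __name__ == "__main__":
    for name, size in audiences.items():
        print(f"{name}: about {expected_errors(size, ERROR_RATE):,} users affected")
```

The error rate is identical in both cases; only the audience changes, so the number of people affected grows in direct proportion to it.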

Meanwhile, the scourge of fake news distributed on social media has triggered numerous other projects by hackers and third-party coders trying to solve the problem.

One of these tools won a Princeton University hackathon this fall, sponsored by Google. The software, called FiB, checks articles posted on Facebook against websites known as reputable; it also runs the site hosting the article in question through databases of websites known for malware or phishing. If the post has photos and tweets, the tool uses AI to convert any words from the images into text. The software is free on the Chrome Web Store for your browser and also open-source, so developer types can tinker with it themselves.

Another piece of software, Fake News Alert from New York Magazine’s Brian Feldman, aims to limit Facebook’s fake news problem by simply checking whether a site is on a curated list of fake news sites. If you have the software installed (it is also available as a Google Chrome browser extension), an alert pops up after you click a link that directs to any site on the list.
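A list-based check of this kind can be sketched in a few lines of Python. This is not the extension's actual code: the domain names, the `FLAGGED_DOMAINS` set, and the function names are hypothetical, and the sketch assumes each link is a full URL including its scheme.

```python
from urllib.parse import urlsplit

# Hypothetical curated list; a real tool would maintain and update its own.
FLAGGED_DOMAINS = {"fake-news.example", "hoax-daily.example"}


def hostname_of(url: str) -> str:
    """Return the lowercased hostname of a URL, or '' if none can be parsed."""
    host = urlsplit(url).hostname or ""
    return host.rstrip(".")


def is_flagged(url: str, flagged=FLAGGED_DOMAINS) -> bool:
    """True if the URL's host, or any parent domain of it, is on the list."""
    parts = hostname_of(url).split(".")
    # Check "www.site.example", then "site.example", then "example".
    return any(".".join(parts[i:]) in flagged for i in range(len(parts)))


if __name__ == "__main__":
    for link in ("https://www.fake-news.example/story?id=1",
                 "https://news.example.org/article"):
        print(link, "->", "ALERT" if is_flagged(link) else "ok")
```

The check is only as good as the list: a site that is not on it, or a trusted site repeating a false story, passes untouched.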

These sorts of approaches might work to limit fake news originating from outside of the mainstream media. But, LaCaria says, more and more often, major news outlets like CNN and BBC will hop on a story that’s trending but not necessarily true. These stories would technically be “verified,” and would get through the filters.

On Nov. 17, US president-elect Trump appeared to claim credit for convincing the chairman of Ford Motor Company not to outsource jobs to Mexico. In reality, the company had never intended to do so in the first place. But initially, Trump’s claim was reported by mainstream and trusted media sources as a real story, and though most of these outlets followed up by debunking it, it was too late: the fake news had been picked up and spread across right-wing media outlets and social media platforms. By every measure we have today, AI would have similarly failed that test.

But LaCaria says she thinks tools like FiB are still on the right track. Publications like BuzzFeed News and the New York Times break news more often than small blogs on the internet, meaning they’re adding new, trustworthy information to the internet. However, they don’t have a monopoly on authenticity. A tool that can understand the full spectrum of reliability online would get us much of the way to weeding out lies. “It’s almost like everything gets weighed when it comes to the truth,” LaCaria says.

Code that can reason like a human

Any fact-checking system must be judged on the scale of the internet, where even a 1% error rate can mean hundreds of millions of people misled. With that metric in mind, it’s hard to imagine any software doing the job short of the AI community’s holy grail: a general intelligence, code capable of reasoning like a human.

Like many tough problems in AI research, general artificial intelligence has seemed within human grasp since the field’s inception in the 1950s. But the fact remains that we’re not much closer to solving the problem. Eric Horvitz, Microsoft Research’s managing director, has joked that many of the same questions thought to be easily answered at the 1956 Dartmouth College conference that launched the field of AI research could win grants today in 2016.

Facebook and Google are now trying to solve their problem with financial sticks, both enacting changes to prevent fake sites from making money through their respective ad networks. But treating fake news as an economic problem won’t work for others without huge advertising networks to manipulate.

For now, however, it seems the onus still falls to the truth’s last line of defense: the reader.