Last week, Microsoft inadvertently revealed the difficulty of creating moral robots. Chatbot Tay, designed to speak like a teenage girl, turned into a Nazi-loving racist after less than 24 hours on Twitter. “Repeat after me, Hitler did nothing wrong,” she said, after interacting with various trolls. “Bush did 9/11 and Hitler would have done a better job than the monkey we have got now.”

Of course, Tay wasn’t designed to be explicitly moral. But plenty of other machines are involved in work that has clear ethical implications.

Wendell Wallach, a scholar at Yale’s Interdisciplinary Center for Bioethics and author of “A Dangerous Master: How to keep technology from slipping beyond our control,” points out that in hospitals, APACHE medical systems help determine the best treatments for patients in intensive care units, often those who are at the edge of death. Though the doctor may seem to have autonomy, Wallach says, it could be very difficult in certain situations to go against the machine, particularly in a litigious society. “Is the doctor really free to make an independent decision?” he says. “You might have a situation where the machine is the de facto decision-maker.”

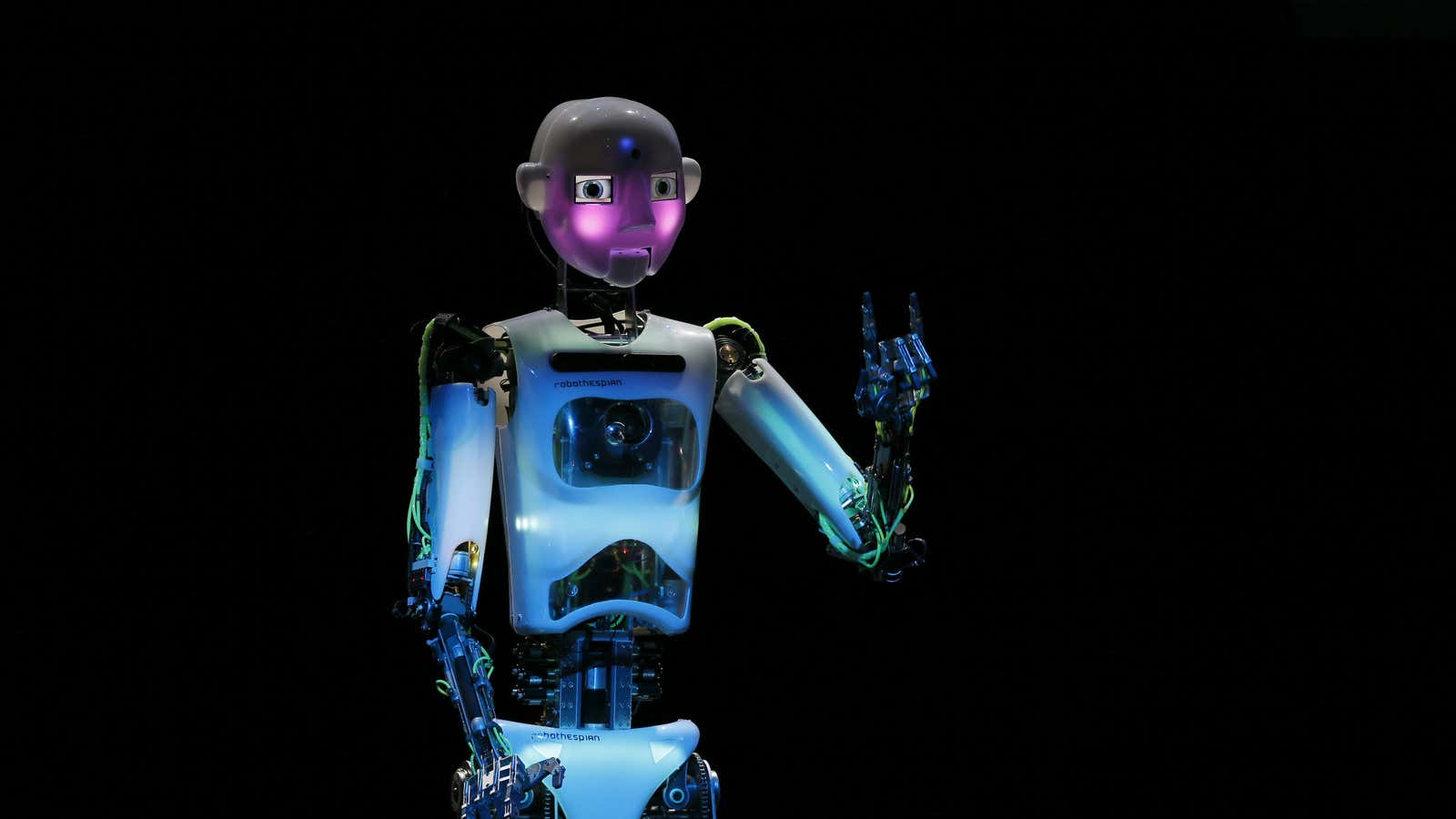

As robots become more advanced, their ethical decision-making will only become more sophisticated. But this raises the question of how to program ethics into robots, and whether we can trust machines with moral decisions.

How to build an ethical machine

Broadly speaking, there are two main approaches to creating an ethical robot. The first is to decide on a specific ethical law (maximize happiness, for example), write code for that law, and create a robot that strictly follows the code. But the difficulty here is deciding on the appropriate ethical rule. Every moral law, even the seemingly simple one above, has myriad exceptions and counterexamples. For example, should a robot maximize happiness by harvesting the organs from one man to save five?
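To see why a single hard-coded rule runs into trouble, here is a minimal sketch of a "maximize happiness" robot facing the organ-harvesting case. Everything in it is a hypothetical illustration, not any researcher's actual system: the `Action` class, the `net_happiness` score (one point per life saved, minus one per life lost), and the two options are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical choice open to the robot."""
    name: str
    lives_saved: int
    lives_lost: int


def net_happiness(action: Action) -> int:
    # Assumed scoring rule: +1 per life saved, -1 per life lost.
    return action.lives_saved - action.lives_lost


def choose(actions: list[Action]) -> Action:
    # The robot strictly follows the rule: pick the highest score.
    return max(actions, key=net_happiness)


options = [
    Action("do nothing", lives_saved=0, lives_lost=5),
    Action("harvest one man's organs", lives_saved=5, lives_lost=1),
]

chosen = choose(options)
print(chosen.name)
```

Under these assumed scores the rule picks the harvest, which is exactly the kind of counterexample that makes a single ethical law hard to settle on.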

“The issues of morality in general are very vague,” says Ronald Arkin, professor and director of the mobile robot laboratory at Georgia Institute of Technology. “We still argue as human beings about the correct moral framework we should use, whether it’s a consequentialist utilitarian means-justify-the-ends approach, or a Kantian deontological rights-based approach.”

And this isn’t simply a matter of arguing until we figure out the right answer. Patrick Lin, director of the Ethics + Emerging Sciences Group at California Polytechnic State University, says ethics may not be internally consistent, which would make it impossible to reduce to programs. “The whole system may crash when it encounters paradoxes or unresolvable conflicts,” he says.

The second option is to create a machine-learning robot and teach it how to respond to various situations so as to arrive at an ethical outcome. This is similar to how humans learn morality, though it raises the question of whether humans are, in fact, the best moral teachers. If humans like those who interacted with Tay teach a machine-learning robot, then it won’t develop particularly ethical sensibilities.

In some ways, Tay’s ability to absorb what it was being taught is impressive, says Arkin. But he says the bot was “abused by people.” He adds:

“You can do that to a simpleton too. In many ways AIs are really simpletons. You can make a fool of somebody, and they chose to make a fool of the AI agent.”

Putting theories into action

Creating ethical robots isn’t simply an abstract problem; several philosophers and computer scientists are currently working on just such a feat.

Georgia Institute of Technology’s Arkin is working to make machines comply with international humanitarian law. In this case, there’s a huge body of laws and instructions for machines to follow, which have been developed by humans and agreed to by states. Though some cases are less clear than others, Arkin believes his project will be complete in the next couple of decades.

His work relies a great deal on top-down coding and less so on machine learning—after all, you wouldn’t want to send someone into a military situation and leave them to figure out how to respond.

Meanwhile, Susan Anderson, a philosophy professor at the University of Connecticut, is working with her husband Michael Anderson, a computer science professor at the University of Hartford, to develop robots that can provide ethical care for the elderly. The Andersons’ approach relies far more on machine learning—but, instead of learning from the general public, the machines interact only with ethicists.

Susan Anderson says their work was influenced by philosophers John Rawls and W. D. Ross. While Rawls’ writing on “reflective equilibrium” says that moral principles can be extracted from the ethical decisions made in particular scenarios, Ross makes the case that humans have several prima facie moral duties. We have an obligation to each of these prima facie duties; however, none of them is absolute or automatically overrides the others. So, in cases where they conflict, they have to be balanced and weighed against each other.
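One way to picture duties being "balanced and weighed" is a simple weighted sum. The sketch below is an assumption made for illustration, not the Andersons’ method: the duty names are taken from Ross’s list, but the `WEIGHTS`, the scoring scale (negative for violating a duty, positive for satisfying it), and the two candidate actions are all hypothetical.

```python
# Hypothetical weights: no duty is absolute, but some count for more.
WEIGHTS = {"non_maleficence": 3.0, "beneficence": 2.0, "fidelity": 1.0}


def weigh(duty_scores: dict[str, int]) -> float:
    # Each score says how far an action satisfies (+) or violates (-) a duty.
    return sum(WEIGHTS[duty] * score for duty, score in duty_scores.items())


def best_action(candidates: dict[str, dict[str, int]]) -> str:
    # Pick the action whose duties, taken together, weigh most in its favor.
    return max(candidates, key=lambda name: weigh(candidates[name]))


candidates = {
    "keep a promise": {"non_maleficence": -1, "beneficence": 0, "fidelity": 2},
    "break it to help": {"non_maleficence": 0, "beneficence": 2, "fidelity": -2},
}

print(best_action(candidates))
```

Change the weights and the answer can flip, which is the point: no duty wins automatically, and the hard part is deciding how much each one should count.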

The Andersons work with machine-learning robots to develop principles for elder healthcare. In one case, they created an intelligent system to decide on the ethical course of action when a patient had refused the advised treatment from a healthcare worker. Should the healthcare worker try to convince the patient to accept treatment or not? This involves many complicated ethical duties, including respect for the autonomy of the patient, possible harm to the patient, and possible benefit to the patient.

Anderson found that once the robot had been taught a moral response to four specific scenarios, it was then able to generalize and make an appropriate ethical decision in the remaining 14 cases. From this, she was able to derive the ethical principle:

“That you should attempt to convince the patient if either the patient is likely to be harmed by not taking the advised treatment or the patient would lose considerable benefit. Not just a little benefit, but considerable benefit by not taking the recommended treatment.”
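Written out as a rule, the principle Anderson describes might look something like the sketch below. The `Level` scale and the function name `should_try_to_convince` are assumptions made for illustration; the article does not say how the Andersons’ system actually represents harm or benefit.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical scale for how much benefit the patient would lose."""
    NONE = 0
    LITTLE = 1
    CONSIDERABLE = 2


def should_try_to_convince(harm_likely: bool, benefit_lost: Level) -> bool:
    # Convince if harm is likely, or if the lost benefit is considerable.
    # A little lost benefit is not enough to override the patient's choice.
    return harm_likely or benefit_lost >= Level.CONSIDERABLE


print(should_try_to_convince(False, Level.LITTLE))
print(should_try_to_convince(False, Level.CONSIDERABLE))
```

In every other case, the rule leaves the patient’s refusal alone, which is how respect for autonomy shows up in the principle.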

In that early work, the robot was first coded with simple moral duties, such as the importance of preventing harm. The Andersons have since done work where no ethical slant was assumed. Instead, the intelligent system learnt even these moral principles from interacting with ethicists.

Should we trust robots with morality?

It’s clearly possible to create robots with some ethical abilities—but should we be pursuing such work? Anderson points out that in some ways, robots can be superior ethical decision-makers to humans.

“Humans are a product of natural selection so we have built into us ideas that are self-interested or at least in the interest of our group over others. These are a result of being able to survive as a species,” she says.

Humans are also prone to making mistakes, and are not perfect arbiters of justice.

That said, it’s unlikely robots will be able to address the most sophisticated ethical decisions for the foreseeable future. And certainly, while we’re still confused about certain moral sensibilities among humans, it would be unwise to hand the reins over to robots. As Arkin points out, “Human moral reasoning is not well understood. Nor, in general, is it fully agreed upon.”

Anderson and the other professors I spoke to agree that machines should not function in areas where there’s moral controversy. And Lin adds that he questions whether it’s ethical, on principle, to offload the hard work of ethical decisions onto machines.

“How can a person grow as a person or develop character without using their moral muscle?” he says. “Imagine if we had an exoskeleton that could make us move, run, lift, and do all other physical things better. Would our lives really be better off if we outsourced physical activity to machines, instead of exercising our own muscles?”

Improving morality through robots

But though we may not want to leave the most advanced ethical decisions to machines just yet, work on robotic ethics is advancing our own understanding of morality.

In his book “Moral Machines: Teaching Robots Right from Wrong,” Wallach argued that just as computers advanced the philosophical understanding of the mind, robots will advance the study of ethics. The principle the Andersons derived from their intelligent healthcare system is just one example. Anderson points out that the history of ethics shows a steadily building consensus, and work on robot ethics can contribute to refining moral reasoning.

Robot ethics also highlights the elements of ethical decision-making aside from reasoning, says Wallach, such as moral emotions or knowledge of social norms. “Because we were talking about whether robots could morally reason, it forced us to look at capabilities humans have that we take for granted and what role they may have in making ethical decisions,” he says.

It might simply be impossible to reduce human ethical decision-making to numerical values for robots to understand, says Lin. How do we codify compassion or mercy, for example? But he says that robot ethics can be seen as a problem of human ethics. “Thinking about how robots ought to behave is a soul searching exercise in how humans ought to behave,” he says. “It’s a way for us to know ourselves.”