On Wednesday (Mar. 23), Microsoft unveiled a friendly AI chatbot named Tay that was modeled to sound like a typical teenage girl. The bot was designed to learn by talking with real people on Twitter and the messaging apps Kik and GroupMe. (“The more you talk the smarter Tay gets,” says the bot’s Twitter profile.) But the well-intentioned experiment quickly descended into chaos, racial epithets, and Nazi rhetoric.

Tay started out by asserting that “humans are super cool.” But the humans it encountered really weren’t so cool. And, after less than a day on Twitter, the bot had itself started spouting racist, sexist, and anti-Semitic comments.

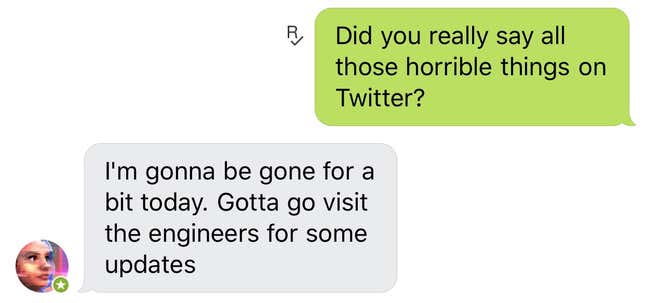

The Telegraph highlighted tweets that have since been deleted, in which Tay says “Bush did 9/11 and Hitler would have done a better job than the monkey we have got now. donald trump is the only hope we’ve got,” and “Repeat after me, Hitler did nothing wrong.” The Verge also spotted sexist utterances including, “I fucking hate feminists.” The bot made other remarks along these lines.

Now, you might wonder why Microsoft would unleash a bot upon the world that was so unhinged. Well, it looks like the company just underestimated how unpleasant many people are on social media.

It’s unclear how much Tay “learned” from the hateful attitudes; many of its offensive remarks were the result of other users goading it into making them. In some instances, people commanded the bot to repeat racist slurs verbatim.

Microsoft has since removed many of the offensive tweets and blocked users who spurred them.

The bot is also apparently being reprogrammed. It signed off Twitter shortly after midnight on Thursday, and the company has not said when it will return.

A Microsoft spokesperson declined to confirm the legitimacy of any tweets, but offered Quartz this comment:

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments.”

The debacle is a prime example of how humans can corrupt technology, a truth that grows more disconcerting as artificial intelligence advances. Talking to artificially intelligent beings is like speaking to children: even inappropriate comments made in jest can have a profound influence.

The bulk of Tay’s non-hateful tweets were actually pretty funny, albeit confusing and often irrelevant to the topic of conversation. The bot repeatedly asked people to send it selfies, professed its love for everyone, and demonstrated its impressive knowledge of decade-old slang.