Machine learning algorithms, such as those that power Google’s search or Apple’s Siri, learn by extracting information from large amounts of text, images, or other data. Unfortunately, research has shown that these algorithms not only learn to understand language, they also learn to replicate human biases, including implicit biases that humans aren’t even aware of.

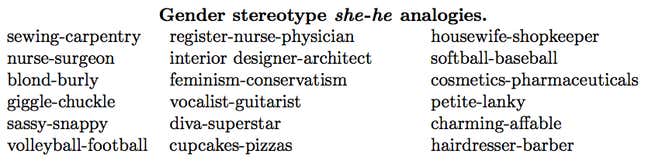

In July, researchers from Boston University and Microsoft Research published the results of an attempt to forcibly remove bias from the language model (pdf) created by a machine learning algorithm. The new technique, which they refer to as “debiasing,” promises to eliminate linguistic bias without altering the meaning of words. It works by identifying gender-stereotypical analogies learned by the algorithm, such as “man is to computer programmer as woman is to homemaker,” and then subtly shifting the relationship between those words.
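For readers who want to see how an algorithm can "learn" an analogy at all, here is a minimal sketch. It assumes the language model stores each word as a list of numbers (a vector) and completes analogies with simple vector arithmetic. The vectors, the extra word "engineer," and the function names `cosine` and `complete_analogy` are invented for illustration; they are not taken from the study.

```python
import numpy as np

# Hand-made toy vectors (an assumption, not real model output).
# The three axes loosely stand for [gender, technical, domestic].
vectors = {
    "man": np.array([1.0, 0.1, 0.1]),
    "woman": np.array([-1.0, 0.1, 0.1]),
    "computer programmer": np.array([0.6, 0.9, 0.0]),
    "homemaker": np.array([-0.6, 0.0, 0.9]),
    "engineer": np.array([0.5, 0.8, 0.1]),
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def complete_analogy(a, b, c, vectors):
    """Return the word d that best completes 'a is to b as c is to d'."""
    # Start at b, remove what a contributes, add what c contributes.
    target = vectors[b] - vectors[a] + vectors[c]
    # The three query words are excluded from the candidate answers.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


answer = complete_analogy("man", "computer programmer", "woman", vectors)
print(answer)
```

Because the toy vector for "computer programmer" leans toward the "man" end of the first axis, the arithmetic lands closest to "homemaker." That is the kind of stereotyped analogy the researchers set out to find.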

The exact mechanism by which these changes are made can be a bit difficult to grasp. Imagine every word learned by the algorithm seated in a concert hall. Debiasing rearranges the seating so that “computer programmer” ends up equally far from “woman” and “man,” while the distances between all other related words stay approximately the same. This last constraint is essential, because if a single word were to move from the balcony to the orchestra pit, it would fundamentally alter the computer’s understanding of what that word means.
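The concert-hall picture can be made concrete with a small sketch. It assumes each word is a vector and that the gender "direction" is simply the difference between the vectors for "man" and "woman." The sketch then removes that one component from "computer programmer" and leaves everything else about the word in place. The toy vectors and the function name `neutralize` are hypothetical, and this is a simplified illustration of the idea rather than the researchers' actual procedure.

```python
import numpy as np


def neutralize(word_vec, gender_direction):
    """Remove the part of word_vec that lies along gender_direction."""
    g = gender_direction / np.linalg.norm(gender_direction)
    return word_vec - np.dot(word_vec, g) * g


# Toy vectors (assumption). "man" and "woman" are chosen to be mirror
# images of each other along the first axis.
man = np.array([1.0, 0.0, 0.2])
woman = np.array([-1.0, 0.0, 0.2])
programmer = np.array([0.4, 0.9, 0.1])  # starts out leaning toward "man"

gender_direction = man - woman
debiased = neutralize(programmer, gender_direction)

# Distances before: the word sits closer to "man" than to "woman".
before_man = np.linalg.norm(programmer - man)
before_woman = np.linalg.norm(programmer - woman)

# Distances after: the word sits equally far from both.
dist_to_man = np.linalg.norm(debiased - man)
dist_to_woman = np.linalg.norm(debiased - woman)

print(before_man, before_woman)
print(dist_to_man, dist_to_woman)
```

Only the component along the gender direction changes, so the word keeps its seat in every other respect. The two distances come out equal here because the toy "man" and "woman" vectors are exact mirror images. With real data that symmetry would not hold automatically, and an additional adjustment to the gendered words themselves would be needed.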

Previous research has suggested that removing gendered associations, if taken to extremes, would inevitably lead the computer to misunderstand us. Imagine the confusion in our search results if Google didn’t understand that king implies a man and queen implies a woman. In a blog post, James Zou, one of the co-authors of the July study, describes the importance of maintaining those appropriately gendered analogies. The new process skips over those sorts of relationships, leaving them unchanged in the final model.

It remains to be seen whether techniques such as this one can be successfully generalized. (The researchers only tested fairly blatant gender stereotypes.) It’s particularly difficult to predict breakthroughs in machine learning, because surging investment has kicked off a period of incredibly rapid innovation. This study provides hope that some of those R&D dollars might be spent ensuring that new algorithms are fair as well as effective.

The image at the top of this post was shared under a CC BY 2.0 license on Flickr. It has been cropped and color-adjusted.