Facebook said Dec. 15 that it is taking steps to weed out “the worst of the worst” fake news from its platform.

True to Mark Zuckerberg’s belief that Facebook should not become an “arbiter of truth,” these changes are being made cautiously and at arm’s length. Rather than appoint an internal team to judge the validity of stories, Facebook is partnering with third-party fact-checking organizations (including PolitiFact, Snopes, and the Washington Post) that adhere to standards set by Poynter’s International Fact-Checking Network. And rather than have its employees and algorithms flag content as false, Facebook is making it easier for users to report any posts or stories they believe to be hoaxes.

“We believe in giving people a voice and that we cannot become arbiters of truth ourselves, so we’re approaching this problem carefully,” Adam Mosseri, Facebook’s VP of news feed, wrote in an online update. “We’ve focused our efforts on the worst of the worst, on the clear hoaxes spread by spammers for their own gain, and on engaging both our community and third party organizations.”

Facebook has been widely criticized for its role in spreading propaganda, hyperpartisan content, and demonstrably false stories ahead of the US presidential election. After initially shrugging off the idea that fake news could have influenced the election, Zuckerberg vowed to “take misinformation seriously” and outlined several ways the company planned to combat it, including banning publishers of fake news from Facebook’s advertising network.

Still, bogus content on Facebook is arguably a symptom of a bigger problem: the “filter bubbles” created by blogs, social media, and other distribution platforms that help people consume only information that appeals to their existing biases and opinions. These ideological echo chambers thrive on Facebook, where the news feed algorithm is designed to surface the content most relevant and appealing to you. Such personalized news could also undermine the tools to curb fake news that Facebook is now rolling out.

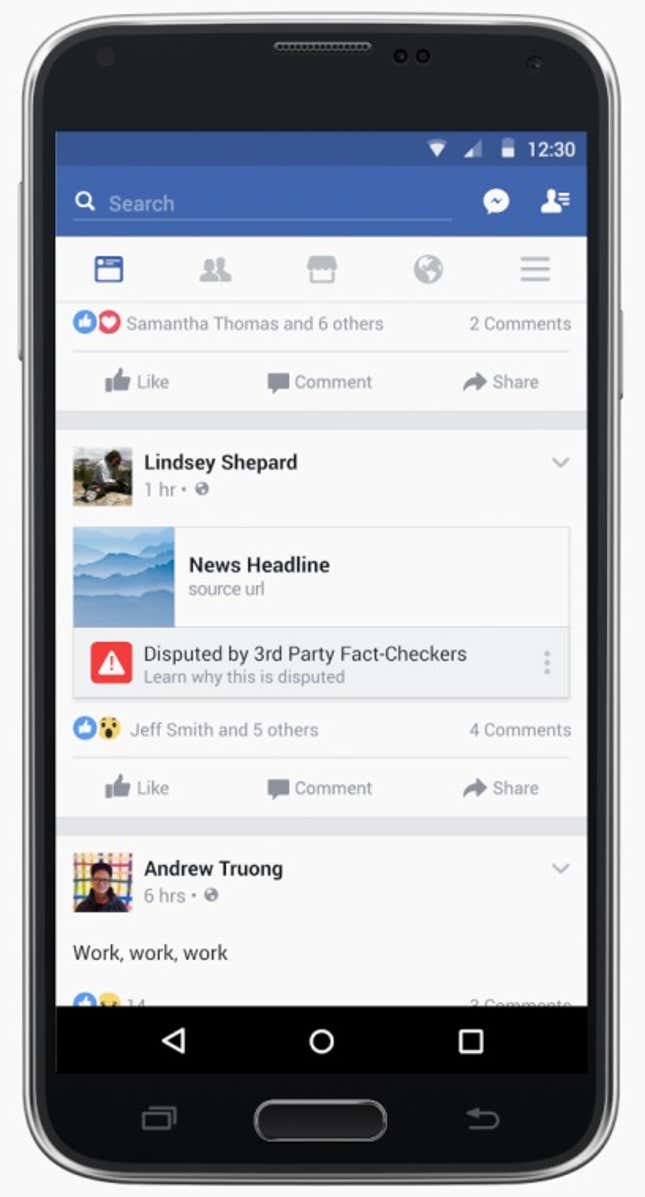

Take user-generated reporting. Facebook showed images of how users will be able to report apparent hoaxes by clicking on the upper-right-hand corner of a post. The company says it will use those reports, “along with other signals,” to send stories to third-party fact-checkers who will work to determine whether those stories are fake. Stories found to be fake by fact-checkers will be flagged as “disputed” to users and will lead to a pop-up warning about accuracy before they can be shared. Disputed stories “may also appear lower in News Feed,” Facebook said.

One obvious danger of that approach is that Facebook’s users end up reporting stories along ideological lines rather than factual ones. It’s easy to see that happening when American trust in media is at an all-time low, and partisan sites like Breitbart are quick to declare reporting from established outlets like the New York Times a “sham.”

Or consider the partnership with third-party fact-checkers more specifically. People who don’t trust media outlets to be disinterested observers will recoil at Facebook handing over the “arbiter of truth” role to those sorts of fact-checkers. “The idea that Facebook is going to let a handful of self-interested organizations decide what’s fake is ridiculous,” Aaron Renn, a senior fellow at the Manhattan Institute, a free-market think tank, tweeted shortly after Facebook’s announcement this afternoon.

The matter of fake news is of clear concern to the American public: 64% of US adults say fabricated news stories cause “a great deal of confusion” about basic facts of current events, according to data published yesterday by Pew Research Center. Twenty-three percent say they have shared a made-up news story at some point, and 16% say they shared a story that they only found out later was false.