How Facebook can cut down on fake news without relying on thousands of humans to decide what is true

The world’s most powerful news provider has a fake news problem—and it doesn’t appear to know what to do about it.

After Donald Trump secured an unexpected victory in the US presidential election, Facebook’s role in enabling his campaign has come under the spotlight. Not only did the social network create an “echo chamber” where users only see information that reinforces their existing biases, it also disseminated information that was patently false, and which often aided Trump. Content creators looking for easy clicks and ad revenue gamed the system, publishing fake but viral stories like “Pope Francis Forbids Catholics From Voting For Hillary!” which were shared hundreds of thousands of times on Facebook.

Now the company seems divided internally about its next steps. CEO Mark Zuckerberg issued a statement claiming that “more than 99% of what people see is authentic” on the social network, but employees disagree. Dozens of staff members have formed a secret internal task force to combat the problem, according to BuzzFeed. The company had created tools to deal with the problem earlier this year but deliberately did not deploy them, Gizmodo reports, fearing the reaction from conservative outlets, which were disproportionately targeted (because they had more fake news).

On Monday (Nov. 14) the company did make one major change, banning fake news publishers from its ad network. But it hasn’t introduced new measures to prevent what they publish from appearing on your Facebook News Feed. This means these companies may make less money from Facebook. But because users are concerned about what shows up in News Feed, not outside of it, the ban may do little to address their grievances.

Zuckerberg framed the issue on Nov. 13 as a problem with truth itself, which isn’t always clear-cut, and suggested that an influential private company deciding for readers what is true and what is false risks going down a slippery slope.

Identifying the “truth” is complicated. While some hoaxes can be completely debunked, a greater amount of content, including from mainstream sources, often gets the basic idea right but some details wrong or omitted. An even greater volume of stories express an opinion that many will disagree with and flag as incorrect even when factual. I am confident we can find ways for our community to tell us what content is most meaningful, but I believe we must be extremely cautious about becoming arbiters of truth ourselves.

For Facebook, employing a team of human editors to vet for the “truth” might not only be unfeasible given the amount of information it handles, but also undesirable after the company’s Trending News debacle. Here are some concrete, specific measures Facebook could take to make sure links shared on its site don’t spread outright lies, without trespassing into murky ethical issues of restricting freedom of speech.

- Crack down on Facebook pages that make money by spreading lies

This election cycle saw a surge in Facebook pages that manufactured misleading memes, drove users to outside websites with fake news, and then collected revenue from the traffic. One conservative-leaning page, called Make America Great, was reaching about 1.7 million people daily by sharing exaggerated or fabricated news stories from other sites, the New York Times reported. In July 2016, the page’s founder earned $30,000 per month in revenue, the paper reported.

Facebook should take measures to de-prioritize results coming from pages its algorithm determines to be unreliable.

One way to do this, according to Azeem Azhar, a writer and investor in artificial intelligence companies, involves examining how long pages have been in existence (the longer, the more likely they are to be reliable), where the content they share originates from (Is it a generally credible mainstream news source? Is it well-linked to elsewhere on the internet?) and the profile of people clicking on it (Do they read about SpaceX, or space aliens?).

Pages that distribute information with the right “trust signals” for reliability will see their pieces placed accordingly in the News Feed. Pages that don’t have strong trust signals will see their distribution reduced.

“There are certain extensive trust signals generated over time that are like reputation. If we see those signals attached to a piece of content, it tells us a lot about that content,” says Azhar. “Imagine you have a story about some kind of brain cancer. Then, that story is being shared by a lot of neurosurgeons. Would you look at the veracity or the importance of that story because it’s being shared by neurosurgeons, versus a bunch of celebrities?”
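The trust-signal idea Azhar describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PageSignals` fields, the weights, the five-year saturation point, and the `distribution_multiplier` floor are all hypothetical, and nothing here reflects how Facebook's actual ranking works.

```python
from dataclasses import dataclass


@dataclass
class PageSignals:
    """Hypothetical per-page inputs, each share expressed as a value from 0 to 1."""
    age_days: int                 # how long the page has existed
    credible_source_share: float  # share of links from generally credible sources
    inbound_link_share: float     # share of content that is well linked elsewhere
    audience_reliability: float   # proxy for the profile of people clicking


def _clamp(value: float) -> float:
    """Keep a signal inside the 0..1 range."""
    return max(0.0, min(1.0, value))


def trust_score(signals: PageSignals) -> float:
    """Combine the signals into one score from 0 to 1 (weights are assumptions)."""
    age = _clamp(signals.age_days / (5 * 365))  # age stops mattering after five years
    return (
        0.25 * age
        + 0.35 * _clamp(signals.credible_source_share)
        + 0.20 * _clamp(signals.inbound_link_share)
        + 0.20 * _clamp(signals.audience_reliability)
    )


def distribution_multiplier(score: float, floor: float = 0.2) -> float:
    """Scale reach by trust: low-trust pages are reduced, not removed."""
    return floor + (1.0 - floor) * _clamp(score)


established = PageSignals(3650, 0.9, 0.8, 0.7)
newcomer = PageSignals(90, 0.1, 0.05, 0.2)
for name, page in (("established", established), ("newcomer", newcomer)):
    score = trust_score(page)
    print(name, round(score, 3), round(distribution_multiplier(score), 3))
```

The floor in `distribution_multiplier` is a deliberate design choice in this sketch: a page with weak signals sees its distribution reduced rather than eliminated, which matches the article's emphasis on de-prioritizing content instead of deleting it.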

- Make its existing community tools to combat hoaxes more effective

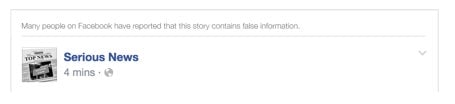

A feature released in 2015 lets users flag stories as potential hoaxes. When an unspecified number of users label a story as a hoax, it gets “reduced distribution” and is less likely to appear in one’s News Feed. In addition, when a specific popular post has been flagged as inaccurate, Facebook puts a disclaimer above the piece that reads “Many people have reported that this story contains false information” in small, faint grey font.

Yet how many times did viewers see this disclaimer over the election cycle? Facebook might consider making this existing system more sensitive to user flags, or making its disclaimers more prominent.
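To make "more sensitive to user flags" concrete, here is a minimal Python sketch of a flag-rate rule. Facebook has not disclosed how many flags trigger reduced distribution, so the `Story` type, the `min_flags` and `flag_rate_threshold` parameters, and their default values are assumptions chosen purely for illustration. Only the disclaimer text comes from the feature described above.

```python
from dataclasses import dataclass
from typing import Optional

DISCLAIMER = "Many people have reported that this story contains false information"


@dataclass
class Story:
    views: int       # how many times the story was shown
    hoax_flags: int  # how many users reported it as a hoax


@dataclass
class Decision:
    reduced_distribution: bool
    disclaimer: Optional[str]


def review_flags(
    story: Story,
    flag_rate_threshold: float = 0.01,  # hypothetical: 1% of viewers flagged it
    min_flags: int = 50,                # hypothetical: ignore a handful of reports
) -> Decision:
    """Reduce distribution and attach the disclaimer once flags pass both limits."""
    rate = story.hoax_flags / story.views if story.views > 0 else 0.0
    flagged = story.hoax_flags >= min_flags and rate >= flag_rate_threshold
    return Decision(flagged, DISCLAIMER if flagged else None)


borderline = Story(views=100_000, hoax_flags=60)
print(review_flags(borderline))                              # default sensitivity
print(review_flags(borderline, flag_rate_threshold=0.0005))  # more sensitive setting
```

Lowering `flag_rate_threshold` is one way to model a system that reacts to fewer reports. The `min_flags` guard is included because a rate alone would let a few coordinated reports suppress a story with very few views.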

- Link to alternate sources of information in “Related Links”

Facebook is under-utilizing a little-noticed feature in News Feed, says Lokman Tsui, a professor at the School of Journalism and Communication at the Chinese University of Hong Kong. Currently, whenever users click on a story that appears in the feed, a tab called “Related Links” opens up below the space where the original story’s link appeared. When bona fide hoaxes or unverifiable stories surface, Facebook might consider opening up Related Links by default and linking out to sites like Snopes.com or media outlets with opposing viewpoints. This could help keep Facebook users better informed about the likely veracity of the content they see, if they do indeed see information that’s proven false or possibly false.

“You have these fact-checking organizations that verify all kinds of news,” says Tsui. “Facebook could link to the news, and say ‘Here’s the news, now here are some links to credible fact check organizations.’”

- Devise and list a thorough procedure for identifying and managing misinformation

This is perhaps the most important step Facebook can take, and its biggest failure to date. Facebook remains a black box in regard to how its algorithm prioritizes not just news or memes, but nearly everything that’s shared in its main feed. With regard to truthfulness, however, it’s especially lacking. The social network has entire pages devoted to how it deals with harassment and hate speech, and a transparent way for users to report these things. It also has a page where it publishes the number of requests it has received from governments looking to obtain information about its users.

Facebook’s activities in both these areas have been criticized, but at least they exist. There is no comparable, detailed explanation of how it deals with fake information.

Facebook might also consider allowing third parties to occasionally review its algorithms and procedures for how effectively they vet hoaxes (or hate speech, or pornography), and then have them release reports on how well they live up to the standards the company sets for itself.

An imperfect but better Facebook

Facebook did not answer questions about the specific measures it has taken to improve its existing technology for detecting hoaxes, or how many people have been devoted to it or will be in the future.

While it clearly needs to do more to wipe out misinformation, no one wants to see Facebook become an “arbiter of truth” the way, for example, heavily censored Chinese social networks remove information that criticizes the government.

The public benefits when it is exposed to an abundance of information and ideas and left to come to its own conclusions. A myriad of information exists on the internet, some of it good, some of it not good, and it’s all one click away from Facebook.

Just as Facebook has perfected a bias towards showing us information that panders to our political beliefs, it can strive to perfect a bias towards presenting the truth—even if it’s an imperfect or inconsistent bias. Tsui says it’s important Facebook doesn’t become “Big Daddy, keeping what they think is true and removing what they think is not true.” Instead, he believes it “can do a lot more and should do a lot more to help [users] make decisions” about the truthfulness of what they read.