The amazing sorcery of the new iPhone cameras

The camera on the first iPhone in 2007 was nothing revolutionary, even for the time. It was 2 megapixels. You couldn’t zoom in on the photos, not even digitally. There was no flash. There was no editing of pics. And there was a single camera on the back—there was no front-facing camera for selfies, which had not yet been invented. Well, not really (paywall).

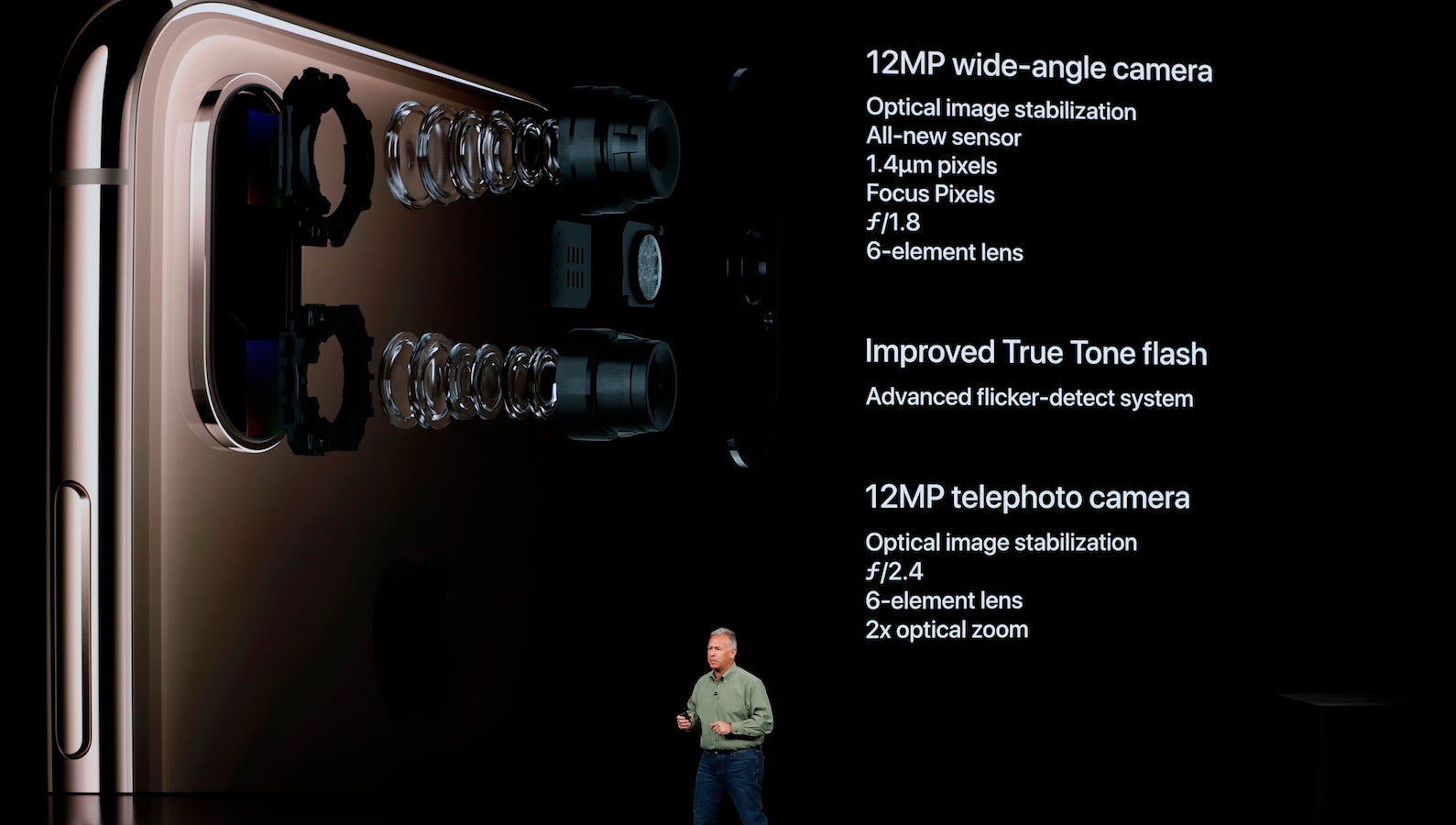

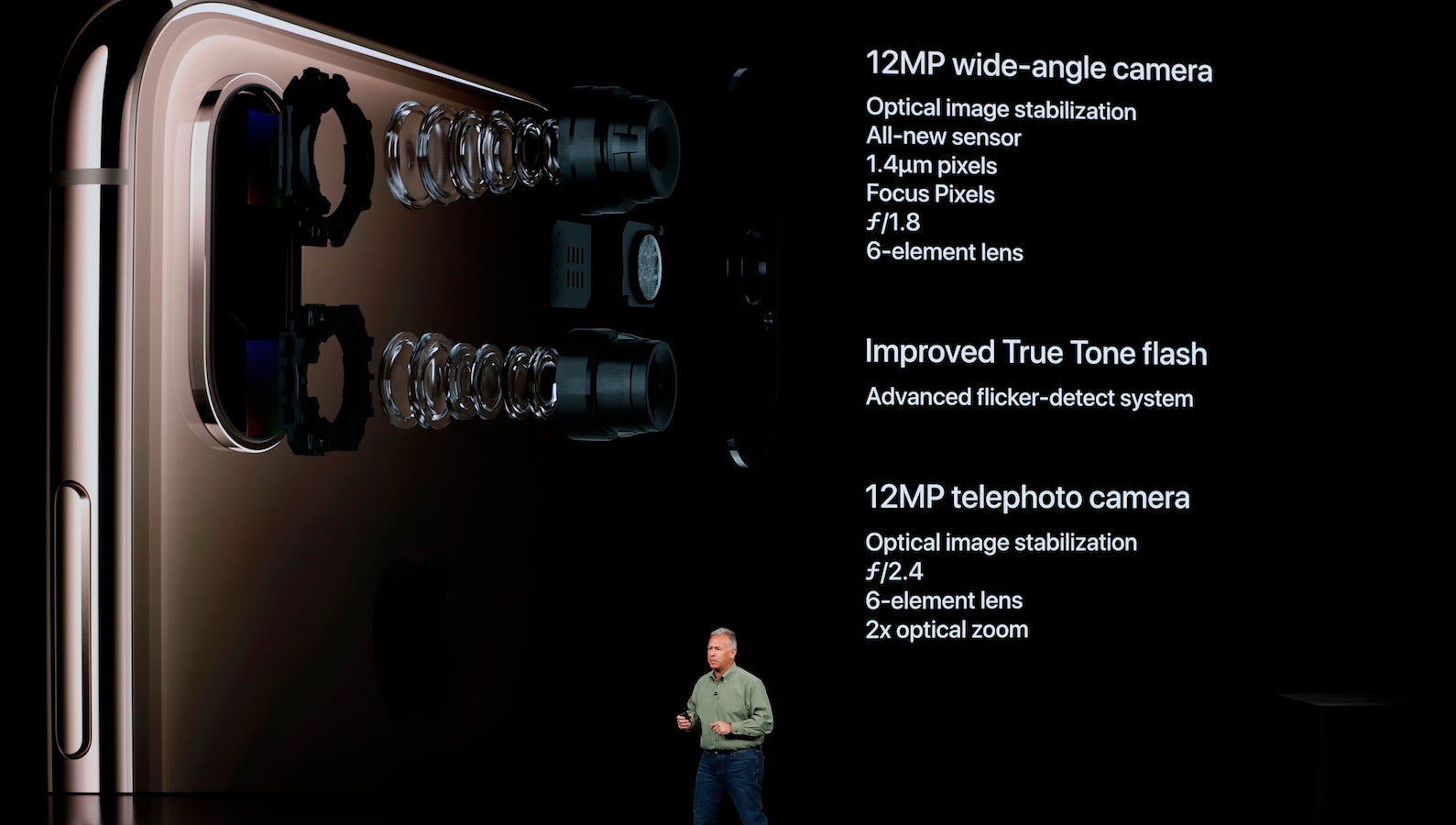

Fast forward 11 years and the iPhone XS has three cameras—two 12-megapixel ones on the back and one that is 7 megapixels on the front. Where there was once no flash, the flash now comes with four LEDs. More impressive is what those cameras can do, and how far they’ve come in relation to traditional photography and DSLR digital cameras.

The glass

The new flagship iPhone XS and XS Max come with dual-lens systems—one wide-angle and one telephoto. The iPhone XS wide-angle lens has an equivalent focal length of 26mm, while the telephoto has the equivalent of a 52mm lens. We say the “equivalent of” because, in real cameras, the focal length is a measure of where the light rays would converge to form an image on a frame of standard 35mm film or on the sensor of a full-frame DSLR.

But because smartphones are much smaller, the actual focal length of the iPhone wide-angle lens is 4.25mm. The telephoto is 6mm. Apple is able to achieve photos that look similar to what full-sized cameras can create through improved lenses and a better combination of hardware and software.

It was Apple blogger John Gruber, in his review of the new iPhone, who pointed out that the focal length on the iPhone XS has actually been updated from the previous model and was the equivalent of 26mm, not 28mm anymore. (The iPhone X’s wide-angle focal length was 4mm; the telephoto focal length has remained the same as with 2017’s iPhone X.) He noticed the pictures with his new XS seemed wider than with the X, but that the metadata attached to the photos showed a longer focal length.

He contacted Apple and they confirmed that the focal length was longer yet the camera was taking wider pictures, which suggested a much, much larger sensor was being used. “That seemed too good to be true,” Gruber wrote. “But I checked, and Apple confirmed that the iPhone XS wide-angle sensor is in fact 32% larger. That the pixels on the sensor are deeper, too, is what allows this sensor to gather 50% more light.”
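Gruber's inference can be roughly checked with nothing more than the focal lengths quoted above. The sketch below is a back-of-the-envelope calculation, not Apple's own figures: it assumes both sensors share the same aspect ratio, so that sensor area scales with the square of the ratio between the two "crop factors" (equivalent focal length divided by actual focal length). The function name `crop_factor` is made up for illustration.

```python
# Back-of-the-envelope check using only the focal lengths quoted in the article.
# Assumption: both sensors have the same aspect ratio, so area scales with
# the square of the linear size.

def crop_factor(equivalent_mm, actual_mm):
    """Ratio of the 35mm-equivalent focal length to the real focal length."""
    return equivalent_mm / actual_mm

xs_crop = crop_factor(26, 4.25)  # iPhone XS wide-angle
x_crop = crop_factor(28, 4.0)    # iPhone X wide-angle

# A smaller crop factor means a physically larger sensor.
linear_ratio = x_crop / xs_crop
area_ratio = linear_ratio ** 2

print(f"iPhone XS crop factor: {xs_crop:.2f}")
print(f"iPhone X crop factor:  {x_crop:.2f}")
print(f"Sensor area, XS vs. X: {area_ratio:.2f}x")
```

The area ratio comes out at about 1.31, which is in the same ballpark as the 32% figure Apple confirmed. The small gap is what you would expect from working with rounded focal lengths.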

The increased sensor size means that, even though the photos are still 12 megapixels (that’s six times the original), more light is reaching the larger pixels, and so each is much richer in information. And still, these sensors are nowhere near the size of what goes into a full-frame DSLR.

That bump

Since the release of the iPhone 6 in 2014, the iPhone has had a “bump” on the back where the camera protrudes rather than sitting flush, as it did on previous generations of the phone. The bump allows the lens to sit further away from the sensor and gives you higher-quality pictures similar to what you get in a proper camera. Apple’s chief designer Jony Ive once described this (paywall), through what sounds like gritted teeth, as “a really very pragmatic optimization.”

Gruber notes that the latest iPhone XS’s bump is the same size as the X, despite the bigger sensor and a longer wide-angle lens, which involved re-architecting the innards of the phone. “Apple managed not only to put a 32% larger sensor in the iPhone XS wide-angle camera, but also moved the sensor deeper into the body of the phone, further from the lens,” he said. (Perhaps this was achieved by the shrinking of the battery.)

From the longer focal length to the larger sensor to maintaining the same-sized body, all those improvements were achieved in a year—and are being produced at scale in the hundreds of millions.

The code

The most impressive leaps in the iPhone come not through the glass on the front, but via the software inside.

Professional photography is often distinctive for the depth of field, usually with the subject being in sharp focus and the background being blurred. That blurry background is often referred to as bokeh, but that’s not correct; it’s the visual quality of the blur that is known as bokeh. Apple has focused a lot on manufacturing good bokeh that is pleasing to the eye, even if its executives sometimes have trouble pronouncing it.

Wide-angle lenses tend to put everything in focus. The iPhone XS’s dual-lens system—sold by Apple since the bigger iPhone 7 Plus was launched in 2016—combines pictures taken by both lenses and uses software to create the depth of field we’re used to from those nice older cameras. The iPhone does this by using its A12 chip and “neural engine” to perform 1 trillion “operations” per photo, like auto exposure, focus, noise reduction, face detection, and so on.

Since last year, the iPhone automatically uses software to adjust the lighting after the fact on photos taken in Portrait mode, mimicking the kind of stylized lighting you see on magazine covers… and in museums. “If you look at the Dutch Masters and compare them to the paintings that were being done in Asia, stylistically they’re different,” Johnnie Manzari, a designer on Apple’s Human Interface team, told BuzzFeed News last year. “We had some engineers trying to understand the contours of a face and how we could apply lighting to them through software, and we had other silicon engineers just working to make the process super-fast. We really did a lot of work.”

There’s also Smart HDR, which captures three images at different exposures and combines them. And Apple’s new Depth Control feature allows users to dynamically adjust the depth of field—both in real-time in the preview and then even after the photo is taken, allowing you to manipulate the bokeh just so. You can also adjust the depth of field on the front-facing camera, for taking the ultimate selfie.
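To get a feel for what combining exposures means, here is a toy sketch. Apple has not published how Smart HDR works, so this is not its algorithm; it is a simplified take on a generic technique, in which each pixel from each frame is weighted by how close it sits to mid-gray before the frames are averaged. The function names, the `mid` and `sigma` parameters, and the sample brightness values are all invented for illustration.

```python
import math

def well_exposedness(value, mid=0.5, sigma=0.2):
    """Weight that peaks for mid-gray pixels and falls off near black or white."""
    return math.exp(-((value - mid) ** 2) / (2 * sigma ** 2))

def merge_exposures(frames):
    """Blend same-sized frames (lists of 0.0-1.0 brightness values) pixel by pixel."""
    merged = []
    for pixels in zip(*frames):
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)  # always positive, since exp() never returns zero
        merged.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return merged

# Three hypothetical exposures of the same four-pixel scene.
under = [0.02, 0.10, 0.30, 0.55]   # short exposure: shadows crushed
normal = [0.10, 0.35, 0.70, 0.98]  # standard exposure
over = [0.40, 0.80, 1.00, 1.00]    # long exposure: highlights blown out

result = merge_exposures([under, normal, over])
print([round(v, 2) for v in result])
```

In the darkest pixel, the blend leans on the long exposure, where the shadow detail is visible. In the brightest pixel, it leans on the short exposure, where the highlights have not blown out.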

How good is the iPhone at creating beautiful photography via software? So good that many of those features—like Portrait mode and Depth Control—are coming to its cheaper XR model, despite the fact that the XR only has a single wide-angle camera—just like the original iPhone. Which means that Apple has been able to mimic some of the qualities of a dual-lens system and bring them to a single-lens camera for the first time, purely by using software. Which is quite something.

And we haven’t even talked about the camera in motion.