An artificially intelligent, open-source bionic leg could change the future of prosthetics

The earliest prosthetics were decidedly “dumb” devices: a 3,000-year-old wooden toe, found in Egypt; a knife in the place of a hand in medieval Italy; a 16th-century “Tin Man” arm, fashioned out of iron.

The future, of course, is smart. The most sophisticated modern prostheses go far beyond replicating the general contours of a missing limb or body part. Instead, they respond to the thoughts of their user, whether she wishes to pick something up or kick a soccer ball.

Open-source projects to develop smart prosthetics for the upper body, such as hands, are well-established parts of the bionic landscape. Now, legs get to join the party, thanks to the efforts of scientists Levi Hargrove of the Shirley Ryan AbilityLab and Elliott Rouse of the University of Michigan.

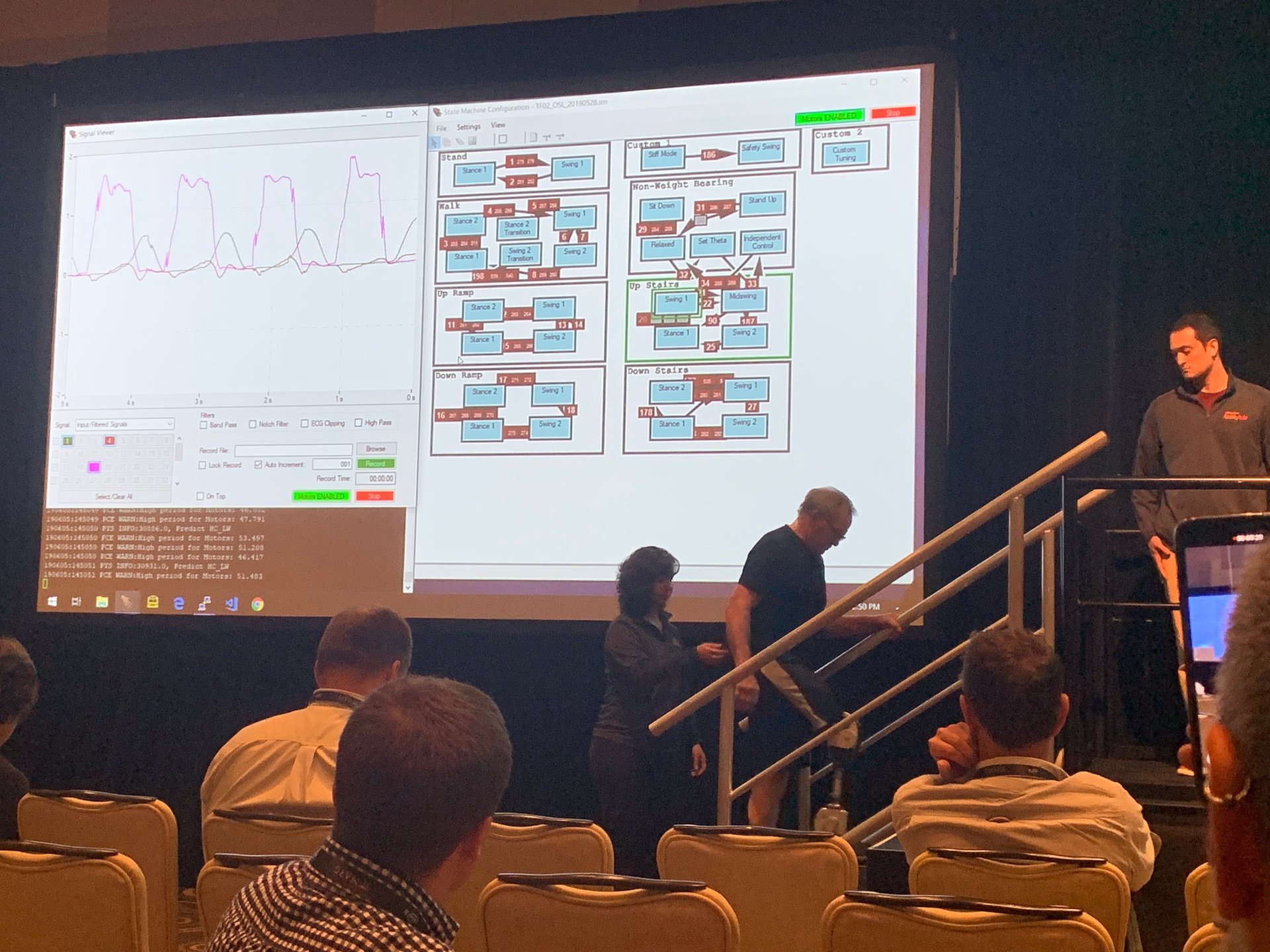

An open-source, artificially intelligent prosthetic leg was unveiled at Amazon’s Re:MARS conference in Las Vegas this afternoon (June 5) ahead of its release to the wider scientific community. It’s hoped that researchers and patients will work collaboratively to improve the leg, via its free-to-copy design and programming. (The current price to build it as specified is $28,500, including the Raspberry Pi that powers its AI; patients are not advised to see it as a “build-at-home solution.”)

Bionic legs are frustratingly tricky. Maintaining balance is hard work; the additional stress of supporting a patient’s body adds still more intricacy to the equation. Then, of course, there are all the things that legs are expected to do in the course of movement: cuts, pivots, turns. The flick of an ankle is far more complicated than it looks. The key to making it work, then, is AI. The Raspberry Pi-powered, AI-based control system combines muscle contraction signals with sensor data from within the bionic leg to guess what a user is going to do next, and responds accordingly.
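For readers curious what "guessing what a user is going to do next" might look like in code, here is a minimal sketch. It is not the project's actual controller: the feature layout, the activity labels, the training numbers, and the nearest-centroid method are all assumptions chosen for illustration. It only shows the general idea of fusing a muscle-signal feature with leg sensor readings and picking the most likely intended movement.

```python
import math

# Hypothetical feature layout (an assumption, not the real leg's data format):
# [muscle signal mean absolute value, knee angle in degrees, shank angular velocity in deg/s]
TRAINING = {
    "level_walk":   [[0.20, 10.0, 50.0], [0.25, 12.0, 55.0]],
    "stair_ascent": [[0.60, 40.0, 30.0], [0.65, 45.0, 35.0]],
    "sit_down":     [[0.10, 80.0, -20.0], [0.12, 85.0, -25.0]],
}

# Rough per-feature ranges used to put features on a comparable scale (illustrative values).
SCALE = (1.0, 90.0, 100.0)


def mean_absolute_value(emg_window):
    """Summarize a window of raw muscle-signal samples as one number."""
    return sum(abs(sample) for sample in emg_window) / len(emg_window)


def compute_centroids(training):
    """Average the example feature vectors for each activity."""
    centroids = {}
    for label, examples in training.items():
        n = len(examples)
        centroids[label] = [sum(column) / n for column in zip(*examples)]
    return centroids


def predict_intent(features, centroids):
    """Return the activity whose centroid is closest to the current features."""
    def distance(a, b):
        return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALE)))
    return min(centroids, key=lambda label: distance(features, centroids[label]))


if __name__ == "__main__":
    centroids = compute_centroids(TRAINING)
    emg_window = [0.6, -0.7, 0.65, -0.6]           # made-up muscle-signal samples
    features = [mean_absolute_value(emg_window), 42.0, 33.0]
    print(predict_intent(features, centroids))
```

A real system would likely use many more signals, far more training data, and a more capable classifier; the point here is only the shape of the pipeline: read signals, build a feature vector, predict the intended movement, then act on it.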

Seeing the leg in action, however, you’d hardly guess at its complexity. An experimental user, identified as Terry, walked around a Las Vegas conference room to demonstrate how efficiently the leg worked. He was steady on his feet, accompanied as he moved by a vague electronic whooshing sound and the watchful eyes of a therapist. Later, he described the leg as being like a lover. “You’re intimately involved, your thoughts are the same. That’s what this leg is like. It’s a treasure, and a joy,” he said. “It has the potential to make my life much easier and better.”