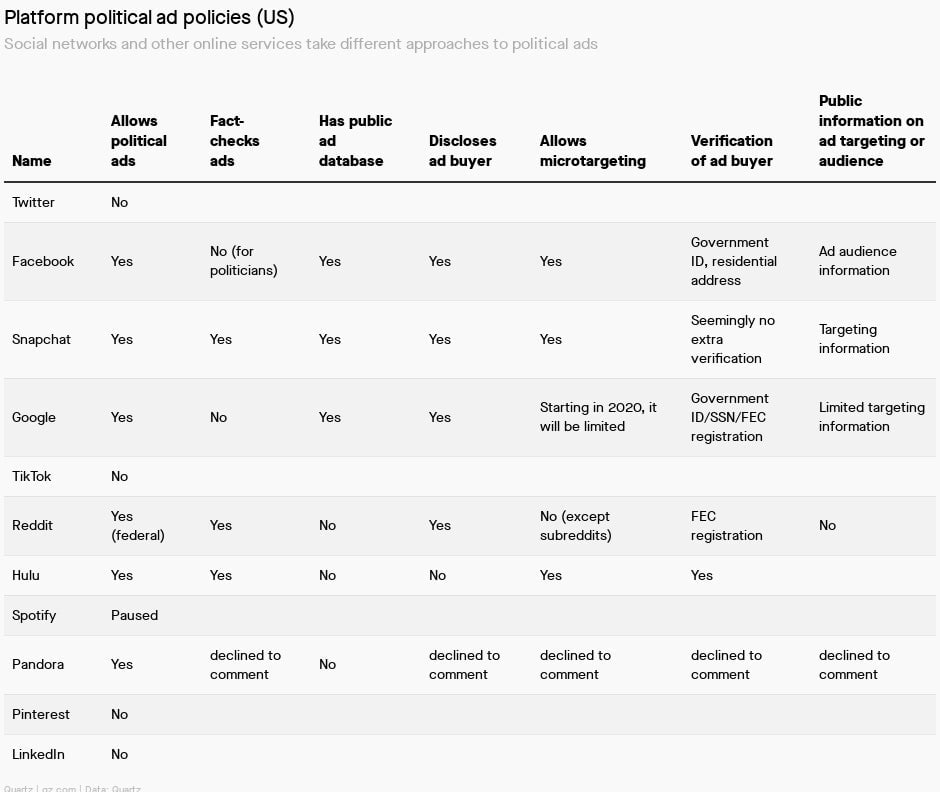

Each platform’s approach to political ads in one table

Leading up to the UK parliamentary elections this week, a staggering number of Facebook campaign ads were deemed misleading by watchdog organizations. This is, in part, due to Facebook’s own policies, which stipulate that the company does not fact-check political advertising.

Facebook made this approach clear recently, with its CEO Mark Zuckerberg saying that private companies should not be censoring politicians. Other major platforms and online services clarified or introduced new political ad policies as well, taking very different tacks.

Some of them, including Twitter, TikTok, and Spotify, have banned or will pause political advertising altogether. In a frank statement to Reuters, a Spotify spokesperson said: “At this point in time, we do not yet have the necessary level of robustness in our processes, systems and tools to responsibly validate and review this content.”

Others, like Snapchat, allow political ads but have said they will check the ads’ veracity. Policies also vary depending on the region of the world.

In the United States, traditional media are more strictly regulated when it comes to accepting political ads, and laws actually dictate what media organizations can and can’t do. For example, broadcast media, such as the TV networks NBC or CBS, are obliged to take any ad for a candidate for federal office. Cable companies have more discretion and can reject ads they deem false. US lawmakers, however, have not passed regulations for online political advertising, leaving any rules up to the platforms themselves.

Here’s how the policies on political advertising for major online platforms break down (or view a scrollable version):

Pandora’s spokesperson said the company would “pass” on Quartz’s request for comment.

Full transparency in online political advertising includes disclosing each ad’s content, detailed information on who paid for it, how the buyer’s identity was verified, how the ad was targeted, and who ended up seeing the ad. None of the platforms are completely transparent, although Facebook, which has faced the most criticism for profiting from political manipulation, has the most built-out political ads database. Facebook’s system, however, is far from perfect: in the UK, days before the recent election, thousands of ads disappeared from the archive. Snapchat’s database is just a downloadable spreadsheet, and Google’s archive is quite clunky to use and has a history of omissions of its own.

Some argue that microtargeting, which allows advertisers to show ads to very specific segments of users—such as young mothers or African-American students—while remaining invisible to everyone else, should be banned completely for political ads. Google said it would only allow basic targeting for its political ads starting in 2020, letting advertisers use general factors like geography, age, and gender. Facebook is reportedly considering the idea as well.

Snapchat allows microtargeting, points out the Financial Times, and recently has seen a rapid rise in political advertising, according to the watchdog group Center for Responsive Politics. And political advertisers are expected to find other ways to microtarget audiences on Google by going through special intermediaries that have access to some targeting tools.

Banning political ads completely seemingly eliminates issues with targeting or fact-checking. But there are problems with this solution as well. Twitter prohibited ads related to any issues it defined as political, which in effect banned advocacy organizations from promoting their causes. And whether a platform allows or bans political ads, and whether it fact-checks them or not, politicians can still use their own free accounts, as Donald Trump does on Twitter, to spread their message.

This post and chart were updated December 27, 2019, to include Spotify’s decision to pause political ads for 2020.