Are AI ethicists making any difference?

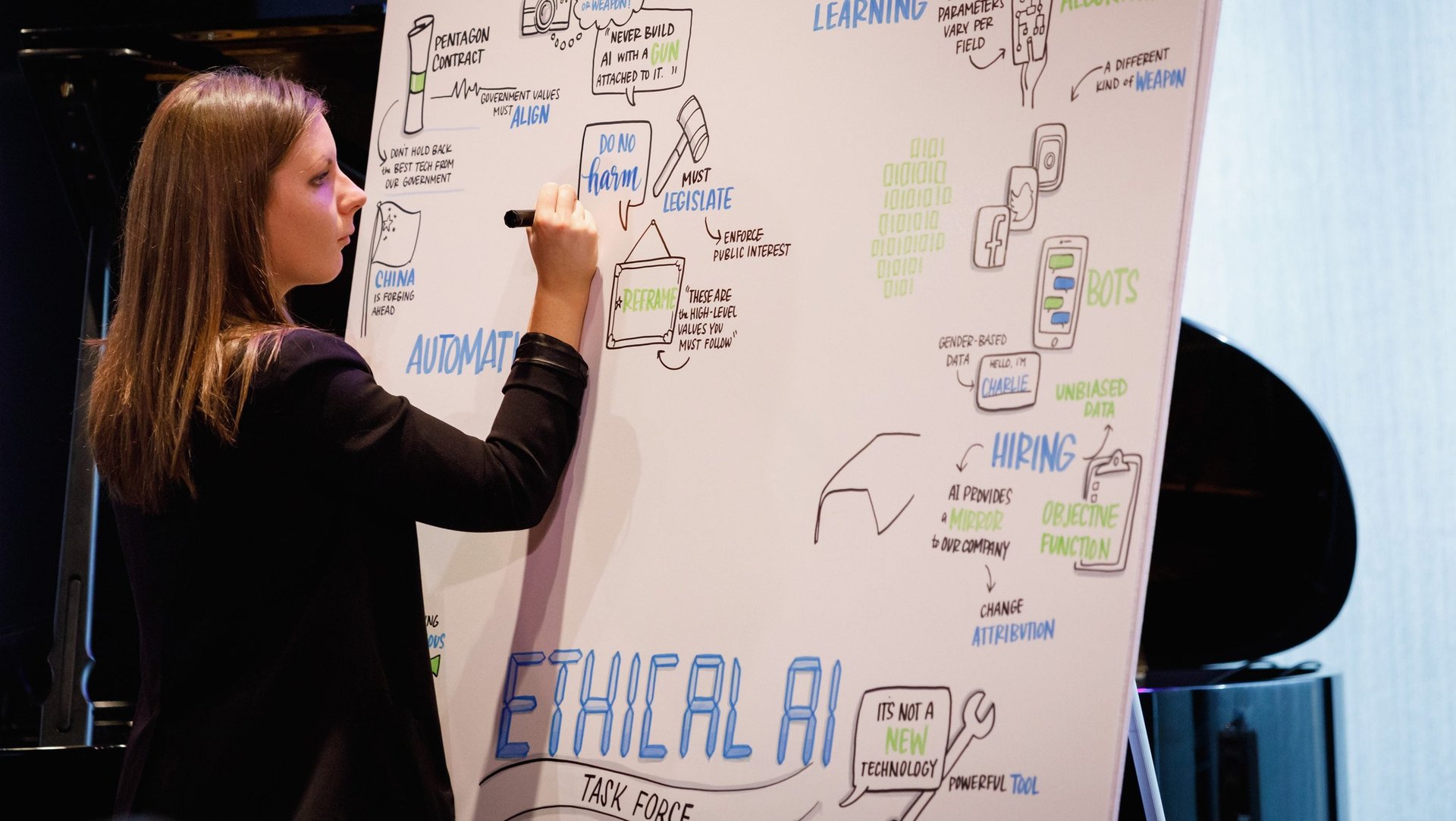

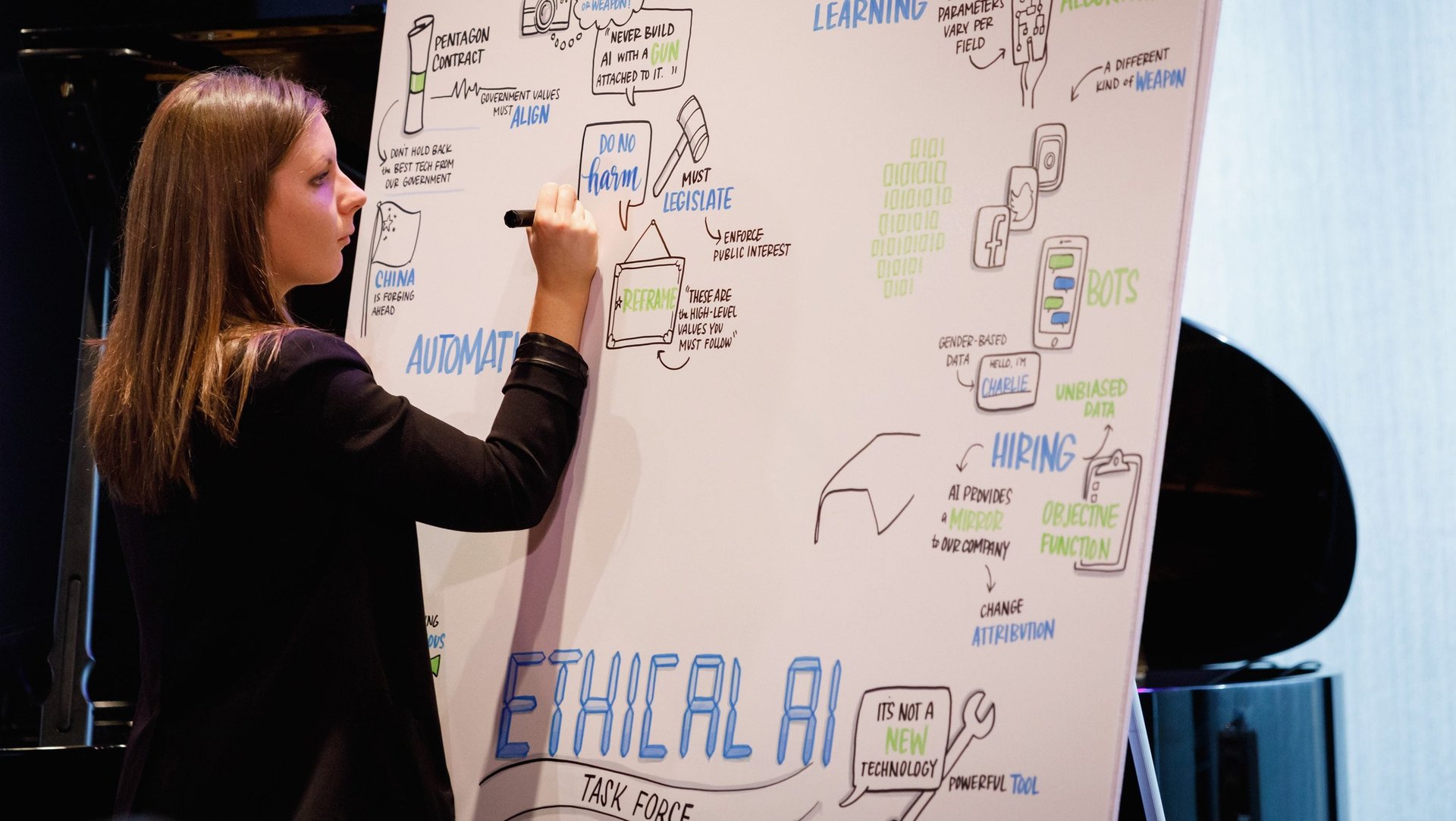

Tech companies, consulting firms, and even the US military are rushing to add ethics boards and hire “AI ethicists.” They’re asking them to think about everything from bias and fairness to the circumstances under which it is acceptable to use autonomous weapons.

It’s a welcome acknowledgment of the harm AI can do.

But many people are skeptical about the role of ethicists in technology companies—including both ethicists and technologists. Trained ethicists in particular have bristled at this new role, often because some “AI ethicists” don’t have formal training in ethics and moral philosophy.

Josh Lovejoy, a leading AI ethicist and designer himself, says that big tech “loves to break words.” Corporate speak—or “garbage language”—changes how we use words: agile used to mean nimble and now it’s a software development process. 2020 could be the year that tech breaks “ethics” and “bias,” Lovejoy fears, by turning them into corporate jargon.

Google and other tech companies have already been accused of “ethics washing,” the term used to describe the weaponization of ethics as a defense against regulation. By employing a group of high-status specialists, tech can claim to have AI ethics under control and thereby deflect questions from activists and policymakers about bias and other risks of AI.

That skepticism is often warranted, but it introduces an alternative risk. If the pendulum swings too far the other way, good ethical practices and initiatives may suffer from “ethics bashing,” with the result being even less discussion of ethics within tech.

Neither of these extremes is productive. Elettra Bietti, a researcher at Harvard Law School, is an outspoken critic of both the ethics washing and ethics bashing camps. She argues instead that individuals need to see ethics as a mode of inquiry that helps people evaluate competing technology choices, whether they be policy strategies or design choices. Ethics should be measured by how it enables participation because ethics, in practice, often involves redefining boundaries. “It’s important to realize that ethics is something that we all do, all the time,” she says. “And every action can be embedded within a broader framework of justice.”

What this all means for AI ethicists and ethics boards is that they should pay careful attention to how their actions affect business decisions at key checkpoints. For instance, how is the ethics group constrained by the existing strategy? If an ethics board concluded that the only way to deliver an “ethical AI” was to abandon the entire product line and start again, would its members be able to collectively voice this recommendation? Or, if an ethics board made a decision that improved a product in a tiny way, which would be larger: the positive impact on the users of the product or the positive impact on the reputation of the company, by virtue of the board’s presence?

Jacob Metcalf, a researcher at Data & Society, a technology think tank, describes ethics as “the vessel which we use to hold our values.” This is a useful idea because it allows ethics to be a process. Maria Axente, AI ethics specialist at PwC, is also constantly challenged with measuring ethics: separating the substantive choices that need to be made in AI design from the process that is required to support them. “How do we distill thinking into action, substance into structure that people can practically implement?” Axente asks. A perennial challenge in AI ethics is moving beyond people’s personal intuitions to discussing real-life problems that need solving.

Lovejoy argues that ethics needs to be seen not as some sort of humane or philosophical add-on, “but as ‘just good design’ that works when it’s been validated in the real world.” What’s needed, in this view, aren’t AI ethicists so much as AI designers trained to incorporate ethics in their process. But, he hastens to add, AI design is a new and specialist practice that doesn’t necessarily fit hand-in-glove with a traditional software development process. It’s vital to prioritize developing techniques and practices that extend traditional roles, whether engineer, program manager, designer, or researcher.

Ultimately, the role of the ethical AI designer is to help people work through the complex, sometimes ambiguous, decisions that are required to design, build, test, and manage an ever-changing intelligent product.

The checklist

AI ethics isn’t all high-minded discussion of existential conflicts, but abstract principles can be difficult to put into practice. Principles can mask the complexity of ethical decisions, which involve different assumptions, interpretations, personal experiences and biases.

This makes it extraordinarily difficult for people to apply principles consistently in the countless small decisions made every day in the software development process. Checklists can offer a middle ground, providing a scaffold between high-level principles and granular technical tools.

Researchers have found that the most important role of a checklist in AI ethics is to prompt critical conversations. AI ethics efforts are often the result of ad hoc processes driven by passionate individual advocates. In companies where the priority is fast-paced development and deployment, there can be a significant social cost to slowing things down to talk about fairness.

It takes time and effort to involve more people in decisions and to wrestle with competing or ambiguous topics. An AI ethics checklist can act as a “value lever” and make it acceptable to reflect on risks, raise red flags, add extra work and escalate decisions.

Do ethicists matter?

How are AI ethicists in technology actually doing their jobs? And does their presence make a difference?

Data & Society researchers recently investigated this by interviewing “ethics owners” (people employed to “do ethics” for big tech) from various Silicon Valley tech companies for their views on the practical progress of ethics in Silicon Valley.

The researchers found a central dilemma: ethics owners have to try to resolve complex social decisions, which are usually framed (and come under challenge) within the logic of Silicon Valley. One of the most prevalent Silicon Valley mindsets is technology solutionism: the idea that the solution to a “bad” technology outcome is more technology. Because AI is used in social applications, this philosophy is being applied to more and more social problems.

What makes this a unique challenge for ethicists is that these companies can get really big before they are mature. “Ethics owners” become “ethics coordinators,” tasked with making sure ethics doesn’t get in the way of the engineers’ work.

Engineers who make the frontline decisions can position themselves as being able to use their personal judgment. This is one of the primary ways moral judgment gets instantiated inside AI. If engineers are the ones seen to be best positioned to evaluate a hypothetical harm, they also have the power to dismiss the concern as not realistic, not relevant, or not worth bothering about given the probabilities. If engineers lack knowledge of the long-term or broader societal consequences, they can lack a sense of accountability for what they do as individuals.

For the ethicists, there is often a disconnect between the technologist’s mind and the moral significance of their work.

The challenge for AI ethicists is to embed values of dignity, agency, and human flourishing in AI design so that these values can be at work up and down the entire development stack and throughout the design and development process. This will mean not only dealing with biased training data but dealing with how the AI ultimately behaves “in the wild.”

The challenge for companies, according to Bietti, is giving their employees space to “think slower, to think with more depth, and more systematically.” Developers need to shield their “thinking from pragmatic pressures,” let intuitions change and enable people to make sense of other problems. The traditional Silicon Valley approach of “move fast and break things” will not suffice.