The little-known AI firms whose facial recognition tech led to a false arrest

Robert Williams went to jail because a computer—and a pair of Detroit police officers—made a mistake.

The officers relied on facial recognition software to identify Williams as a suspect in a 15-month-old shoplifting case. They were wrong—making Williams perhaps the first known case of a wrongful arrest resulting from faulty facial recognition.

Earlier this month, IBM, Microsoft, and Amazon swore off or paused their sale of facial recognition tools to US police and called on Congress to regulate the technology. But the software that misidentified Williams wasn’t from a big tech company with a household name. It was sold by police contractor DataWorks Plus, and powered by algorithms from Japanese tech firm NEC and Colorado-based Rank One Computing.

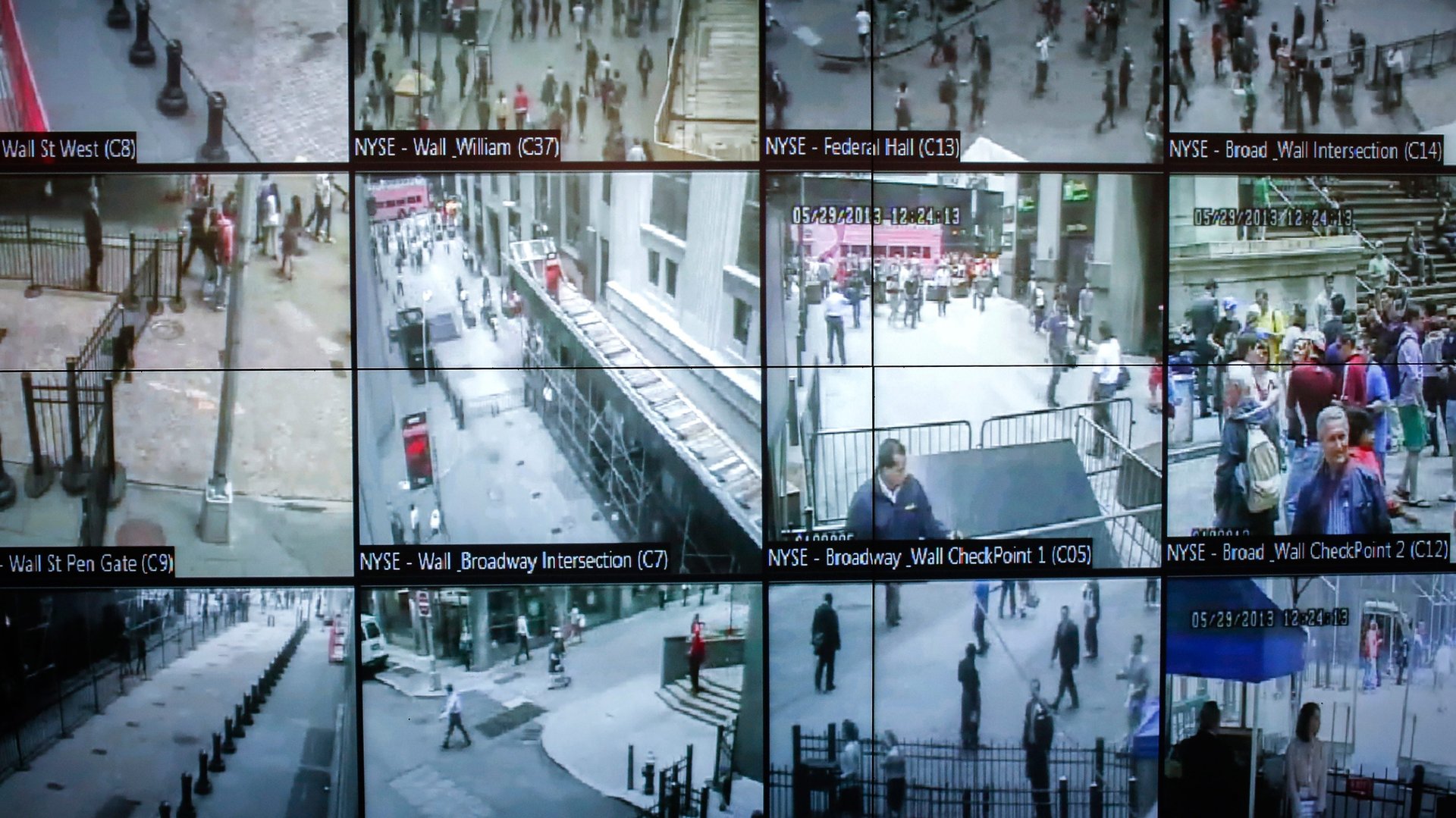

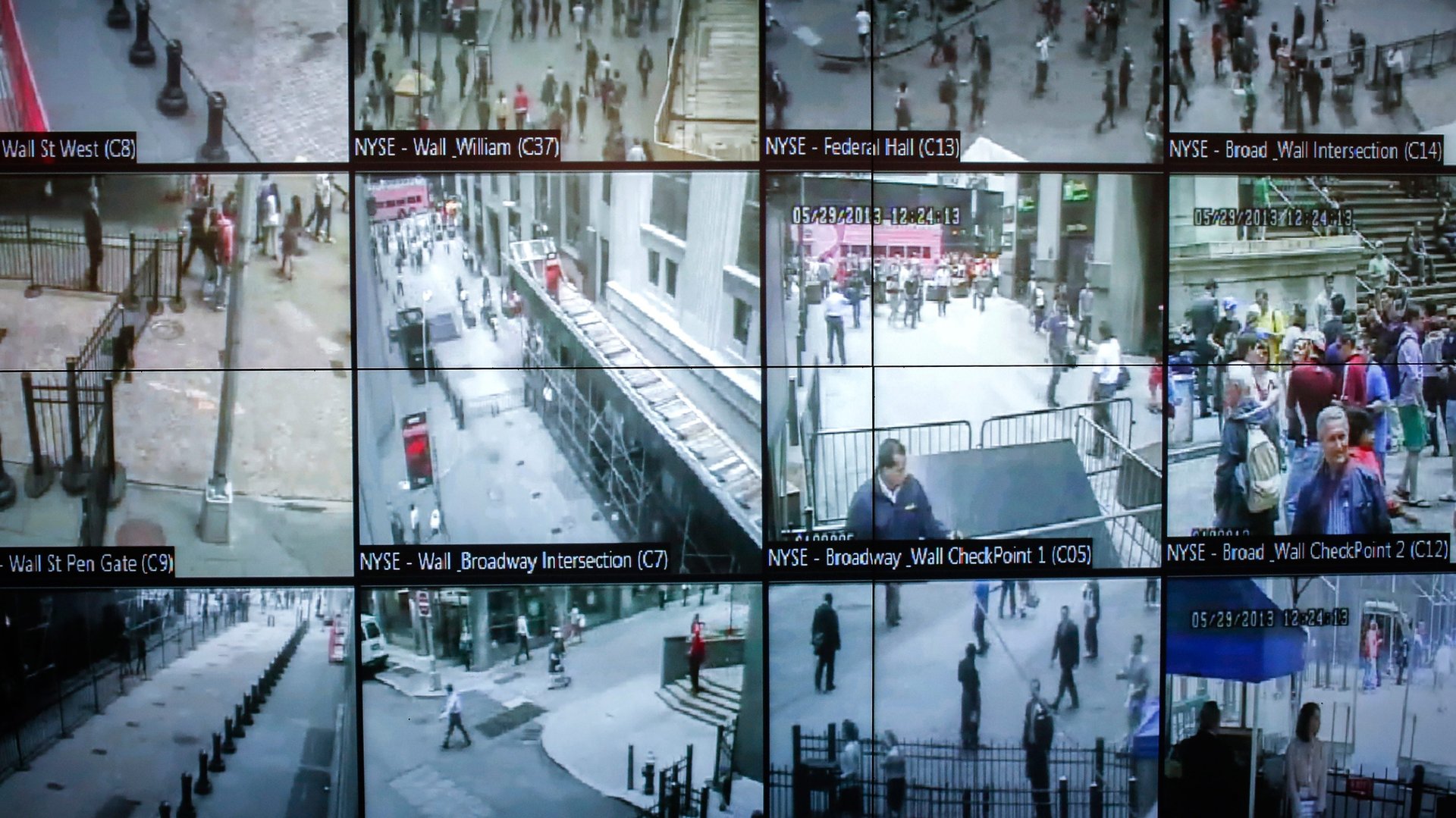

DataWorks Plus is one of the biggest resellers of facial recognition technology to US police departments. It has facial recognition contracts with police in Detroit, Chicago, New York City, Santa Barbara, South Carolina, Pennsylvania, and Maryland. Although it doesn’t build the algorithms itself, the company sells access to tools built by other low-profile firms like NEC, Rank One Computing, and Cognitech.

“The landscape is a little hard to get a very clear sense for because these companies are rebranded and bought and sold very, very frequently,” said Clare Garvie, who researches police use of facial recognition at Georgetown Law School’s Center on Privacy & Technology. In 2016, she published a report estimating that at least one in four US state and local law enforcement agencies have access to facial recognition.

The tools they build are similarly opaque. Most of the information that is publicly available about their accuracy—and how their performance varies across demographic groups—comes from the US National Institute of Standards and Technology, which asks facial recognition firms to submit their models to be tested and ranked against one another. NEC and Rank One Computing both participated in 2018, when NIST found that the algorithms it tested were collectively less accurate for women and people of color.

But participation is voluntary, and the results of the NIST test aren’t a perfect indicator of an algorithm’s performance. “We don’t know which version [of the algorithm] is being deployed [by police] at any given time and whether that’s the one that was tested by NIST,” said Garvie. “It may actually be a different tool even though the provider is the same.”

The rules governing police use of facial recognition are also difficult to parse, and open to individual officers’ interpretation. In Williams’ case, officers circumvented a policy designed to prevent an arrest like his from happening.

Detroit police policy explicitly forbids officers from making arrests based solely on facial recognition matches. But after the DataWorks Plus program identified Williams, the officers put his picture in a photo array and presented it to a store security guard, who did not witness the theft and had only seen the same grainy surveillance footage the police had. The guard picked Williams out of the lineup. That was enough for an arrest warrant.

“Many of these facial recognition systems are not audited, meaning that nobody’s checking to see if they’re being used in compliance with any existing policy,” said Garvie. “If nobody bothers to check whether or not it’s followed, it’s as if the policy doesn’t exist.” There’s other evidence to suggest the Detroit Police Department doesn’t always follow its own policies: Department statistics show that it used facial recognition to investigate crimes like arson and threats against police, even after it laid out a rule limiting the technology’s use to violent crimes and home invasions.

Aside from these internal department policies, there are almost no laws governing police use of facial recognition. Police can legally run searches on low-quality photos, as in Williams’ case, as well as forensic sketches and even celebrity lookalikes. Garvie said legislators have heard the calls for regulation and that there has been “a lot of movement” both from lawmakers and lobbyists to craft federal legislation. The industry has thrown its support behind uniform national regulation, which is easier to comply with than mismatched local rules.

DataWorks Plus and NEC didn’t respond to requests for comment, but Rank One CEO Brendan Klare wrote in an email that the Detroit Police Department’s use of his facial recognition algorithm in the Williams case “goes against established industry standard best practices, and Rank One unreservedly opposes any misuse of face recognition technology including where a candidate match serves as the source of probable cause for an arrest.” He said he would “continue to work with legislative groups that seek regulation from the US Congress on the use of face recognition by law enforcement.”

“I suspect that anything we see at the federal level is likely to be less stringent than state and local rules,” Garvie said, referring to outright bans enacted in cities like San Francisco and Boston. “Anything short of a ban is going to be more favorable to companies’ ability to continue selling this technology.”