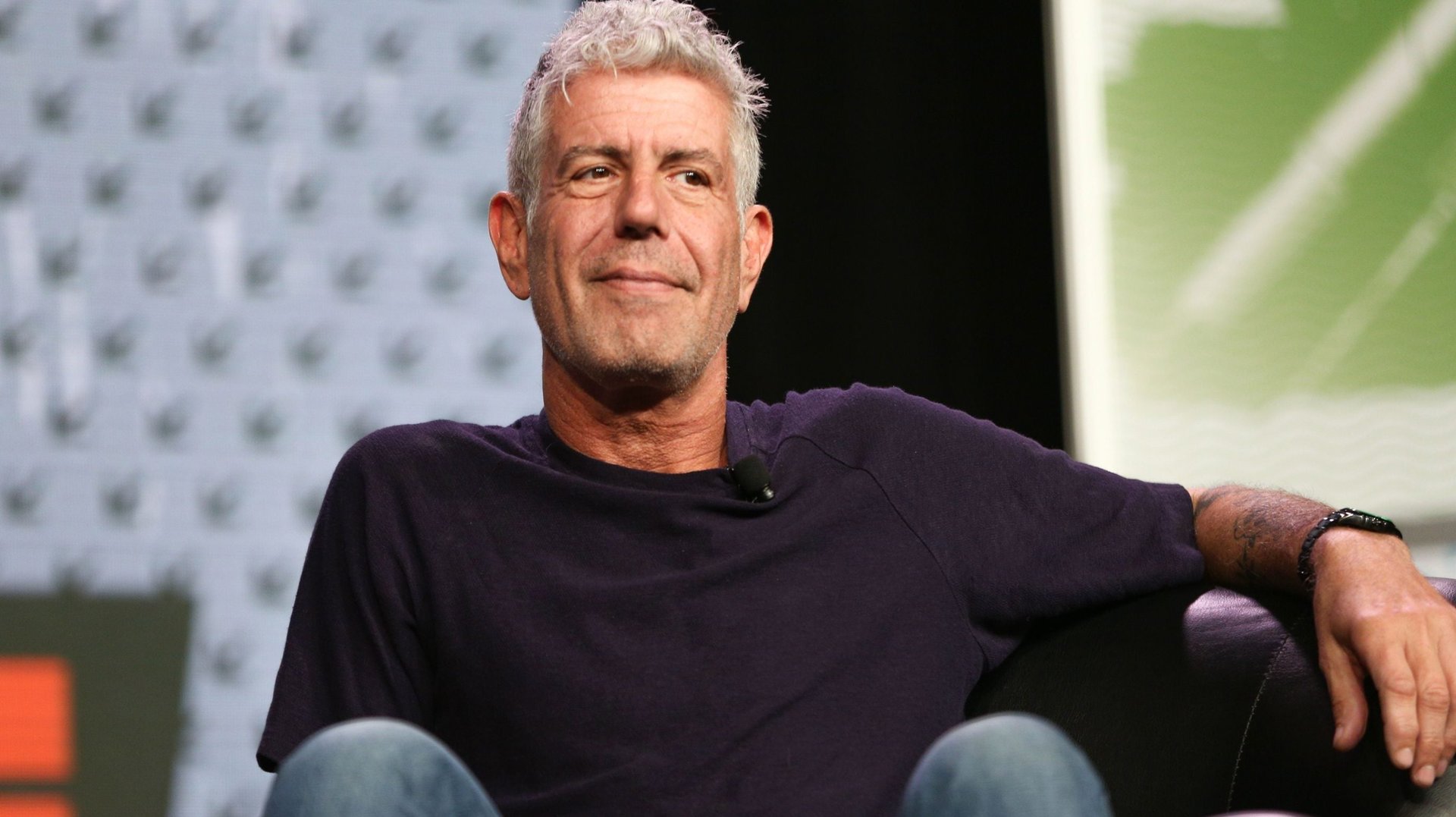

The Anthony Bourdain audio deepfake is forcing a debate about AI in journalism

By now, using machine learning to simulate a dead person on screen is an accepted Hollywood technique. Synthetic media, known widely as “deepfake” technology (a portmanteau of “deep learning” and “fake”), has famously been used on Carrie Fisher in a Star Wars movie and Heath Ledger in “A Knight’s Tale.” In 2019, footage of comedian Jimmy Fallon eerily transformed into Donald Trump demonstrated how advanced the technology has become.

But no one was laughing when it was revealed that deepfake technology was used to simulate Anthony Bourdain’s voice in the new documentary Roadrunner: A Film About Anthony Bourdain. In an interview with GQ, director Morgan Neville revealed that he commissioned an AI model of the chef and TV personality’s voice and considered using it to narrate the entire film. Neville told the New Yorker that, in the final cut, he used it for three lines in the two-hour production. Among them is a poignant line from an email to artist David Choe: “My life is sort of shit now. You are successful, and I am successful, and I’m wondering: Are you happy?”

Neville uses the AI-generated clip as an artistic touch to heighten Choe’s pathos as he recounts the last email he received before Bourdain took his life in 2018. The audio is convincing, albeit a tad flatter than the rest of Bourdain’s narration.

A brewing controversy over manufacturing Bourdain’s voice

Neville, a former journalist, didn’t see the problem with mixing AI-generated sound bites with actual clips of Bourdain’s voice. “We can have a documentary ethics panel about it later,” he joked in the New Yorker interview.

Neville added that he obtained consent from Bourdain’s estate. “I checked, you know, with his widow and his literary executor, just to make sure people were cool with that. And they were like, ‘Tony would have been cool with that.’ I wasn’t putting words into his mouth. I was just trying to make them come alive,” he explained to GQ. Bourdain’s ex-wife, Ottavia Busia-Bourdain, who appears extensively in the documentary, later disputed that she ever gave permission for an audio surrogate.

Meredith Broussard, a New York University journalism professor and author of the book Artificial Unintelligence: How Computers Misunderstand the World, says it’s understandable that many find Bourdain’s audio clone deeply unsettling. “I’m not surprised that his widow doesn’t feel like she gave permission for this,” she says. “It’s such new technology that nobody really expects that it’s going to be used this way.”

Using AI in journalism poses the greater ethical dilemma, Broussard says. “People are more forgiving when we use this kind of technology in fiction as opposed to documentaries,” she explains. “In a documentary, people feel like it’s real and so they feel duped.”

Simulated media and AI in journalism

Roadrunner, which is co-produced by CNN, isn’t the first instance of a news organization relying on AI. The Associated Press, for instance, has been using AI to auto-generate articles about corporate quarterly earnings since 2015. Every auto-generated AP article carries a note: “This story was generated by Automated Insights,” referring to the machine learning technology it is using.

Clearly disclosing instances when AI is used is imperative, Broussard says. The fact that Neville discussed it after the fact is noteworthy, although it’s debatable whether that will satisfy his critics. “It’s interesting that documentarians are going to have to think about the ethics of deepfakes,” Broussard says. “They’ve always thought about the ethics of storytelling, just like journalists have, but here’s a whole new realm that we’re going to have to develop ethical norms for.”

The controversy reawakens a longstanding debate about how journalists quote their subjects. Celebrated writer Gay Talese, for instance, reconstructs quotes as he remembers them, believing that the tape recorder is “the death knell of literary reportage.” In her book The Journalist and the Murderer, the New Yorker’s Janet Malcolm underscored the problem of combining fragments of multiple interviews into single statements, as reporter Joe McGinniss did in covering the murder trial of former doctor Jeffrey MacDonald. “The journalist cannot create his subjects any more than the analyst can create his patients,” she wrote. The late writer herself was embroiled in a decade-long legal battle over five quotes she used in a 1983 profile about the Sigmund Freud archives. The libel case was dismissed in Malcolm’s favor.

The ethics of deepfakes

Broussard is unsure where she stands on Neville’s use of deepfake technology. “The thing about ethics is that it’s about context,” she explains. “Three lines in a documentary movie: it’s not the end of the world, but it’s important as a precedent. And it’s important to have a conversation about whether we think this is an appropriate thing to do.”

Ultimately, Broussard says the Roadrunner controversy presents another argument for regulating the use of AI overall. “There is an emerging conversation in the machine learning field about the need for ethics and machine learning,” she says. “I am grateful that this conversation has begun because it is long overdue.”