Google is developing a new superintelligent AI, but ethical questions remain

Artificial intelligence is already capable of doing some incredibly useful things, like predicting flooding, diagnosing disease, and instantly translating languages. Advances in neural networks coupled with enormous computational power have allowed tech companies to create incrementally smarter AI models over the last decade.

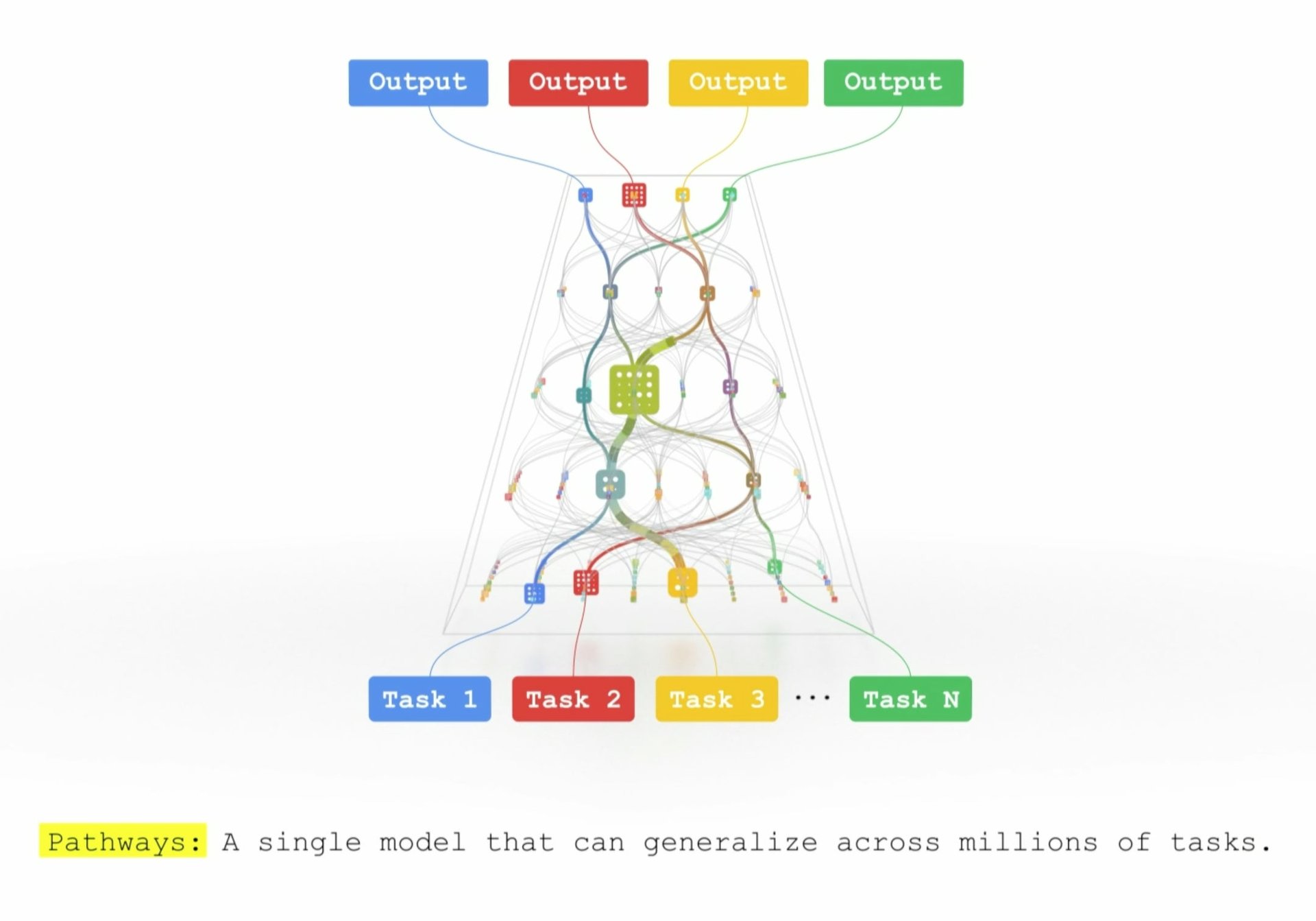

Jeff Dean, Google’s AI chief, thinks we’re just scratching the surface. Speaking at the TED conference in Monterey, California, this week, he revealed that Google is developing a nimble, multi-purpose AI that can perform millions of tasks. Called Pathways, Google’s solution seeks to centralize disparate AI into one powerful, all-knowing algorithm.

Google Pathways: a multi-purpose AI

“We’re pretty excited about this,” Dean said. “These kinds of general purpose intelligence systems that have a deeper understanding of the world will really enable us to tackle some of the greatest problems that humanity faces.”

For example, he said, “[w]e’ll be able to engineer better medicines by infusing these models with knowledge of chemistry and physics; we’ll be able to advance educational systems by providing more individualized tutoring; we’ll be able to tackle really complicated issues like climate change, perhaps engineering of clean energy solutions.”

Dean said Google Pathways could also dramatically shorten the machine-learning development process. “The grand challenge of AI is: How do you generalize from a set of tasks you already know how to do to new tasks, as easily and effortlessly as possible? If you can build these systems that already are infused with how to do thousands or millions of tasks, then you can effectively teach them to do a new thing with relatively few examples,” he said, describing a future in which a computer could grasp a new concept after seeing just five examples of it.

Reckoning with the ethics of AI

Dean’s optimism is tempered by growing concerns about who designs and controls the world’s AI systems.

Google’s machine learning initiative has come under scrutiny lately with the departure of Timnit Gebru and Margaret Mitchell, former co-leads of its ethical AI team. Gebru claimed she was forced out of Google because of a controversial paper she co-wrote about the perils of building AI language models trained on vast amounts of text. As Karen Hao of MIT Technology Review explained, because Google’s AI devours text from the internet, “there’s a risk that racist, sexist, and otherwise abusive language ends up in the training data.”

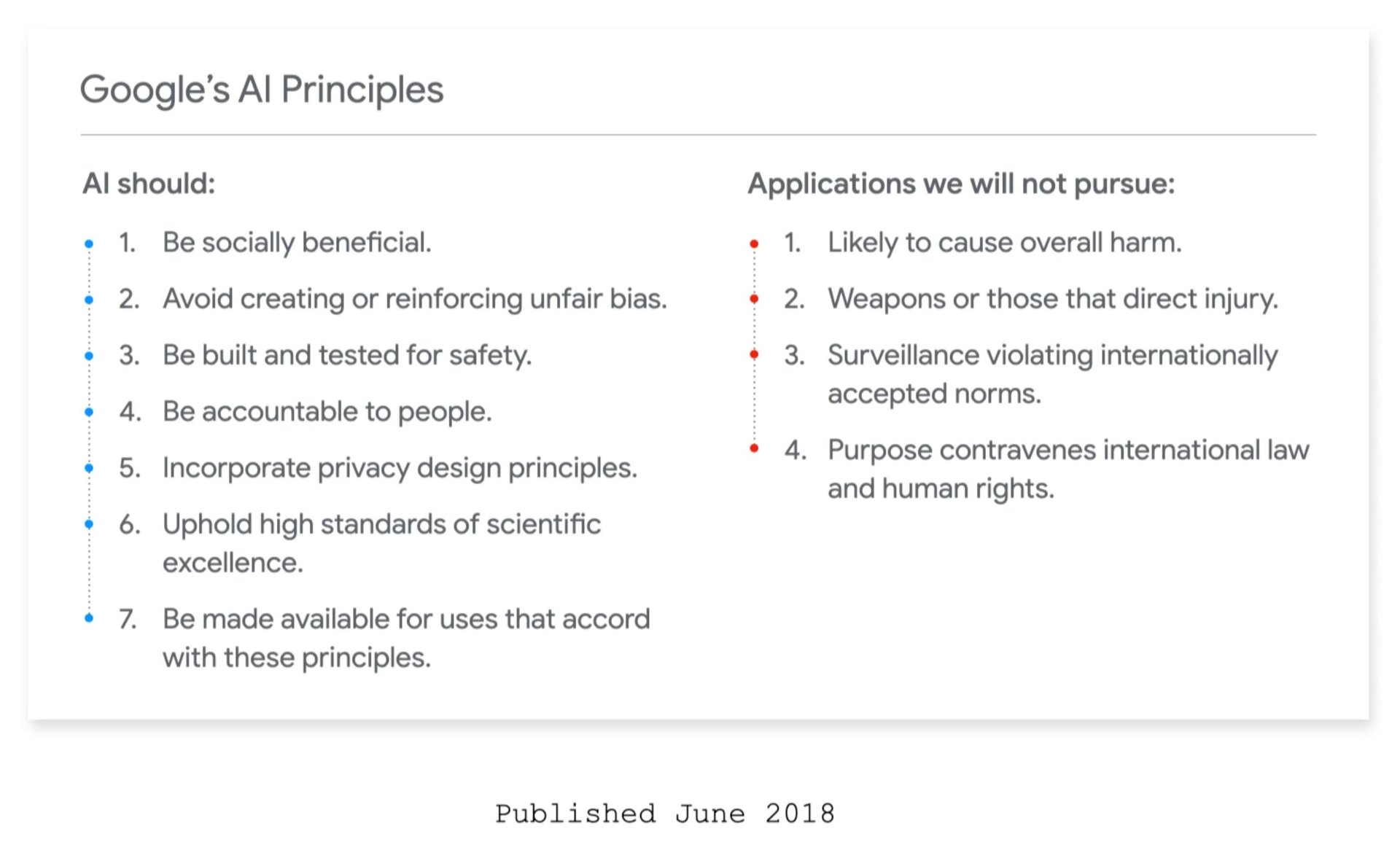

Dean touched on the need for responsible data collection at the end of his talk but didn’t delve into the specifics of the ethical quagmires that have dogged his field and his employer. Instead, he showed a slide with Google’s AI principles, which hint at these concerns in broad strokes. “Data concerns are only one aspect of responsibility. We have a lot of work to do here,” he said.

Google’s AI Principles from Dean’s presentation.

Responsibly reviewing AI inputs—and outcomes

Dean’s appearance at TED comes at a time when critics, including current Google employees, are calling for greater scrutiny of big tech’s control over the world’s AI systems. Among those critics was one who spoke right after Dean at TED: coder Xiaowei R. Wang, creative director of the indie tech magazine Logic, who argued for community-led innovation. “Within AI there is only a case for optimism if people and communities can make the case themselves, instead of people like Jeff Dean and companies like Google making the case for them, while shutting down the communities [that] AI for Good is supposed to help,” she said. (AI for Good is a movement that seeks to orient machine learning toward solving the world’s most pressing social equity problems.)

TED curator Chris Anderson and Greg Brockman, co-founder of the AI research lab OpenAI, also wrestled with the unintended consequences of powerful machine learning systems at the end of the conference. Brockman described a scenario in which humans serve as moral guides to AI. “We can teach the system the values we want, as we would a child,” he said. “It’s an important but subtle point. I think you do need the system to learn a model of the world. If you’re teaching a child, they need to learn what good and bad is.”

There is also room for some gatekeeping once the machines have been taught, Anderson suggested. “One of the key issues to keeping this thing on track is to very carefully pick the people who look at the output of these unsupervised learning systems,” he said.

Already, groups of researchers are warning that a superintelligent AI, as Dean described it, will be hard to police. Consider a recent study in the Journal of Artificial Intelligence Research titled “Superintelligence Cannot be Contained.” As study co-author Manuel Cebrian of the Max Planck Institute for Human Development has noted: “[T]here are already machines that perform certain important tasks independently without programmers fully understanding how they learned it. The question therefore arises whether this could at some point become uncontrollable and dangerous for humanity.”