Google’s new Lens feature addresses a lingering conundrum of online shopping

Online sales exploded during the pandemic, with American shoppers flocking to the internet to buy everything from groceries to high-end jewelry.

But ecommerce, while good at delivering what consumers already know they want, has never been ideal for sparking the sense of discovery that comes from browsing store shelves. Google Lens multisearch, which launched Thursday, is designed to help address that by moving consumers away from the shopping checklist.

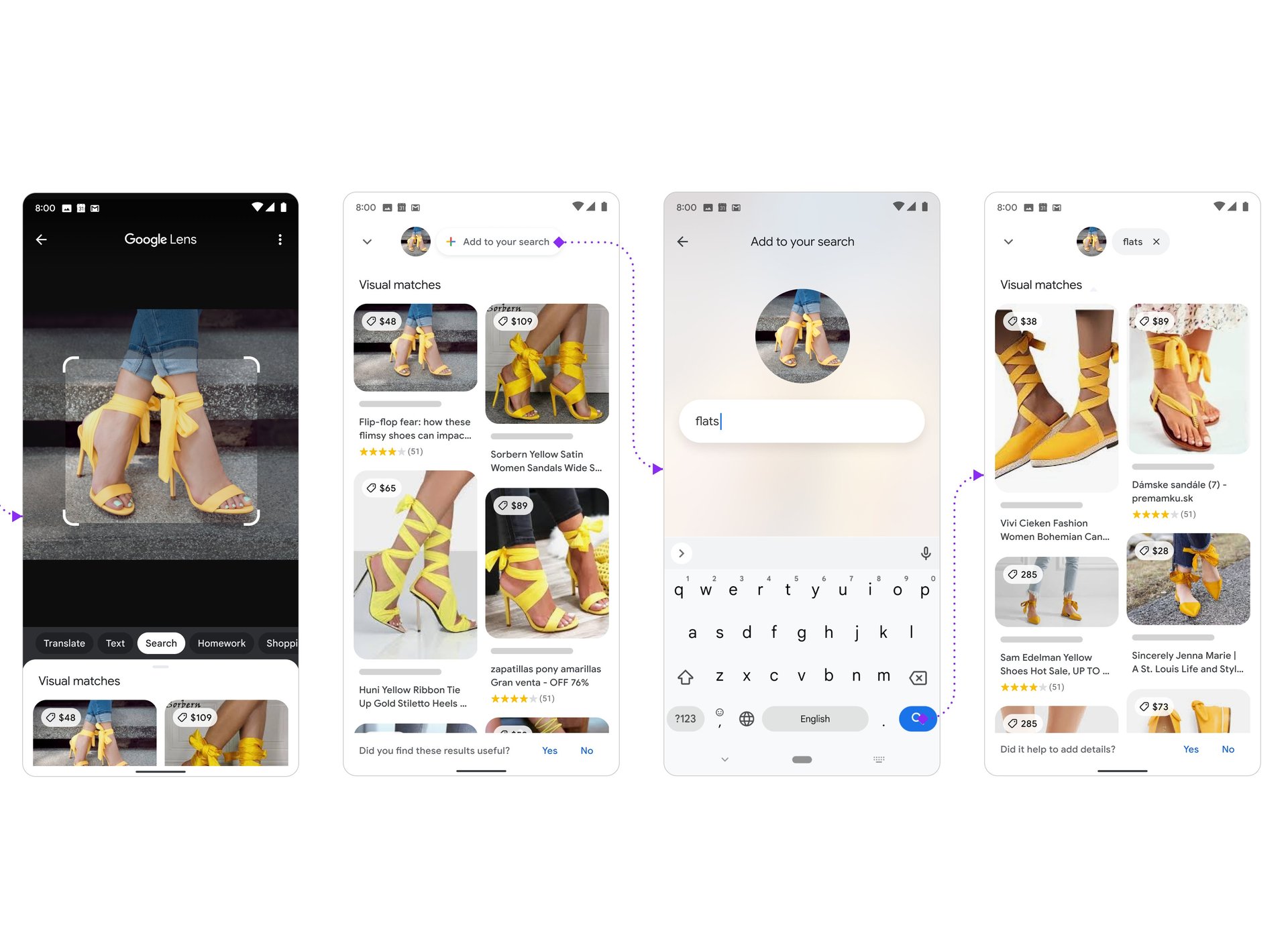

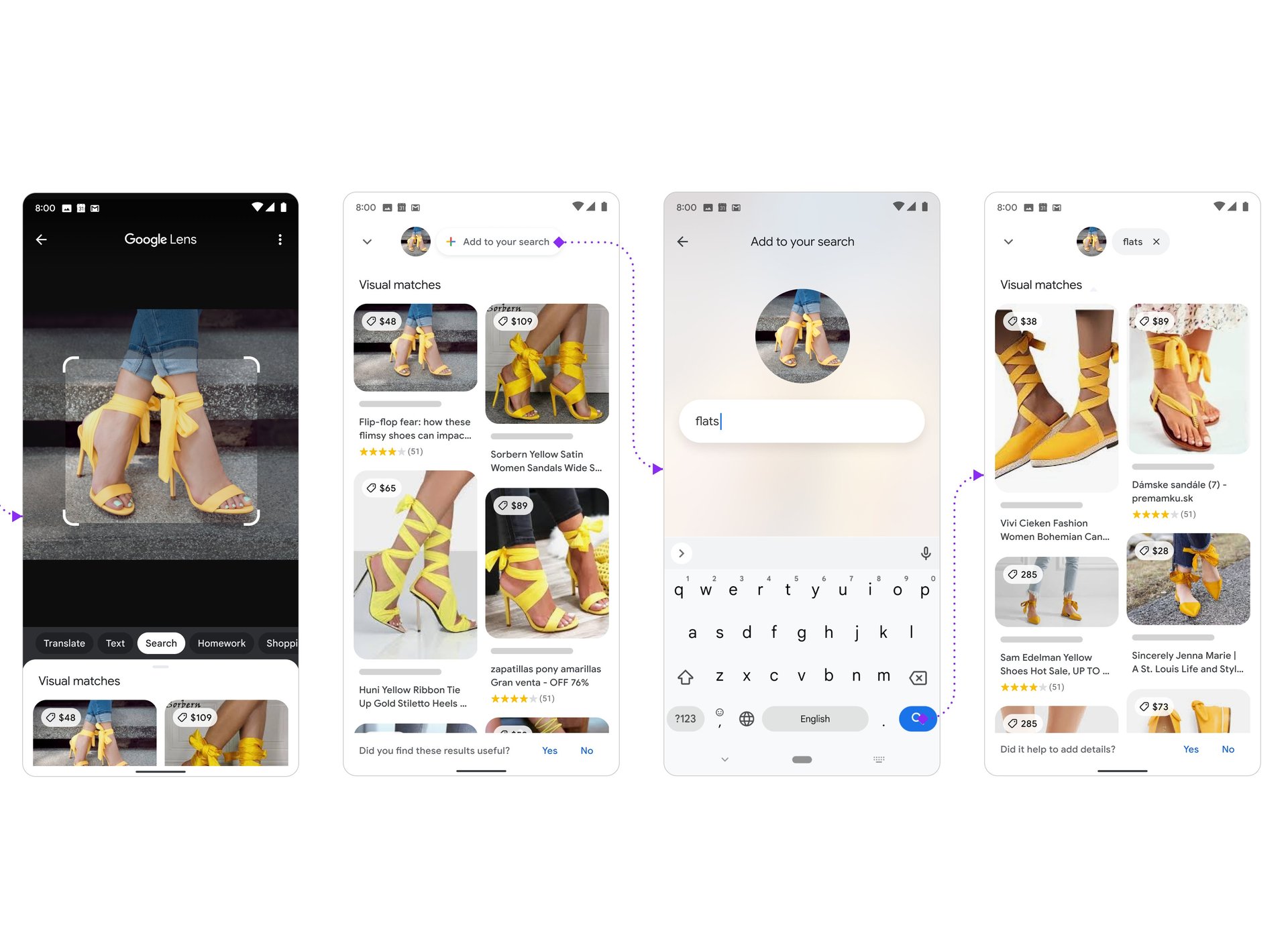

The new technology lets users combine images and text to look for items to buy. Google envisions shoppers using it to search for products based on snapshots of things they spot as they go about their everyday lives. For example, say you spot an orange pattern you love on a woman’s dress but want it on a couch. You can take a picture of her outfit, search it on Google Lens along with the keyword “sofa”, and get results that combine both.

Multisearch not only solves the problem of people not knowing how to describe a product but also captures purchasing intent the moment it forms. The bet is that any place where shoppers have internet access will turn into a market, making impulse buys a more natural part of ecommerce.

Finding exactly what you want

Lens will also make it easier for shoppers to unearth exactly what they want. In the past, if you saw an amazing mustard yellow dress but wanted it in another color, you would have to type in enough detail about the silhouette, the fabric, and other components, and then add on the desired shade. Now you can upload a photo of the yellow dress and add the word “green” to find similar products in the right color.

This gets around the obstacle of people not having the right vocabulary to describe what they want. They may not know that cropped tees paired with blinged-out logos are known as Y2K revival fashion, or that a round hat with a downward-sloping brim is called a bucket hat, both of which are real search trends Google has noticed recently.

Multisearch is the latest feature to be added to Google Lens, which also lets people translate text into different languages, identify plants and animals, and even get help solving math equations. (Google also has a separate function that can identify tunes that users hum into their phones.)

For now, multisearch is available only in the US, but Google says it expects to extend it to other regions.