How making microchips 3D could unleash an age of “cognitive computing”

When it comes to the never-ending struggle to innovate in microchips, Hector Ruiz is a veteran. He spent nearly a decade at AMD, six years of it as CEO, leading the David to Intel’s Goliath in the battle for market share in the chips that powered a generation of PCs. He has just written a not-at-all-subtle book about those experiences, Slingshot: AMD’s Fight to Free an Industry from the Ruthless Grip of Intel, and now he is thinking about his next chapter, one in which he hopes to lead a transformation of our most basic ideas about what a microchip can be.

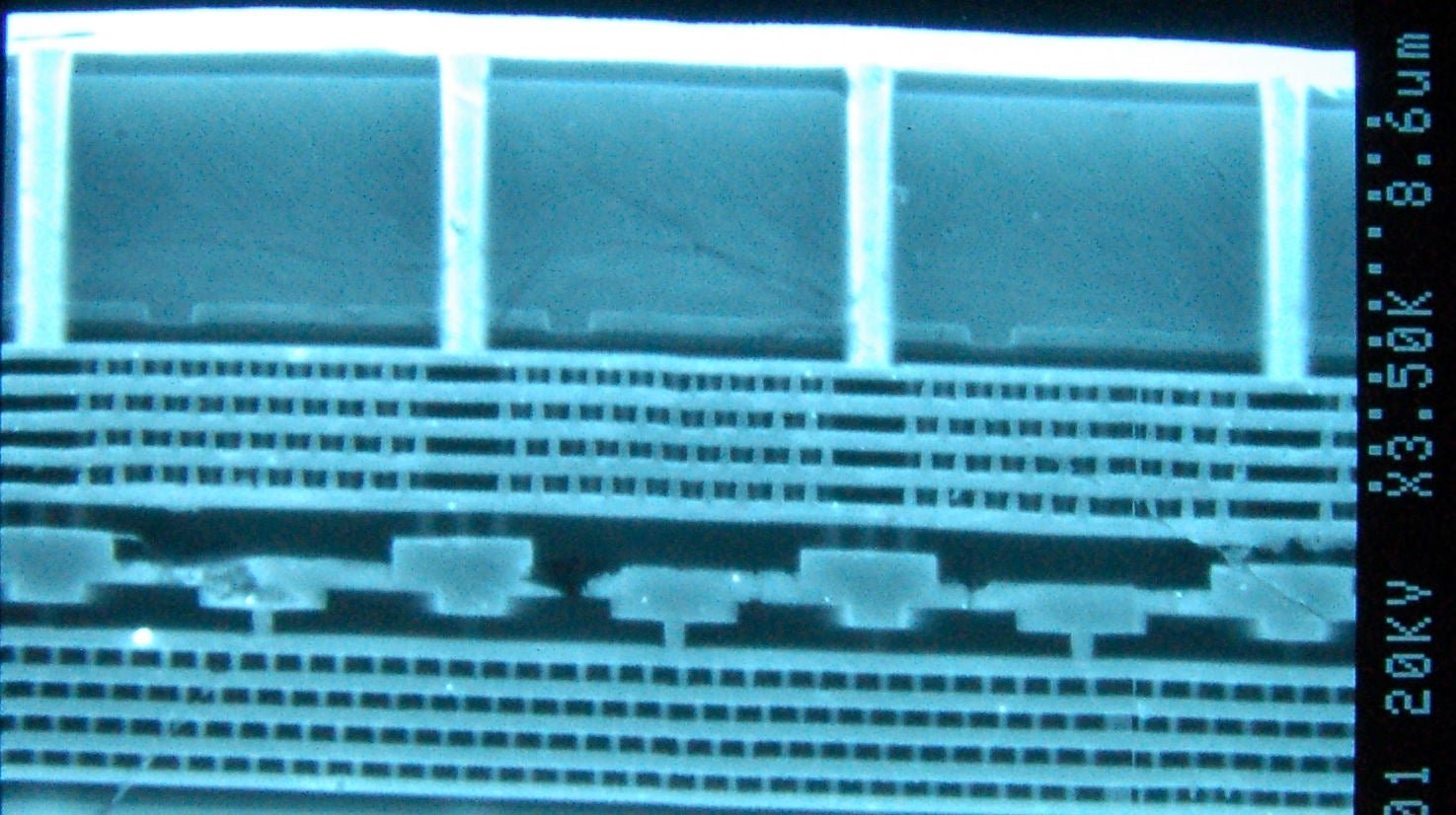

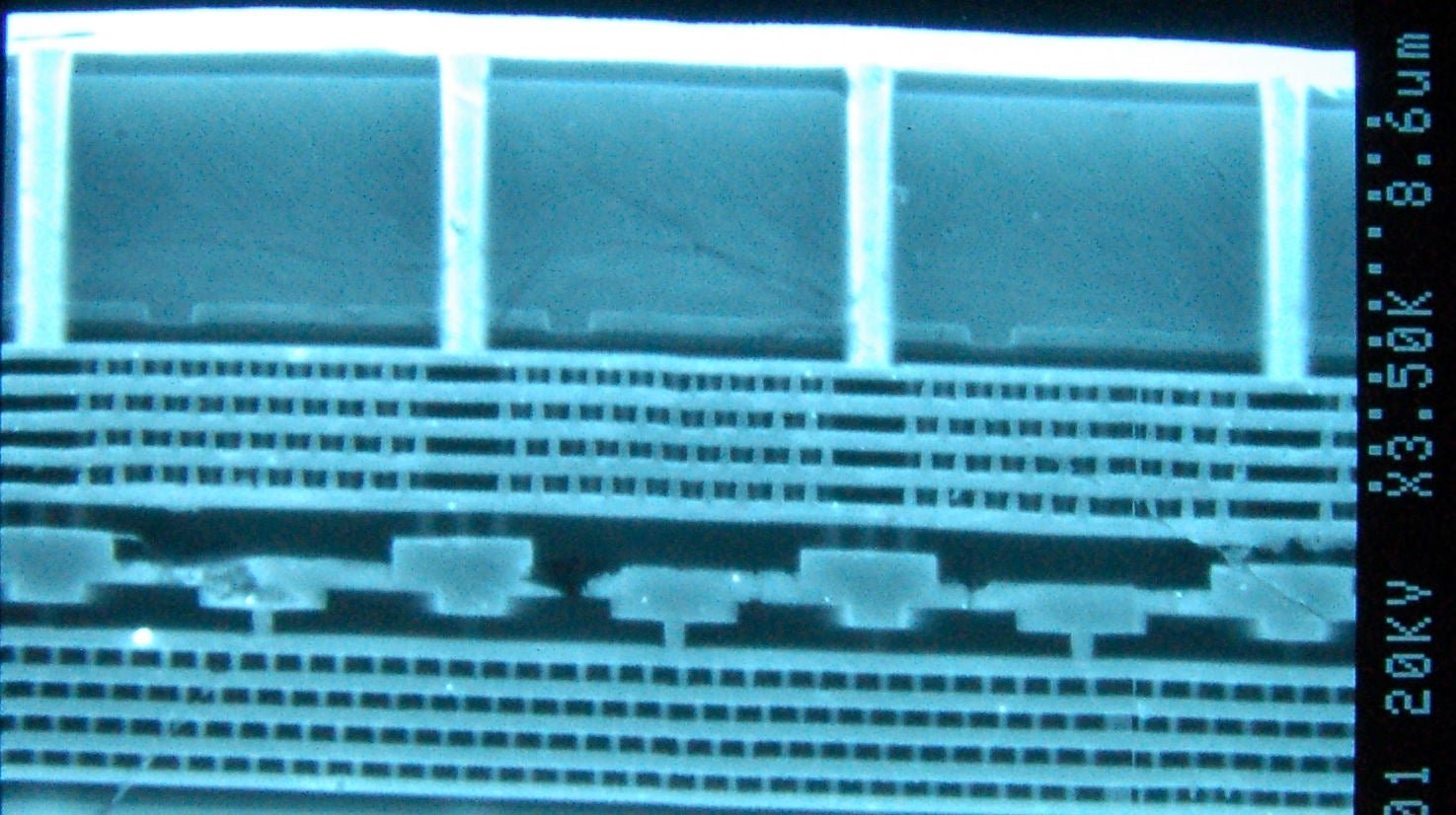

By definition, microchips are flat; hence the name “chip.” But what if circuits could be stacked one on top of another? Signals would then travel shorter distances than they would if the entire circuit were laid out flat. That arrangement would also more closely resemble the human brain, whose neurons are wired together in three dimensions.

For his next act, Ruiz is working on a startup called Advanced Nanotechnology Solutions, which has a deceptively simple goal: make it possible to connect microchips stacked vertically. Do this enough times, and what you have is no longer a chip but a slab, a cube, or some other shape so densely packed with transistors that it could be orders of magnitude more powerful than the chips in today’s devices.

10x performance for 10% of the cost

The technology that could enable this is called 3D interconnect. It has long been promised but has yet to be delivered in a useful form. Thanks to Ruiz’s efforts, that may change in the next few years.

The theoretical limits of the technology are impressive. “If you take a normal PC system, say a Dell or HP laptop,” says Ruiz, “without any other changes to your technology, [building a chip in 3D] would give that system one-tenth the cost, one-tenth the space, one-tenth the power [use] and ten times the performance.”
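Taken at face value, the four figures compound. Write P0, C0, and W0 for the baseline laptop's performance, cost, and power draw; this notation is introduced here for illustration. Assume, as a simplification not stated by Ruiz, that all of his ratios apply to the same system at once. The implied gains in performance per dollar and per watt are then:

```latex
\text{performance per dollar: } \frac{10\,P_0}{0.1\,C_0} = 100\,\frac{P_0}{C_0},
\qquad
\text{performance per watt: } \frac{10\,P_0}{0.1\,W_0} = 100\,\frac{P_0}{W_0}
```

That is roughly a hundredfold improvement on both measures. These are theoretical limits, not measured results.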

The basic reason is that moving data, which travels as electrical signals, from one side of a chip to the other is inefficient: longer wires take more time and more energy to drive. Stacking elements of a chip on top of one another drastically shortens the distances between them. It also creates multiple pathways along which information can move.
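A toy model shows why stacking shortens paths. The model is an assumption made for illustration, not Ruiz's analysis. It takes 4,096 identical circuit blocks, laid out either as a flat 64 x 64 grid or as a 16 x 16 x 16 stack. A signal pays one "hop" for each step to an adjacent block in any direction, including between layers. The sketch below computes the average hop count between two blocks chosen independently at random in each layout. The helper names `mean_axis_distance` and `mean_hops` are hypothetical.

```python
from itertools import product


def mean_axis_distance(side: int) -> float:
    """Exact average |a - b| over all ordered pairs of positions 0..side-1 on one axis."""
    total = sum(abs(a - b) for a, b in product(range(side), repeat=2))
    return total / side**2


def mean_hops(side: int, dims: int) -> float:
    """Average Manhattan (hop-count) distance between two uniformly random blocks
    in a dims-dimensional grid with `side` blocks along each axis.

    Manhattan distance is a sum of per-axis distances, and each axis's coordinates
    are independent and identically distributed, so the mean is dims times the
    one-axis mean.
    """
    return dims * mean_axis_distance(side)


N_BLOCKS = 4096  # hypothetical circuit size, chosen so both layouts fit exactly
assert 64**2 == 16**3 == N_BLOCKS

flat = mean_hops(side=64, dims=2)     # one 64 x 64 layer
stacked = mean_hops(side=16, dims=3)  # sixteen stacked 16 x 16 layers
print(f"flat: {flat:.2f} hops, stacked: {stacked:.2f} hops, "
      f"ratio: {flat / stacked:.2f}")
```

In this toy model, stacking cuts the average path from about 42.7 hops to about 15.9, roughly 2.7 times shorter. The gap widens as the block count grows, because the flat layout's average distance scales with the square root of the block count while the cube's scales with the cube root. Real 3D chips face costs the model ignores, such as removing heat from inner layers and the different electrical behavior of vertical connections.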

“The result will be ‘cognitive computing,’” says Ruiz, meaning microchips powerful enough to mimic the cognitive processes of the brain. He says today’s applications of artificial intelligence, like the voice recognition on modern smartphones, are rudimentary compared with what’s coming. One application would be a kind of “minder” that watches how you live your life and has access to your calendar. It could then automatically alert friends when, for example, it’s clear you’ll be late for a meeting. The same technology could help shape our own behavior and achieve goals that are difficult when left to our limited capacity to plan and remember, from losing weight to learning a new language.

The problem of standards

Achieving that level of performance won’t be easy. It will require basic changes to the way chips are fabricated, and a chip fab is a clean-room factory costing billions of dollars. Just as challenging: Getting manufacturers who are frenemies at best to agree on a common standard for connectivity.

“Think of it as Legos,” says Ruiz. Lego pieces come in many different shapes, but they all connect. A common standard would let chip makers that specialize in memory, CPUs, or chips that handle analog signals mix and match whichever chip parts they like.

The knowledge required to make 3D interconnects work is not widespread, says Ruiz. By his estimate, “about 80%” of it resides in a single complex of research labs at the College of Nanoscale Science and Engineering in Albany, New York. Other boutique companies have been working on 3D chips for some time. Tezzaron, based in Chicago, Illinois, built what it claims is the world’s first prototype of a 3D processor in 2004.

But if 3D chips are so fantastic, why does it take a former AMD executive to cat-herd major microchip manufacturers into building them? The simple reason is that we don’t need them badly enough yet. Ordinary chips are still improving in line with Moore’s Law. That is the observation that, for the past few decades, transistors on a chip have become about twice as dense, and half as expensive per transistor, every 18 months to two years. But the limits imposed by the laws of physics are on the horizon, and they will force manufacturers to start exploring alternatives to the conventional flat chip. Three-dimensional chips, with so much of the basic research already done, are an obvious one.
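Doubling compounds quickly, which is why the end of Moore's Law matters so much. The sketch below uses a hypothetical helper, `density_multiplier`, to compute the growth factor implied by a fixed doubling period. It treats Moore's Law as a clean exponential, which the real industry trend only approximates. It compares the 18-month figure with a two-year period.

```python
def density_multiplier(years: float, doubling_months: float = 18) -> float:
    """Growth factor after `years` if transistor density doubles every
    `doubling_months` months.

    Hypothetical helper: models Moore's Law as an exact exponential,
    which the real trend only approximates.
    """
    doublings = years * 12 / doubling_months
    return 2 ** doublings


# Compare the two commonly quoted doubling periods over one and three decades.
for period in (18, 24):
    print(f"{period}-month doubling: "
          f"10 years -> {density_multiplier(10, period):,.0f}x, "
          f"30 years -> {density_multiplier(30, period):,.0f}x")
```

With an 18-month period, three decades works out to exactly 20 doublings, roughly a millionfold increase. A two-year period gives 15 doublings, about 33,000-fold. That gap shows how sensitive the trend is to its pace.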