We need battery-free wireless computers to power the internet of things. Now we have one.

The Internet of Things (IoT) envisions a technological utopia of objects that link our homes, offices and factories, allowing them to talk to us and each other through embedded computers and telemetry. We’ve been promised a world of a trillion sensors.

But for now we’re stuck with $700 Wi-Fi-enabled juicers.

Powering and updating IoT devices is hard. No one wants to change a billion batteries in wearable electronics, or update devices hanging from bridges (San Francisco’s Golden Gate Bridge has its own sensor network). Problems like these, along with weak security and poor interoperability, have limited the IoT to pedestrian gadgets like light bulbs, wireless speakers and cameras.

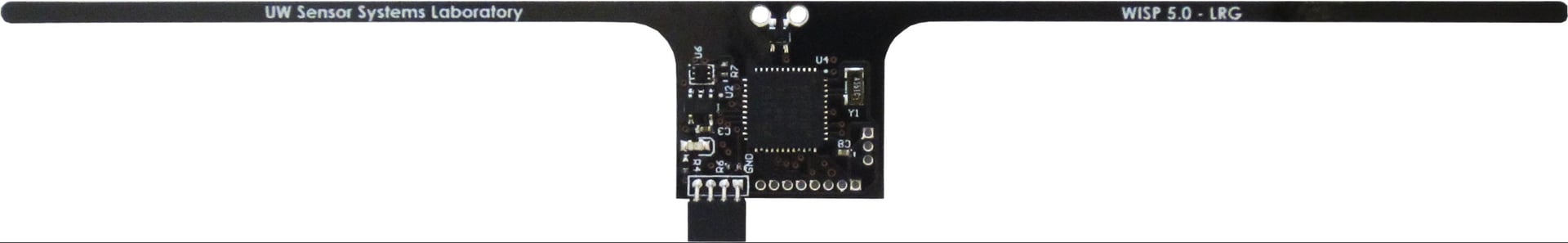

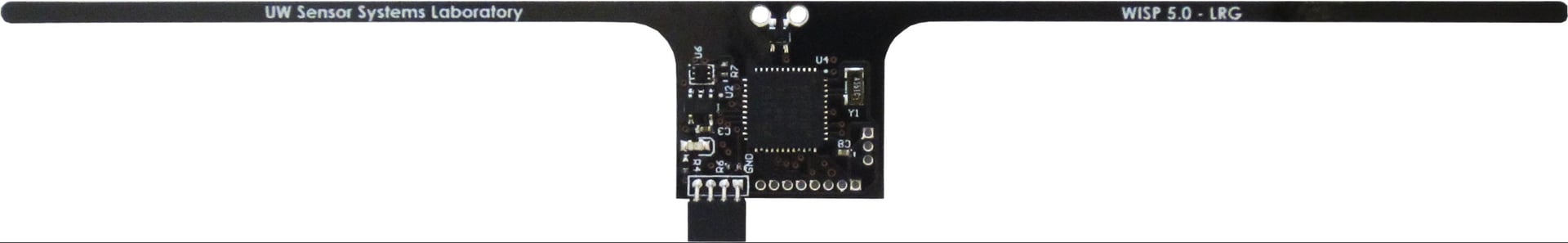

The University of Washington’s Sensor Lab has just knocked down a major barrier with a new battery-free computer that can be powered and reprogrammed wirelessly. The team built on decades of research to construct a quarter-sized computer sensor that uses a modified communication protocol from radio frequency identification (RFID) tags, the kind often seen on labels attached to clothes, electronics and other items. Its software can be updated just as it is on your phone. The sensor powers its processor by harvesting radio signals and turning them into electricity.

Before now, sensors have been single-purpose and immutable. Updating them meant getting at the hardware, sometimes located in inconvenient places like a heart patient’s chest cavity.

Aaron Parks, one of the researchers at the Sensor Lab, worked with a team at Delft University of Technology in the Netherlands to build the new communications protocol for what it is calling WISP. “Wireless reprogramming brings [these devices] into the same computing spectrum as conventional computers by permitting them to run apps in much the same way as a smartphone or wearable,” says Parks. “Now computable RFIDs can be part of the ecosystem of modern (modular, general-purpose) computing.”

Two breakthroughs made this possible.

First, computers’ energy efficiency is increasing exponentially. A corollary to Moore’s law says that the energy needed to transmit information also falls as we shrink the size of chips, because information has to travel a shorter distance. Since 1946, the number of computations possible per unit of energy has roughly doubled every 1.5 years. This enables useful computing to be done with tiny bursts of energy harvested from the vibrations, heat or wireless signals around us.
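To get a feel for what doubling every 1.5 years means, here is a minimal back-of-the-envelope sketch. It assumes a perfectly steady doubling period, which the real trend only approximates, and the function name `efficiency_gain` is made up for illustration.

```python
def efficiency_gain(years: float, doubling_period_years: float = 1.5) -> float:
    """Return the factor by which computations per unit of energy grow
    over `years`, assuming a constant doubling period (an idealization)."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Print the idealized gain over a few illustrative time spans.
    for span in (1.5, 15, 30):
        print(f"{span} years: roughly {efficiency_gain(span):,.0f}x more computation per unit of energy")
```

Under that assumption, 15 years is ten doublings, or about a thousandfold gain, which is why a trickle of harvested energy that was once useless can now run a processor.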

Secondly, new stable memory chips known as ferroelectric RAM (FRAM) can be reliably reprogrammed despite weak, intermittent power sources.

Such developments open up new possibilities for autonomous, reprogrammable computers that stay permanently embedded in objects without onboard power or maintenance. The first applications are likely to be pacemakers and other energy-constrained biomedical implants. Eventually, vast, cheap sensor networks are expected to blanket roads or farms with millions of individual computers embedded in trees or concrete.

Parks and his team presented their work at a communications technology conference in San Francisco this April as open-source designs for hardware and software.