Neuroscientists know how to read our minds

Forget physical strength, invisibility, and the ability to fly: no superhero ability is more tantalizing than the prospect of reading someone else’s thoughts. Thanks to advances in neuroscience and computer coding, mind reading is no longer the province of X-Men’s Professor X alone.

Compared with science fiction, the mind-reading skills of Frank Tong are still pretty basic. The cognitive neuroscientist and professor at Vanderbilt University can determine what object people are looking at from the neural signals in their brains, or what object they’re thinking about in their visual working memory. He has successfully read people’s minds while they were looking at or remembering animals, faces, and paintings.

These are major steps toward understanding our brains and toward programming human-like skills into robots.

“Vision makes up a huge component of how we understand the world,” says Tong, who gave a lecture on visual cognition at the annual meeting of the Association for the Scientific Study of Consciousness, held June 14 to June 18 in Buenos Aires. “If computers could see as well as we can—and we’re just beginning to see the beginnings of that—then they could drive cars, prepare meals. It could do basically any visually guided task that humans can do.”

The potential applications of mind-reading are spooky. Some researchers are working on how to decode whether subjects are telling the truth or lying. “What’s being decoded isn’t the lie, per se, but the mental processes that are likely to accompany lying behavior,” says Tong.

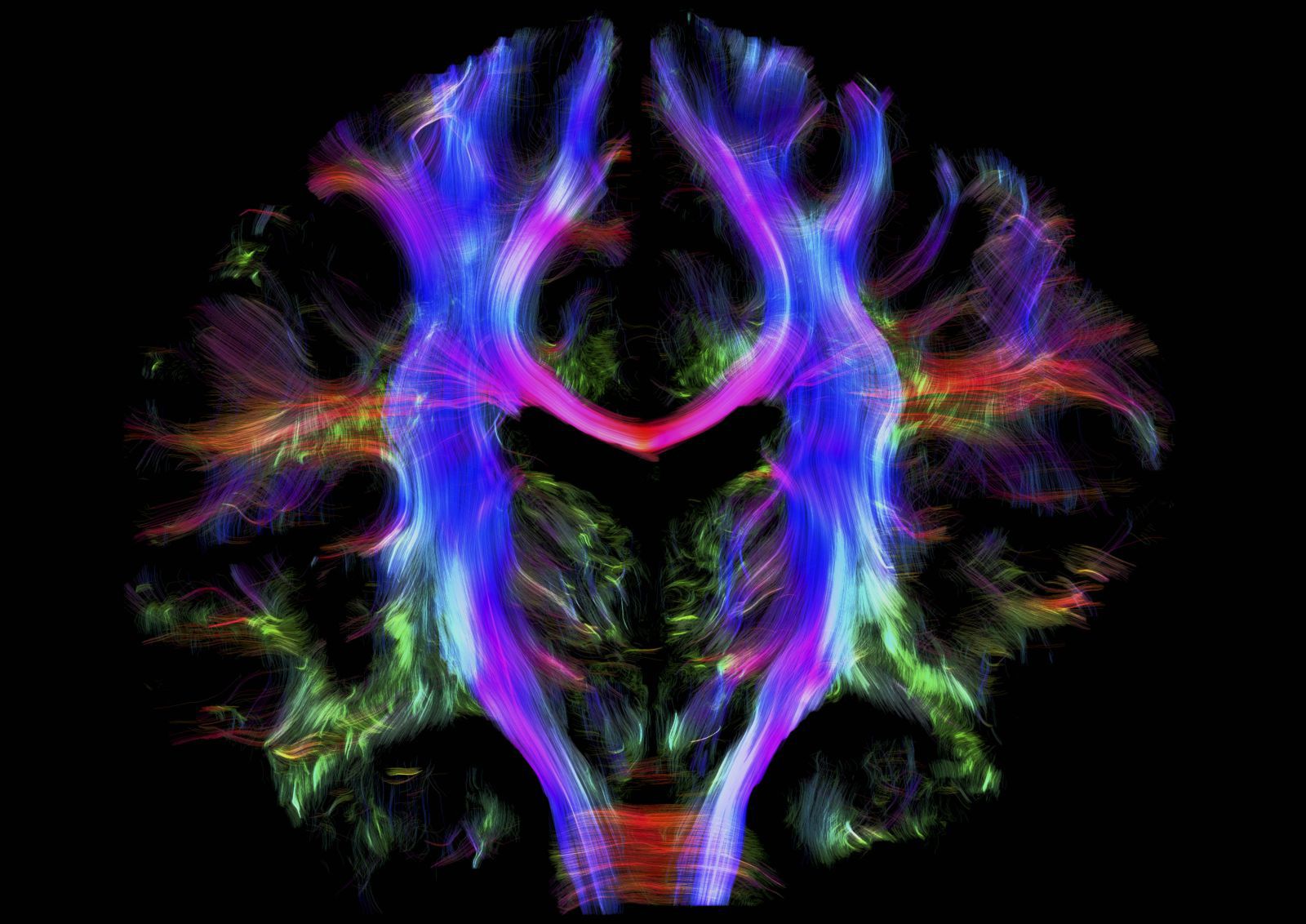

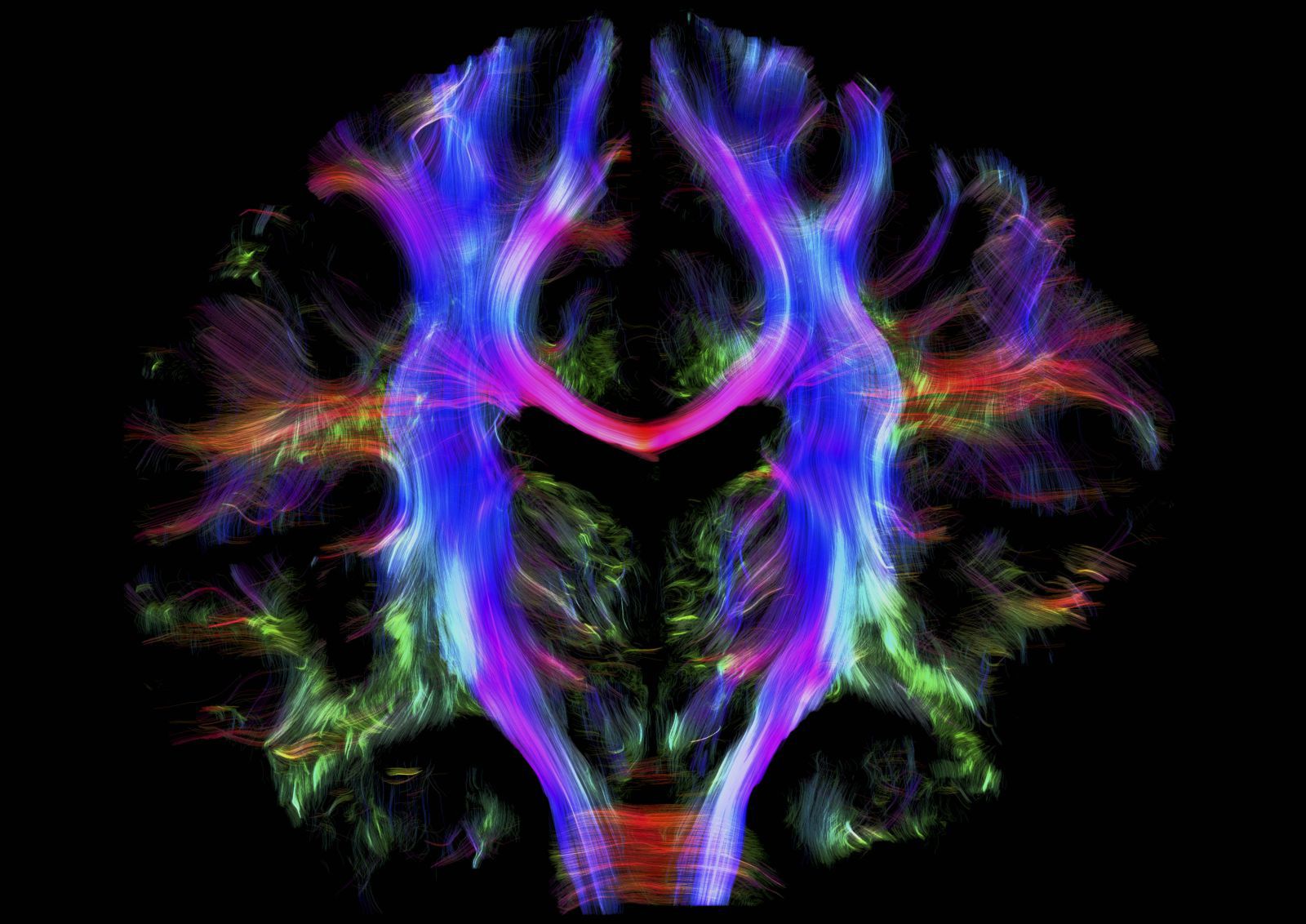

Tong relies on functional magnetic resonance imaging (fMRI) to read the brain. His lab uses statistical or machine-learning algorithms to extract key information from brain data—and it turns out there’s far more information in this data than scientists previously realized.

Starting with the eye, patterns of light are encoded by neurons to represent the information about whatever we’re looking at. When it comes to decoding, Tong looks at patterns of activity across neurons to read out the original visual information that the eye was focused on.

“At each stage of neural processing, the code gets a little different,” Tong says.

We can’t yet create a detailed picture from mind-reading; as Tong says, we can’t yet “scan someone’s brain and reconstruct the face of the perpetrator from their imagination.” But neuroscientists can decode someone’s particular focus. So, if a subject was looking at a plaid shirt, Tong could decode whether they were focused on the horizontal or vertical stripes. Or, in the case of Rubin’s Vase (the ambiguous image that can be seen as either a vase or two faces in profile), whether the subject was looking at the vase or the two faces.
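For readers curious about what this kind of decoding involves, here is a toy sketch in Python. It is not Tong's method and uses no real brain data: the "voxel" patterns are simulated, the numbers (50 voxels, the noise level, the trial counts) are arbitrary assumptions, and the nearest-centroid classifier is simply one of the most basic pattern classifiers, chosen for illustration. The idea it shows is that each focus (horizontal or vertical stripes) nudges the activity pattern slightly, and a classifier trained on labeled examples can then guess the focus on new trials.

```python
import random


def simulate_trial(mean_pattern, noise_sd, rng):
    """One simulated activity pattern: the condition's mean plus Gaussian noise."""
    return [m + rng.gauss(0.0, noise_sd) for m in mean_pattern]


def centroid(trials):
    """Average pattern across trials, computed voxel by voxel."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]


def squared_distance(a, b):
    """Squared Euclidean distance between two patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def decode(pattern, centroids):
    """Return the label whose training centroid is closest to the pattern."""
    return min(centroids, key=lambda label: squared_distance(pattern, centroids[label]))


def run_demo(n_voxels=50, n_train=40, n_test=20, noise_sd=1.0, seed=0):
    """Train on simulated trials, then report accuracy on held-out trials."""
    rng = random.Random(seed)
    labels = ["horizontal", "vertical"]

    # Hypothetical ground truth: each focus weakly biases every voxel.
    mean_patterns = {
        label: [rng.gauss(0.0, 0.5) for _ in range(n_voxels)] for label in labels
    }

    # "Training": average the labeled trials for each focus.
    centroids = {
        label: centroid(
            [simulate_trial(mean_patterns[label], noise_sd, rng) for _ in range(n_train)]
        )
        for label in labels
    }

    # "Testing": decode fresh trials the classifier has never seen.
    correct = 0
    for label in labels:
        for _ in range(n_test):
            trial = simulate_trial(mean_patterns[label], noise_sd, rng)
            if decode(trial, centroids) == label:
                correct += 1
    return correct / (n_test * len(labels))


if __name__ == "__main__":
    print(f"Decoding accuracy on held-out trials: {run_demo():.2f} (chance is 0.50)")
```

No single simulated voxel separates the two conditions reliably; the classifier succeeds by pooling weak differences across all of them, which is the sense in which decoding looks at patterns of activity rather than individual signals.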

Our ability to understand and decode the brain is likely to improve as computer scientists and neuroscientists converge on this topic. Tong says computer-based approaches to artificial vision have built on a neuroscientific understanding of how our own vision works. And neuroscientists may well benefit in turn from comparing human vision with artificial networks.

But ultimately, Tong says he’s less interested in applications of his work and more focused on simply trying to understand the brain. “The goal,” he says, “is to really understand how cognition works.”