Tesla thinks it can prevent the next car crash by seeing the world with radar instead of cameras

Tesla’s CEO Elon Musk announced the latest major upgrade to its Autopilot system on Sunday (Sept 11). It marks a strategic shift from relying on cameras, which “see” the road much like human eyes do, to using radar that can detect far more objects in the environment and, hopefully, avoid collisions. The end result, wrote Tesla in a post on its website, is that the new Autopilot “should almost always” avoid a collision in any situation, detecting objects as far as two cars ahead.

As Tesla has done for years, it is harvesting massive datasets from its cars using the new Autopilot to continuously improve performance and predict the safest reactions in any environment. Wade Trappe, an electrical and computer engineering professor at Rutgers University, told Quartz that the new system should prevent the earlier detection problem. “The key enabler for this advancement is not so much the radar but the clever realization that they can use the collection of observations learned by other vehicles to boost the accuracy of an individual car’s assessment of the environment,” he said. But, he added, it could give rise to other problems, especially if it is vulnerable to hackers.

Tesla’s new system

“Perfect safety is impossible,” Musk said during a Sept. 11 conference call, but he claims this new system would “very likely” have prevented the fatal Florida accident in which Joshua Brown’s Tesla Model S slammed into a tractor trailer in May. The car’s Autopilot may have failed to distinguish between a white truck and the bright sky behind it. Neither the Autopilot nor the driver braked before hitting the tractor trailer at high speed, Tesla said. Brown also may not have been holding the steering wheel, despite Tesla’s requirement that drivers “keep your hands on the steering wheel at all times.”

The Autopilot update is designed to address problems highlighted by the May crash, while being more accurate than other radar systems.

Before now, metallic objects as small as a soda can could trigger false alarms in a radar system, even though radio waves see easily through fog, dust, rain, snow, wood, and plastic. The new Autopilot software can reportedly detect six times as many radar objects as Tesla’s earlier software, and access far more information for each one.

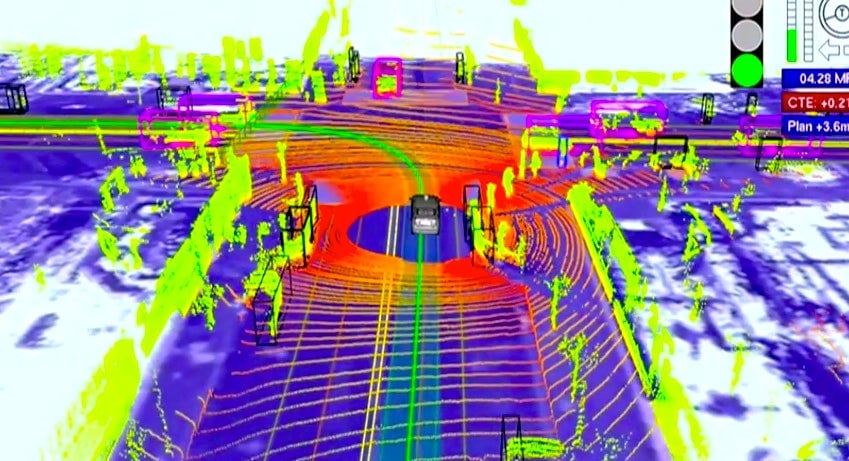

From this, Tesla assembles a vivid 3D “picture” of the world using radar snapshots collected every tenth of a second. Tesla then applies “fleet learning,” data pouring in from thousands of cars, to learn how human drivers react as they pass road signs, bridges and other stationary objects. Tesla’s database can create a location-tagged whitelist that lets cars drive safely past “approved” radar objects, while braking slowly when anomalous objects appear. If the system determines there is a 99.99% chance of a collision, it fully brakes the car.
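The decision logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Tesla’s code: the names `RadarObject`, `location_key` and `choose_action`, the coordinate rounding used to tag locations, and the rule that the full-brake check runs before the whitelist lookup are all hypothetical. Only the 99.99% figure comes from Tesla’s description.

```python
from dataclasses import dataclass

# The 99.99% figure is from Tesla's description; everything else is assumed.
FULL_BRAKE_THRESHOLD = 0.9999


@dataclass(frozen=True)
class RadarObject:
    lat: float
    lon: float
    # Assumed to be produced by some upstream estimator (not modeled here).
    collision_probability: float


def location_key(lat, lon, precision=4):
    """Round coordinates so nearby sightings map to the same whitelist entry."""
    return (round(lat, precision), round(lon, precision))


def choose_action(obj, whitelist):
    """Pick a response for one radar object.

    Assumption: a near-certain collision overrides the whitelist.
    """
    if obj.collision_probability >= FULL_BRAKE_THRESHOLD:
        return "full_brake"
    if location_key(obj.lat, obj.lon) in whitelist:
        # A known stationary object (sign, bridge) that drivers pass safely.
        return "continue"
    # An unrecognized object: slow down gradually.
    return "brake_gently"


# A hypothetical whitelist with one "approved" object, such as an overhead sign.
whitelist = {location_key(37.0, -122.0)}
print(choose_action(RadarObject(37.0, -122.0, 0.10), whitelist))
print(choose_action(RadarObject(37.5, -122.0, 0.10), whitelist))
print(choose_action(RadarObject(37.5, -122.0, 0.99995), whitelist))
```

In this sketch the whitelist is a plain set of rounded coordinates. A real system would presumably also match on the radar signature of the object, not just its position.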

Trappe, an IEEE Signal Processing Society member, says Tesla’s plan is a clever combination of cameras, radar, and fleet learning that may make the new Autopilot a significant improvement, although he worried about false radar signals from a deliberate hack of automated vehicles, citing research published recently by IEEE. “What will be important, then, is to see how well ‘fleet learning and sharing of data’ can be tuned to filter out anomalous signals,” he wrote by email.

To test on the road, or not

The key words in Tesla’s Autopilot statement are “should” and “almost” regarding avoiding future accidents. Despite the uncertainty, Tesla is confidently deploying Autopilot as a beta product because, statistically, machines should be better drivers than humans. Of the more than 33,000 motor vehicle deaths in the US each year, about 94% are due to human error. Computers will bring that down significantly, Tesla says.

Today’s prototype autonomous cars still crashed 24% less frequently than human drivers (3.2 times per million miles compared with 4.2 times for humans) in a Google-commissioned study of its vehicles by Virginia Tech University. Numbers like that will only get better, Musk insists, eventually winning over the public and government regulators.
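For readers who want to check the arithmetic, the 24% figure follows from the two crash rates quoted above. The short calculation below uses only those two numbers. The function name `relative_reduction` is our own.

```python
def relative_reduction(baseline, observed):
    """Fractional drop of `observed` relative to `baseline`."""
    return (baseline - observed) / baseline


human_rate = 4.2       # crashes per million miles, human drivers
autonomous_rate = 3.2  # crashes per million miles, autonomous prototypes

reduction = relative_reduction(human_rate, autonomous_rate)
print(f"{reduction:.1%}")  # about 23.8%, which rounds to the quoted 24%
```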

But we’re not there yet. Companies like Ford and Alphabet’s Google are even taking the opposite approach. Instead of incremental, semi-autonomous progress toward self-driving cars that relies on customers’ use to fine-tune it, those companies are going directly to fully autonomous cars that will require no human oversight. Ford plans to have its self-driving cars on the road by 2021, along with Volvo, Audi, Google, and others in a similar time period. The companies use much of the same technology as Tesla, along with other approaches such as laser sensors, or Lidar.

Learn by driving

Tesla has decided to just run the experiment. Tesla’s Autopilot is explicitly in “beta,” referring to its incomplete status (Google famously kept Gmail in beta for more than five years). While Tesla has said “we do not and will not release any features or capabilities that have not been robustly validated in-house,” the final steps of its quality control program are in the hands of its drivers.

It has no lack of volunteers. Tesla says it has close to 1 billion miles of on-the-road data, with more than 100 million involving Autopilot. Many of Tesla’s customers are classic early adopters who don’t seem to mind the chance to perfect a nascent technology, even at 75mph. Despite its share of critics, Tesla’s customer base, about 400,000 of whom paid $1,000 for a spot on the waiting list to buy a Model 3, does not seem deterred.

Tesla’s strategy shows it’s taking one more step towards trusting machines more than humans. It is enforcing rules that, until now, have only been a warning to drivers. Tesla’s “Autosteer” feature will now warn drivers to put their hands back on the steering wheel if they remove them while Autopilot is engaged. If drivers ignore the warnings, the car will disengage Autopilot and need to be parked before self-driving mode can be turned back on.