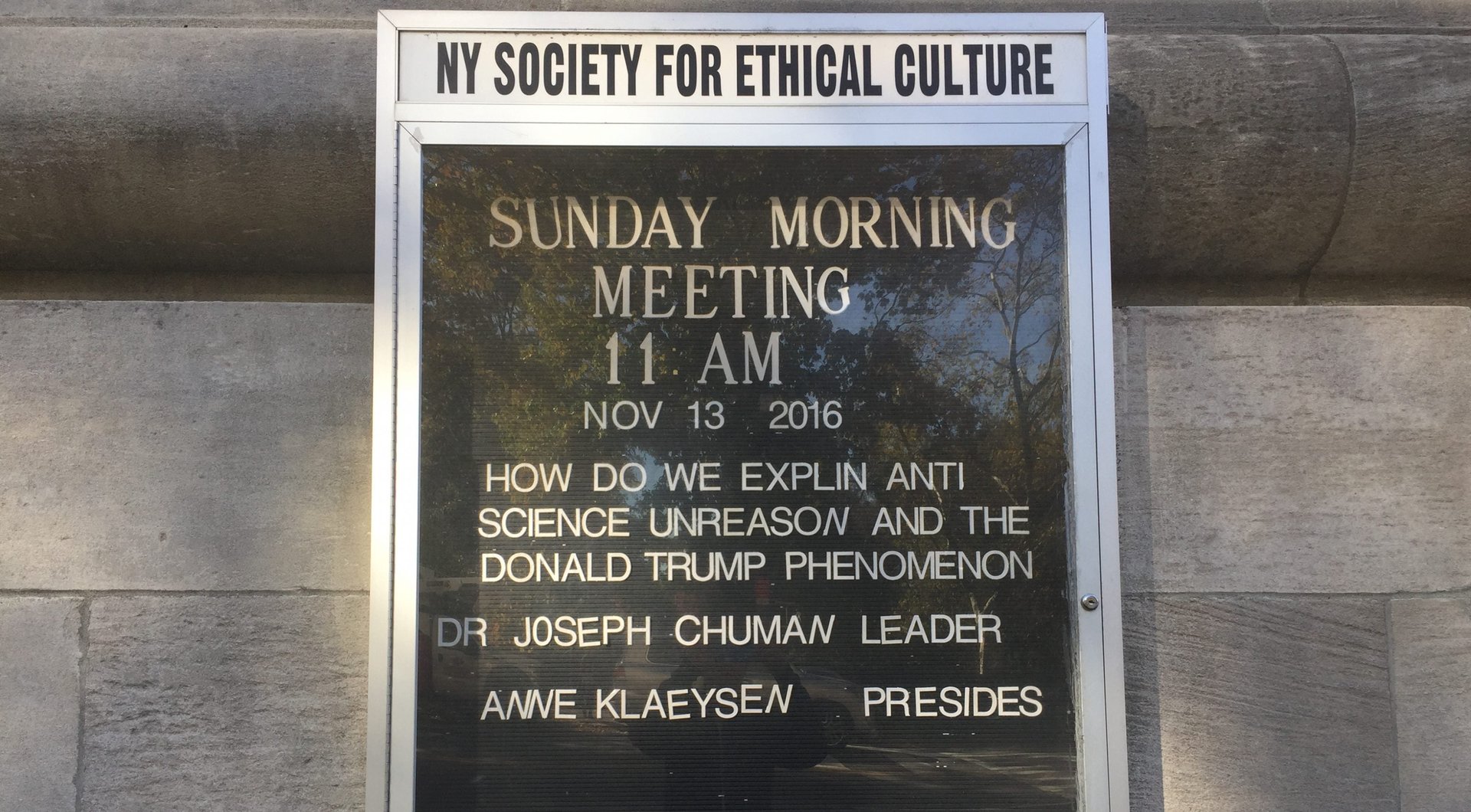

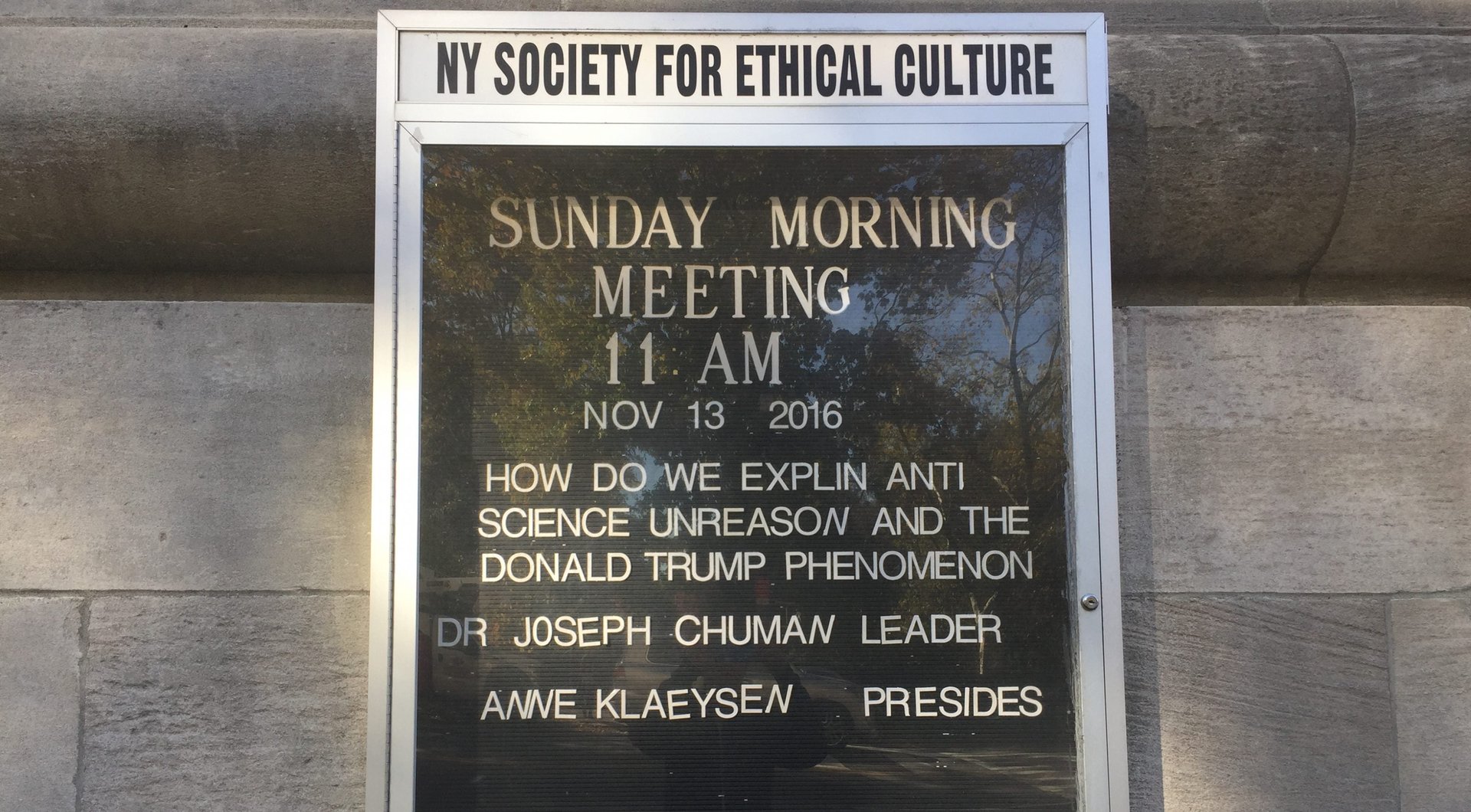

The trouble is not with polling but with the limits to human interpretation of data

When the US presidential election was called, even Republican strategist Mike Murphy declared data dead. Others have said it’s the end of polling.

To those who felt a Hillary Clinton victory was all but certain, Donald Trump’s success at the polls might undermine faith in big data. But this sentiment misunderstands statistics. Data is impartial and accurate; when things go wrong, it’s usually when we try to interpret it.

How different people assess risk and make decisions often comes down to how we perceive probabilities. Assigning a probability to an uncertain outcome is part art and part science. The most scientific way is to use data, in this case polling numbers. This time, election forecasts based on polling data were spectacularly inaccurate. They predicted an easy Clinton victory, and assumed that women and college-educated voters would turn out for her in large numbers. In fact, according to exit polls, 42% of women voted for Trump, including 45% of white women with college degrees.

Forecasts also predicted hardly any minority voters would consider Trump. But they did. Minority groups voted more for Obama than for Clinton. A non-trivial number, nearly one third of Hispanics and Asians, voted for Trump.

What seems like a failure of polling data, though, is really our inability to approach the data objectively.

Polls predicting a Clinton win may not have been adequately scrutinized

Election forecasting seems straightforward. You take a poll that asks people how they plan to vote and extrapolate the results. But it’s not that simple. Polls only reach a small subset of voters; projecting the results onto the rest of the population requires both carefully choosing your sample and making the right adjustments.
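To make "the right adjustments" concrete, here is a minimal sketch of one common adjustment, reweighting a sample so each group counts in proportion to its share of the population. The group labels, the respondent counts, and the population shares are invented for illustration; they are not taken from any real poll, and actual forecasters use far more elaborate models.

```python
def raw_share(sample):
    """Unadjusted share of respondents who support the candidate.

    sample: list of (group, supports) pairs, where supports is a bool.
    """
    return sum(1 for _, supports in sample if supports) / len(sample)


def reweighted_share(sample, population_shares):
    """Support estimate after weighting each group by its population share.

    population_shares: dict mapping group -> share of the electorate
    (assumed here to be known and to sum to 1).
    """
    counts = {}  # group -> (respondents, supporters)
    for group, supports in sample:
        n, k = counts.get(group, (0, 0))
        counts[group] = (n + 1, k + (1 if supports else 0))

    estimate = 0.0
    for group, share in population_shares.items():
        if group not in counts:
            raise ValueError(f"no respondents in group {group!r}")
        n, k = counts[group]
        estimate += share * (k / n)
    return estimate


if __name__ == "__main__":
    # Hypothetical poll: group "A" is over-represented among respondents.
    sample = [("A", True)] * 6 + [("A", False)] * 2 + [("B", False)] * 2
    shares = {"A": 0.5, "B": 0.5}  # assumed true makeup of the electorate
    print(raw_share(sample))                 # unadjusted estimate
    print(reweighted_share(sample, shares))  # adjusted estimate
```

In this made-up poll the unadjusted number overstates support because one group answered more often than its size warrants. The adjusted number is only as good as the assumed population shares, which is exactly where a forecaster's judgment enters.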

Election forecasts must supplement the raw polling data with guesses about how many people will vote and what undecided voters will do, while separating out meaningless blips in the data. Many models use historical data to make these sorts of inferences. These models assume that past patterns of turnout, demographics, economic conditions, and party loyalty can predict how all of these things will play out in the future.

Using historical data requires taking a thoughtful stand on what span of history is most appropriate, if any is at all. This election proved over and over that old relationships no longer applied. But it was tempting to ignore this consideration because many forecasters approached the question (“who will you vote for?”) with a prior belief that a Clinton win was inevitable. Any estimate confirming that assumption didn’t get much scrutiny. That may explain why so many forecasts pointed to a Clinton win.

Trump’s big win was not unforeseeable

In hindsight, there was decent evidence a Trump victory was more than just remotely plausible. What’s more, some people may have lied when asked who they were voting for. The fact that internet surveys (as opposed to phone surveys) had Trump ahead should have been a clue. Other models took a different approach and predicted a Trump win based on the state of the economy. The FiveThirtyEight forecast handled its data differently, and also produced higher odds for Trump.

Personal biases don’t just distort election forecasts. They influence which forecasts we choose to believe. It was easy to be suspicious of, or even hostile to, models that strayed from the pack if they violated the conviction that a Clinton win was the logical outcome. If we were more open, perhaps a Trump victory never would have been classified as a tail event. Maybe there would have been a different outcome.

The Trump victory has many unknown consequences. But it shouldn’t make us distrustful of data. Data remains the best tool we have to understand the world around us and make informed decisions.

But we also must accept that data interpretation is fallible, subject to our own biases and our inability to see beyond our own bubble.