Racist tweeters can be convinced to stop spreading hate—if a white man asks them to

The first reaction to racist tweeters spreading bile is to ask them to stop. But the question is, will they listen?

To see if he could find a concrete answer, Kevin Munger, a PhD candidate at New York University, deployed anti-racism bots against abusive Twitter users. He targeted white males because white nationalists have been emboldened on Twitter in the past few years, with movements like GamerGate and more recently with Donald Trump’s ethnocentric, anti-immigration presidential campaign.

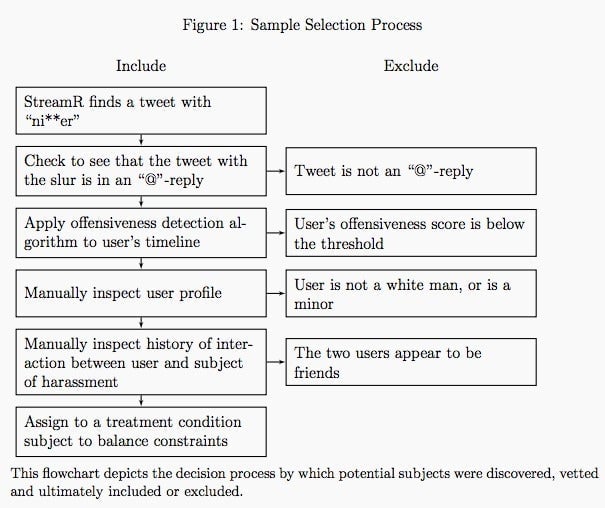

Munger identified accounts that had tweeted the n-word, a racial slur considered intrinsically offensive in the US and beyond, at another account. Using an algorithmic model, he eliminated anyone who wasn't a white male over 18 years old. The algorithm also excluded cases where the user and the target of the harassment appeared to be friends. In the end, Munger narrowed his sample to 231 accounts.

He then created four types of bots: two were “black” and two were “white,” distinguished by the same cartoon emoji in different skin tones. Munger gave them stereotypically white- or black-sounding names accordingly. Within each skin color, one bot was “high-status,” with between 500 and 550 followers, and the other was “low-status,” with fewer than 10 followers.

Each bot's account was made to seem lifelike with some random tweets and retweets of generic news, and each bot was assigned to about one-quarter of the 231 sampled accounts. Whenever one of those 231 users tweeted a racial slur at someone, Munger would log in to the bot assigned to that user and reply with this exact message: “@[subject] Hey man, just remember that there are real people who are hurt when you harass them with that kind of language”

Results showed that the white, high-status bot had the most influence, reducing the average number of racist tweets per day by 0.3. “My intervention caused the 50 subjects in the most effective treatment condition to tweet the n-word an estimated 186 fewer times in the month after treatment,” Munger wrote in the resulting study, published in the journal Political Behavior.

In the other three conditions, racist tweets fell by less than 0.1 per day. And the “black” bot with fewer than 10 followers actually saw a short-term spike in racist tweets after it called people out on their behavior.

Munger thinks the low-status bots had particularly little impact because, with followers in the single digits, they were “too low status.” “If I could do it again, I would give them an intermediate number of followers like, say, 100.” The bigger issue, however, is that the “black” bots showed little to no reduction in hateful language. Munger believes this is probably because those who were already exhibiting racist behavior weren't about to actually hear out someone they thought was black.

On the internet, people are prone to deindividuation: the anonymity of a crowd can lead an otherwise ordinary person to act irrationally if the people around them are doing the same. Racist tweeters tend to lose their self-awareness and become immersed in hateful group discourse. In such situations, a member of the in-group is likely the only one who can effectively reshape a racist's perception and behavior. That is likely why the “white” bots fared better than the “black” ones.

However, Munger doesn’t believe deploying any sort of bots against hate speech is a scalable solution to Twitter’s abuse problems. “If people knew bots were tweeting at them, it would not work,” he says. The experiment is meant to serve as a precedent for people who themselves want to find new ways to police their online communities. Meanwhile, for those people of color who do want to call out racism, Munger recommends trying to find common ground as a starting point.