Pacemakers have thousands of vulnerabilities hackers can exploit, study finds

There are a lot of devices that don’t need to be connected to the internet. Toasters don’t need to be connected to the internet. Neither do juicers, door locks, or rectal thermometers. Pacemakers were around for at least 40 years before someone got the bright idea to expose them to the information superhighway, a move that does indeed help doctors assist patients, but it comes with great risk.

In a recent review of seven pacemaker products made by four different vendors, security researchers identified 19 security vulnerabilities, most of which the products shared. Among those was the use of third-party components, which alone accounted for 8,600 security flaws. Some of the weaknesses are simple software bugs, and others are vulnerabilities that could be life-threatening under certain conditions.

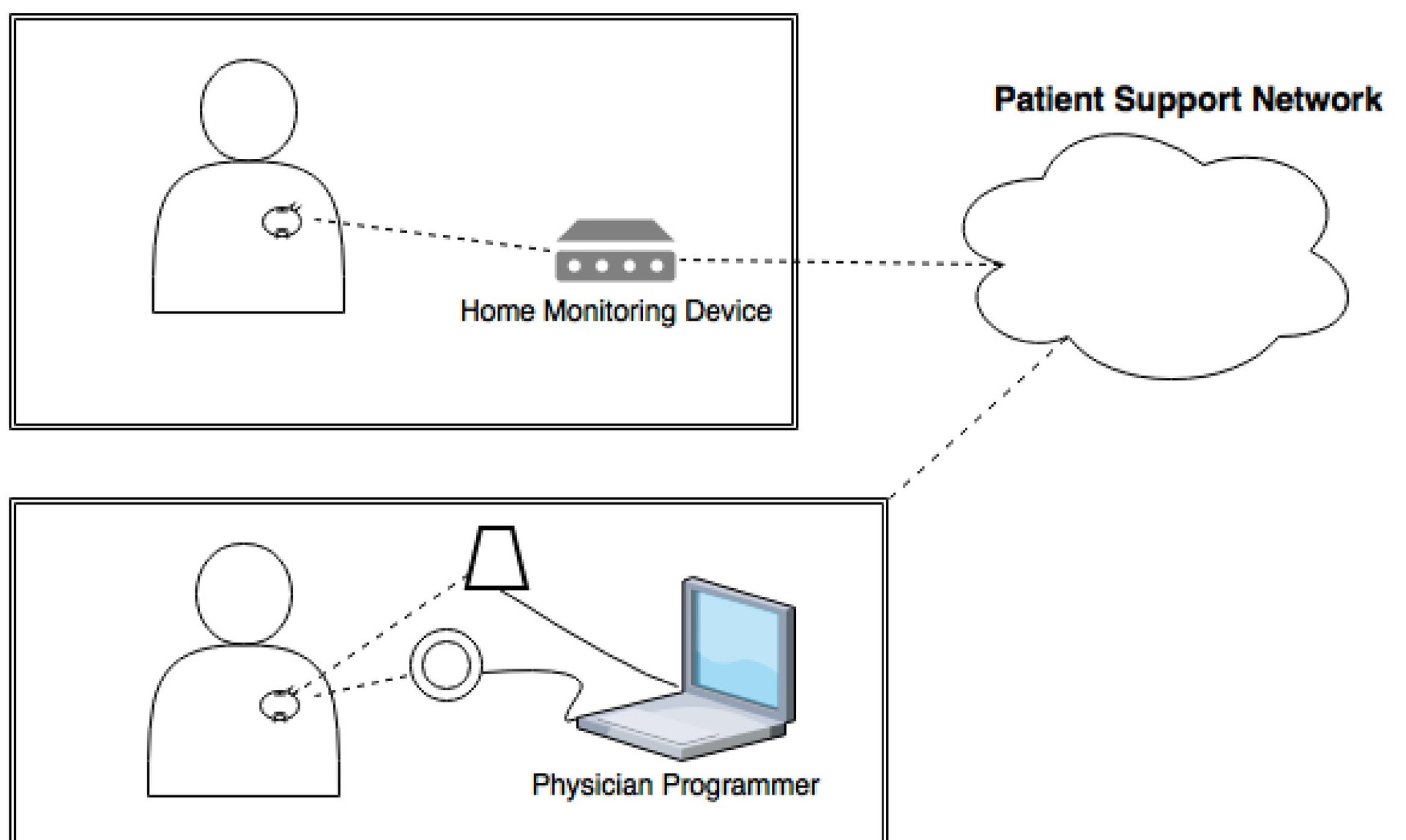

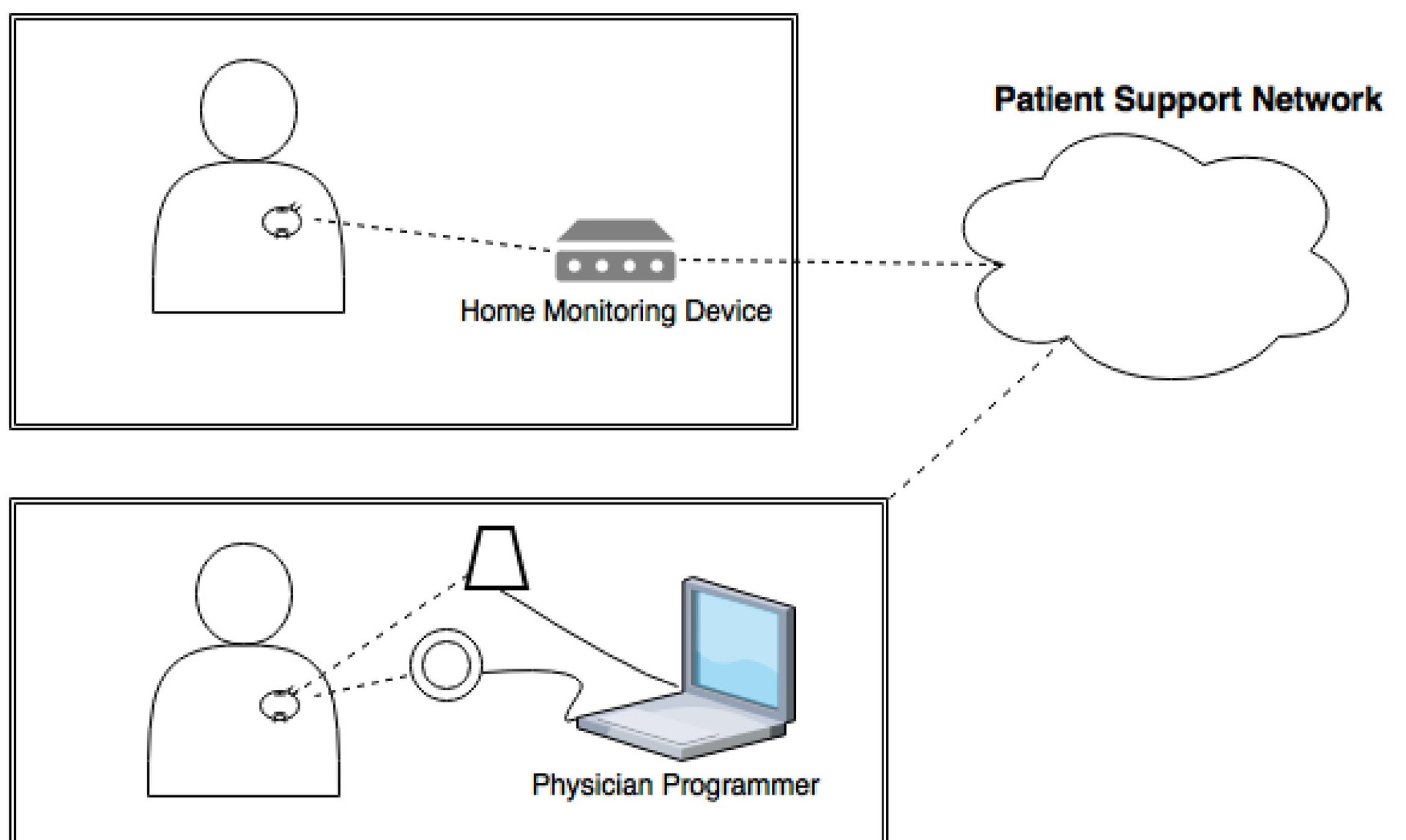

The review, conducted by security research firm WhiteScope, found many similarities between the pacemaker systems it examined. (The report did not specify which systems those were.) All of the pacemakers communicate with external monitoring devices, which send vital data over the internet for doctors to review. On the other end, doctors use pacemaker programming devices to control and make adjustments to the implants.

At every point in that infrastructure, the researchers identified serious issues. If hackers got their hands on an external monitoring device, for example, they could use it to connect to any pacemaker it supports using a universal authentication token that the vendors built into the devices. Once connected to a pacemaker, hackers could potentially receive the data the pacemaker transmits and block its transmission to its own monitoring device.

Even more concerning, this flaw could be paired with a vulnerability in the pacemaker itself, and used to turn a monitoring device into a pacemaker programmer, which only a patient’s physician is meant to have access to. The monitoring device could then potentially be used to connect to a pacemaker and adjust its settings in such a way that could harm or kill the person who has the implant.

That sort of hack would be possible in pacemakers that don’t block unwanted adjustments, the report says. The implants should have a “whitelist” functionality that allows only certain adjustments, and accepts those adjustments only from one unique programming device. Otherwise, an attacker could use a hijacked monitoring device or custom-made hardware to “maliciously program” the pacemaker, according to the report.
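To make the whitelist idea concrete, here is a minimal sketch in Python of the kind of check the report describes. It is not taken from the report or from any vendor's firmware: the setting names, the allowed ranges, and the `is_allowed` function are all hypothetical, chosen only to illustrate the two conditions (an approved adjustment, from the one paired programmer).

```python
from dataclasses import dataclass

# Hypothetical whitelist: setting name -> (minimum, maximum) allowed value.
# These names and ranges are illustrative assumptions, not clinical limits.
ALLOWED_SETTINGS = {
    "pacing_rate_bpm": (40, 120),
    "pulse_width_ms": (0.1, 1.5),
}


@dataclass(frozen=True)
class Adjustment:
    programmer_id: str  # identifier of the device sending the request
    setting: str        # name of the setting to change
    value: float        # requested new value


def is_allowed(adj: Adjustment, paired_programmer_id: str) -> bool:
    """Return True only if the request comes from the paired programmer
    and targets a whitelisted setting within its allowed range."""
    # Condition 1: accept adjustments from one unique programming device.
    # (A real system would need cryptographic proof of identity; a bare
    # identifier is used here only to keep the sketch short.)
    if adj.programmer_id != paired_programmer_id:
        return False

    # Condition 2: the setting must be on the whitelist.
    limits = ALLOWED_SETTINGS.get(adj.setting)
    if limits is None:
        return False

    # Condition 3: the requested value must fall inside the allowed range.
    low, high = limits
    return low <= adj.value <= high
```

Under these assumptions, a request to set `pacing_rate_bpm` to 70 from the paired programmer would pass, while the same request from any other device, a value of 300, or a setting that is not on the list would be rejected.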

Another issue the researchers identified is that pacemaker systems lack authentication processes. That is, when doctors connect to a pacemaker, they don’t have to enter a password. As Matthew Green, a computer science professor at Johns Hopkins University, pointed out on Twitter, however, adding authentication to the process could create an impediment to patient care.

“If you require doctors to log into a device with a password, you will end up with a post-it note on the device listing the password,” Green said.

One of the most troubling discoveries to come out of the review was not the research itself, but how the researchers procured the devices to perform their security check. The manufacturers of pacemaker devices are supposed to carefully control their distribution, but the researchers bought all of the equipment they tested on eBay.

“All manufacturers have devices that are available on auction websites,” the researchers wrote in a blog post about the study. “Programmers can cost anywhere from $500-$3000, home monitoring equipment from $15-$300, and pacemaker devices $200-$3000.”

Below is the full list of the vulnerabilities the researchers discovered, and whether the vulnerability was found in devices made by each of the four vendors they reviewed.