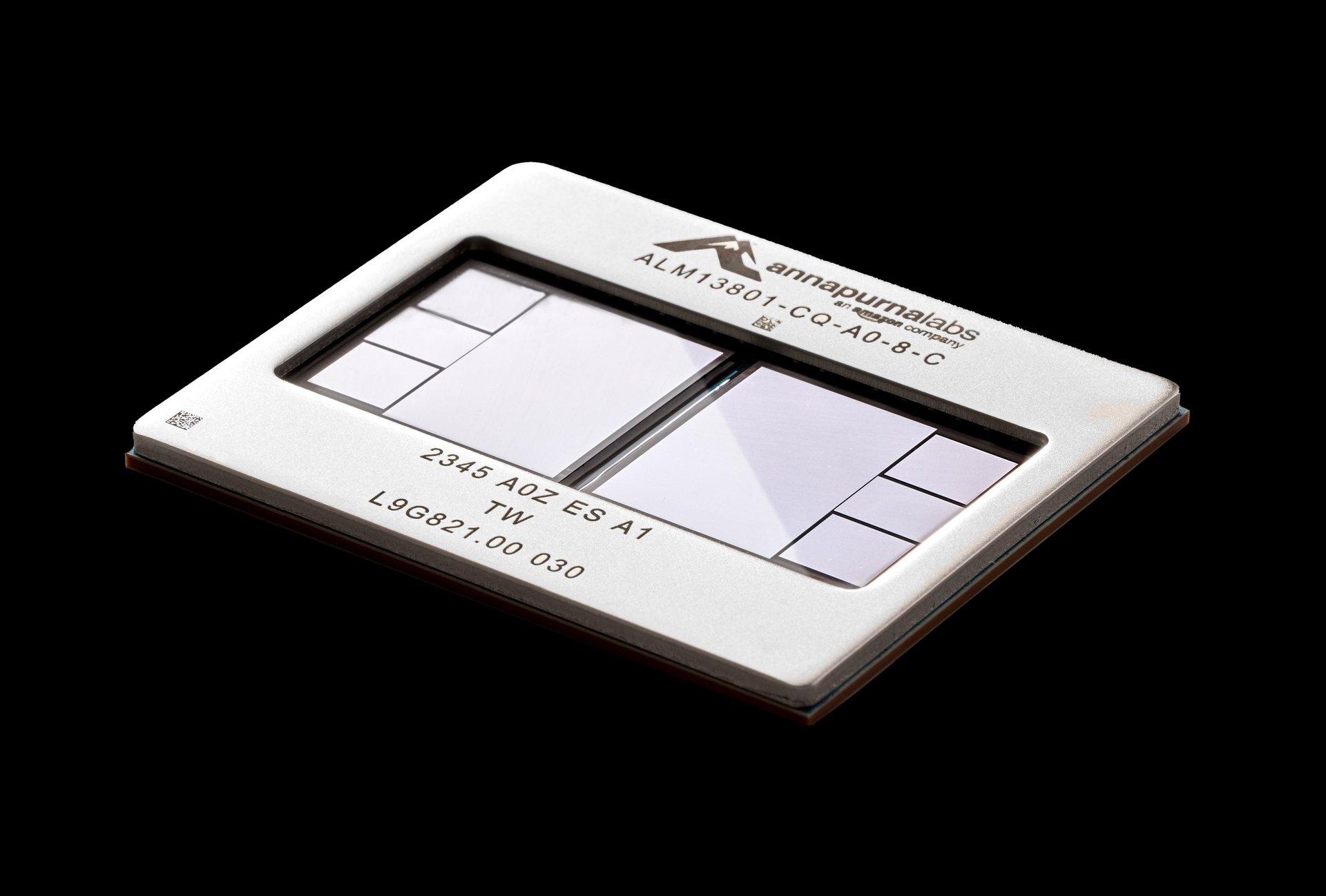

Amazon's next-generation AI training chip is here

Amazon Web Services unveiled Trainium3 and announced general availability of its Trainium2 chips

Amazon Web Services (AMZN) unveiled its next-generation artificial intelligence training chip, which it says is faster and is expected to use less energy.


Trainium3 is the first AWS chip built on a 3-nanometer process, so far the most advanced semiconductor manufacturing technology, which allows for better performance and power efficiency. The first Trainium3 chips are expected to be available late next year, AWS announced at its re:Invent conference on Tuesday.


UltraServers powered by Trainium3 are expected to perform four times better than those powered by Trainium2, AWS said, "allowing customers to iterate even faster when building models and deliver superior real-time performance when deploying them."

The cloud giant's Trainium2 chips, which are four times faster than their predecessor, are now generally available, AWS said. The Trainium2-powered Amazon Elastic Compute Cloud (Amazon EC2) instances offer 30% to 40% better price performance than current GPU-based EC2 instances, and each features 16 Trainium2 chips. The new Amazon EC2 instances are "ideal for training and deploying LLMs with billions of parameters," AWS said.

The cloud giant said it is working with AI startup Anthropic to build an EC2 UltraCluster of Trainium2-powered UltraServers, called Project Rainier. In November, AWS announced it was following up a previous $4 billion investment in the AI startup with another $4 billion. In the next phase of the partnership, Anthropic will use AWS as its primary AI training partner.

“Trainium2 is purpose built to support the largest, most cutting-edge generative AI workloads, for both training and inference, and to deliver the best price performance on AWS,” David Brown, vice president of Compute and Networking at AWS, said in a statement. “With models approaching trillions of parameters, we understand customers also need a novel approach to train and run these massive workloads. New Trn2 UltraServers offer the fastest training and inference performance on AWS and help organizations of all sizes to train and deploy the world’s largest models faster and at a lower cost.”

AWS chief executive Matt Garman also announced the next-generation P6 family of EC2 instances, developed with Nvidia (NVDA) and featuring the chipmaker's new Blackwell chips. Blackwell offers 2.5 times faster compute than the current generation of graphics processing units, or GPUs, Garman said.