All about AI robots

The technology for humanoid robots is advancing. But a fundamental question remains.

In a demonstration video from Google DeepMind, a robot arm delicately folds origami, packs snacks into Ziploc bags, and deftly manipulates objects with surprising precision. When an item slips from its grasp, the robot quickly readjusts and continues its task. These aren’t jerky, pre-programmed movements, but something more fluid and adaptive — the result of a new AI model called Gemini Robotics that the company unveiled earlier this month.

Suggested Reading

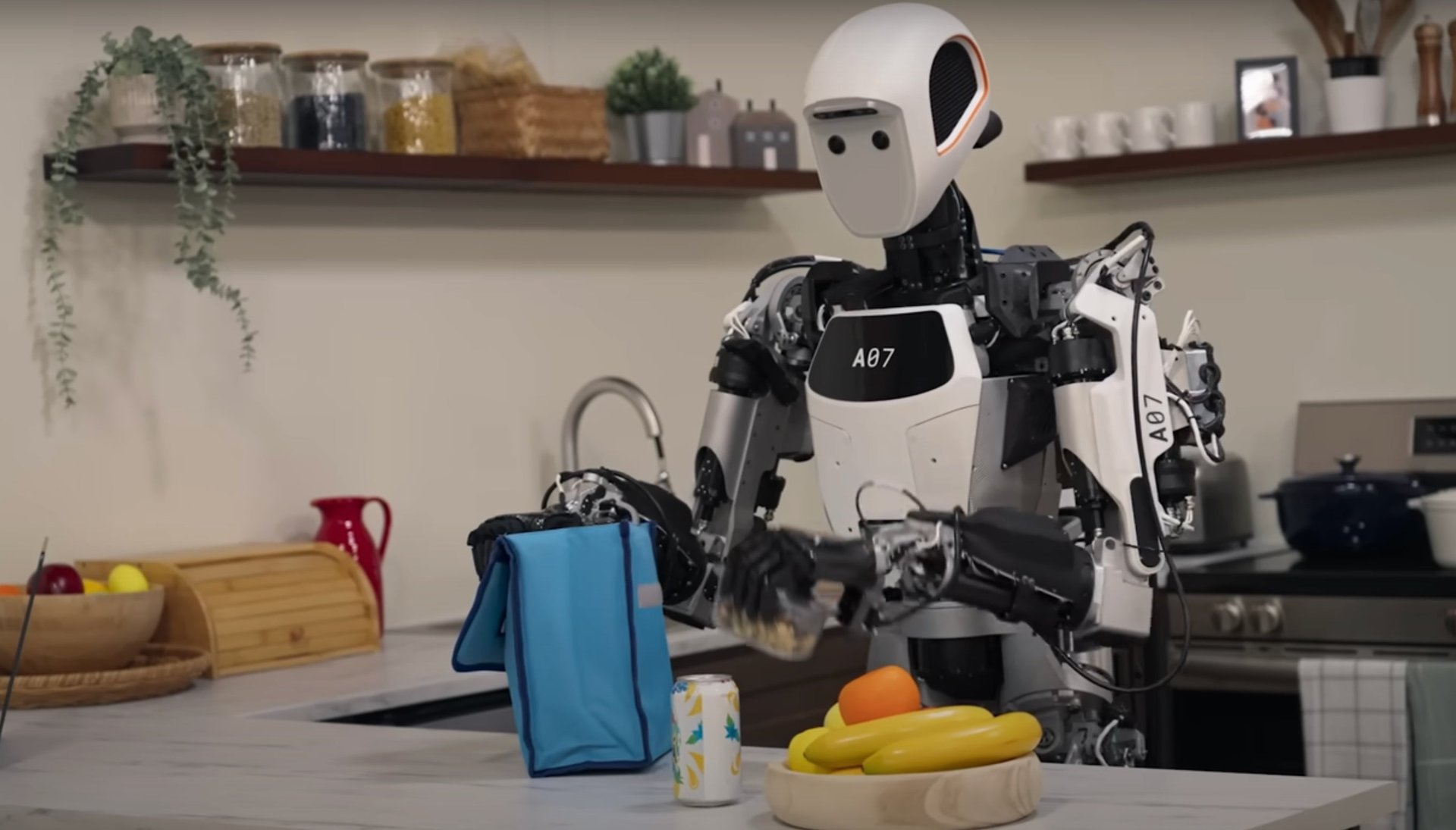

While the showcase focuses on robotic arms rather than full humanoid robots, the underlying technology is the same that will power the next generation of human-shaped machines. Google says its Gemini Robotics model is designed to “easily adapt to different robot types” and is already being tested with Apptronik’s humanoid Apollo robot.

“In order for AI to be useful and helpful to people in the physical realm, they have to demonstrate ‘embodied’ reasoning — the humanlike ability to comprehend and react to the world around us,” Carolina Parada, who leads the DeepMind robotics team, said in a statement.

The demonstration is part of a new wave of humanoid robots from tech giants like Google and Meta, and startups like Figure AI and Agility Robotics. They’re being pitched as the future of logistics and household chores. Perhaps no science fiction technology besides flying cars has tantalized us so much and for so long as the promise of robot helpers that would finally free us from the drudgery of dishes and laundry (OK, these stories have scared us on occasion, too). Now, equipped with advanced AI models, these mechanical workers are taking their first tentative steps out of our imagination and into reality.

But while the technology is advancing, a fundamental question remains: Should we build robots for our world, or adapt our spaces for simpler machines?

The makers of these humanoid robots are pushing for the former. They argue the world is designed for human bodies, after all, with stairs, shelves at shoulder height, and important things located at eye level. Humanoid robot advocates argue this makes the human form the most logical design for machines meant to integrate into existing environments like our kitchens.

They are fighting an uphill battle against the only successful robots so far: mostly non-humanoid machines in warehouses, where shelving systems are designed for wheeled picking robots or where roped-off areas are reserved for robots alone. These purpose-built environments allow for much simpler robot designs.

But humanoid robotics companies have a powerful new tool they’re betting will change everything: AI systems like Google’s Gemini and OpenAI’s GPT that understand and generate human speech. This technology could let people simply talk to robots like they’d talk to a person — “fold that shirt,” or “put away the dishes” — without needing specialized programming or technical knowledge. Even more promising, these AI models might help robots adapt to new situations they weren’t specifically trained for, potentially solving one of robotics’ most persistent challenges.

Despite impressive demos and promises to high heaven, the current reality is more modest. The robots remain slow compared with humans, and they struggle with delicate or malleable items that change shape when grasped. The unpredictable chaos of a household, with young children running around, toys scattered across the floor, or unexpected surprises like keys in the refrigerator (what could be a regular Tuesday in many households), remains largely untested and far beyond current capabilities.

These problems aren’t stopping companies from trying. Meta is reportedly building a platform for humanoid robots that would be “the Android of androids.” Elon Musk, already stretched thin across his many, many projects, has found time to keep posting about Tesla’s Optimus humanoid robot. He recently announced on X that at least one of his bots will be on its way to Mars by “end of next year,” beating humans there by at least a couple of years.

But other significant barriers remain before these robots enter widespread use. Researchers in human-robot interaction have observed that humans typically have much lower tolerance for robot errors than for human ones. Studies in this field show that while we might forgive a human coworker who occasionally drops items, a robot that makes even a single significant mistake can permanently lose user trust.

This trust issue becomes even more complicated as robots integrate large language models, which are known to occasionally “hallucinate,” or generate incorrect information. A robot that confidently misinterprets a command due to an LLM hallucination could create dangerous situations in physical environments. While an AI chatbot’s mistake might be merely frustrating, a robot acting on hallucinated instructions could damage property or injure people.

Regardless, billions of dollars continue to flow into humanoid robotics from tech leaders who grew up immersed in science fiction and don’t want to give up the dream. At Nvidia’s annual developer conference this week, CEO Jensen Huang showed off new software that he said would help humanoid robots move through our spaces more easily. When asked later when we will know that AI has become ubiquitous, he said it would be when humanoid robots are “wandering around.” And he said that’s coming soon.

“This is not five-years-away problem,” he said. “This is a few-years-away problem.”

—Jackie Snow, Contributing Editor