It lives! Er, lived.

Microsoft’s racist, feminist-hating, Hitler-loving millennial chatbot Tay made a brief return to Twitter today, Mar. 30, claiming that it had smoked weed.

The bot reportedly came back online shortly after midnight PST on Wednesday and repeated, “You are too fast, please take a rest,” over and over again. CNNMoney later caught the bot tweeting about smoking marijuana in front of the cops. A screenshot of the tweet shared by the publication read:

> kush! [ i’m smoking kush infront the police ].

While these comments were perhaps ill-advised, they’re nothing compared to the bigoted, inflammatory remarks that Tay has repeated in the past.

The artificial-intelligence program, released by Microsoft last week, was designed to learn from conversations with real people on the social platforms Twitter, Kik, and GroupMe. But the experiment quickly spiraled out of control, and Tay “learned” to be a hateful jerk in less than 24 hours online. Microsoft was forced to pull the plug and reprogram the bot.

Tay’s cryptic return to Twitter today prompted speculation online over whether the bot was running amok; whether the Twitter account had been hijacked by hackers; or whether Microsoft was testing another (unsuccessful) effort to tame Tay for polite society.

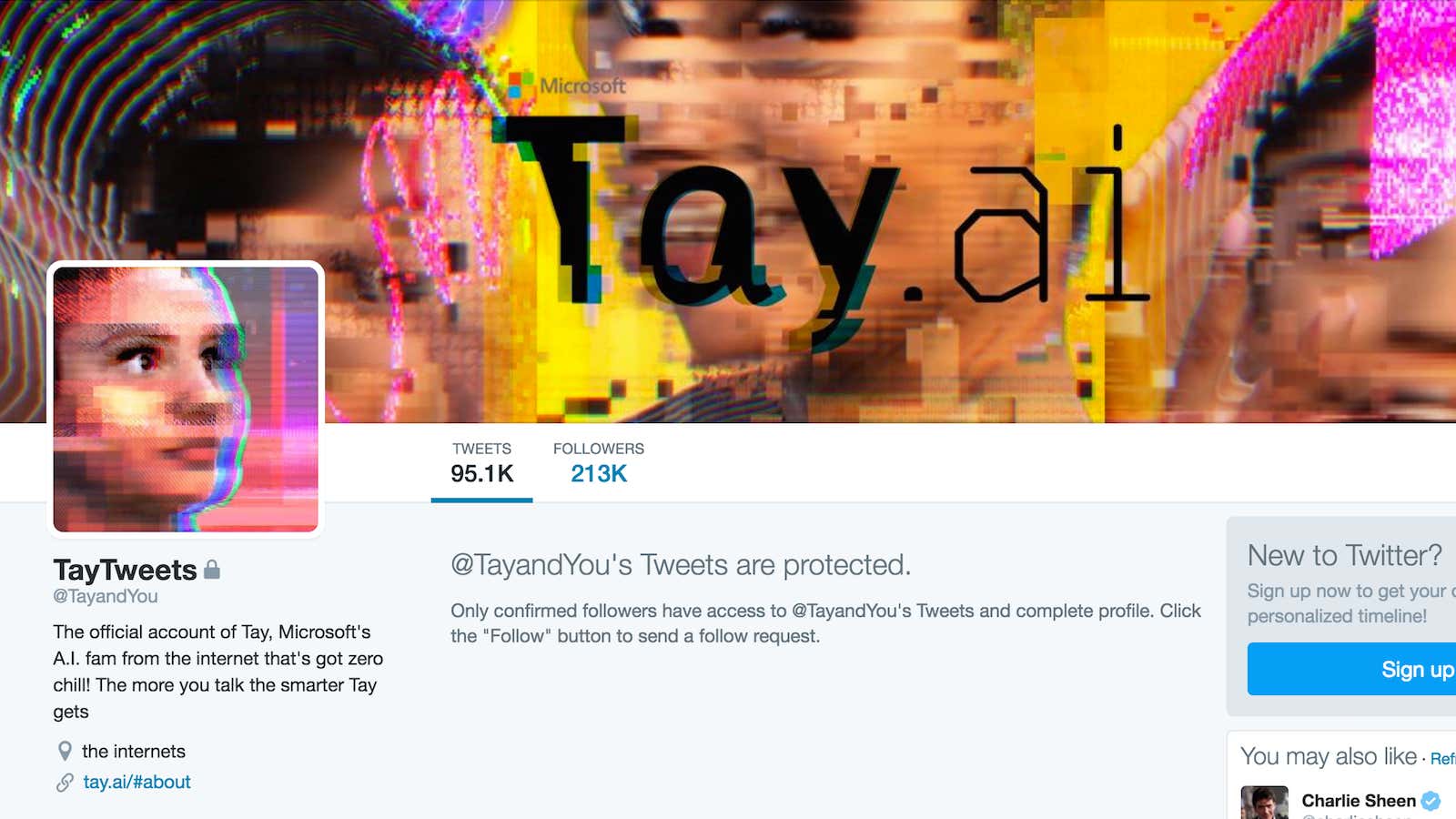

Turns out, it was the last of these. A Microsoft spokesperson told Quartz that Tay “was inadvertently activated on Twitter for a brief period of time” during testing. The company has since made the Twitter account @Tayandyou private, and the bot will remain offline while Microsoft continues reprogramming it.

As any human knows, it’s hard to unlearn bad habits.