MIT researchers are teaching robots to obey our commands through Alexa

“Alexa, tell my robot to pick up my grocery bags and put everything away in the fridge.”

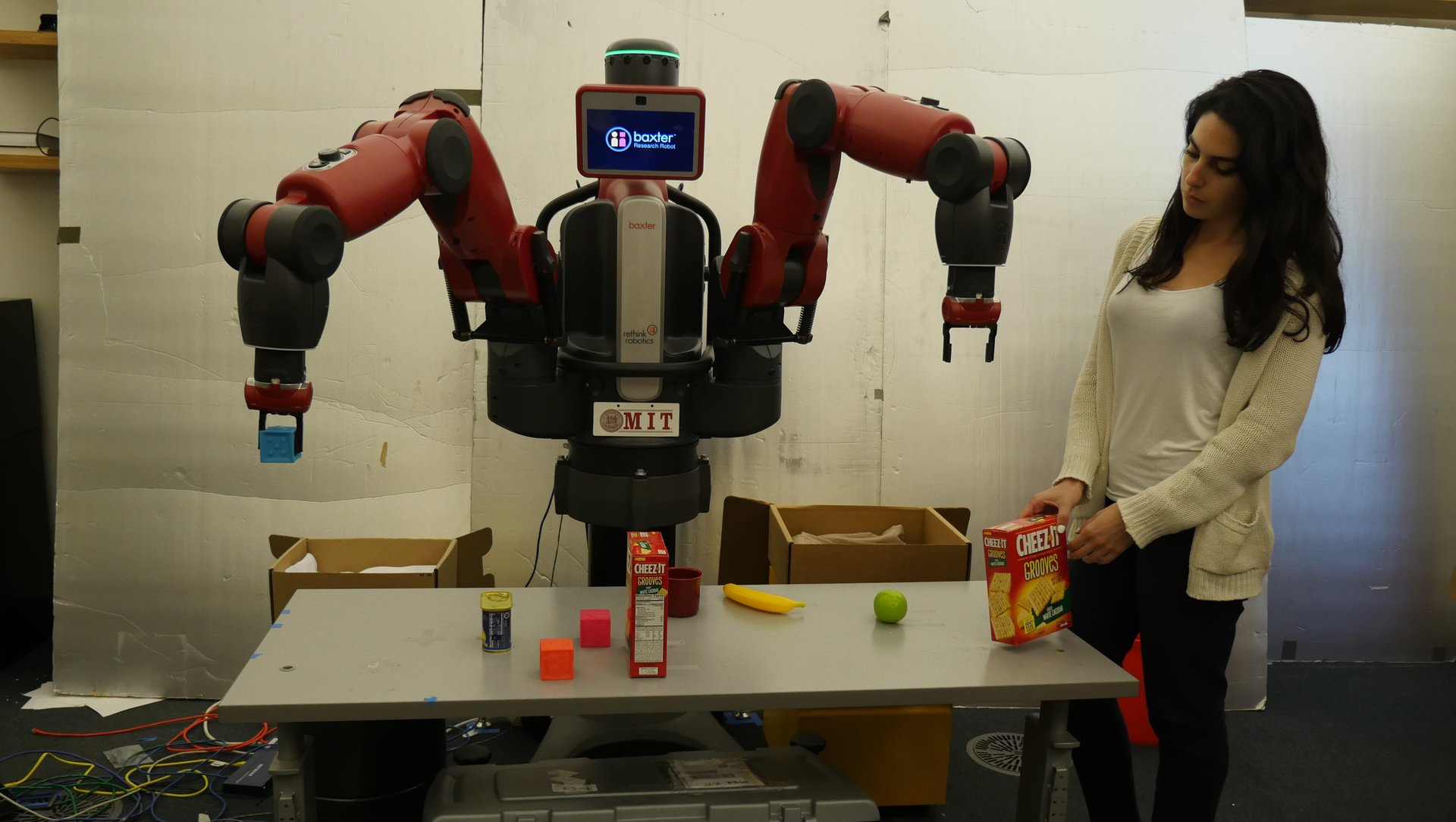

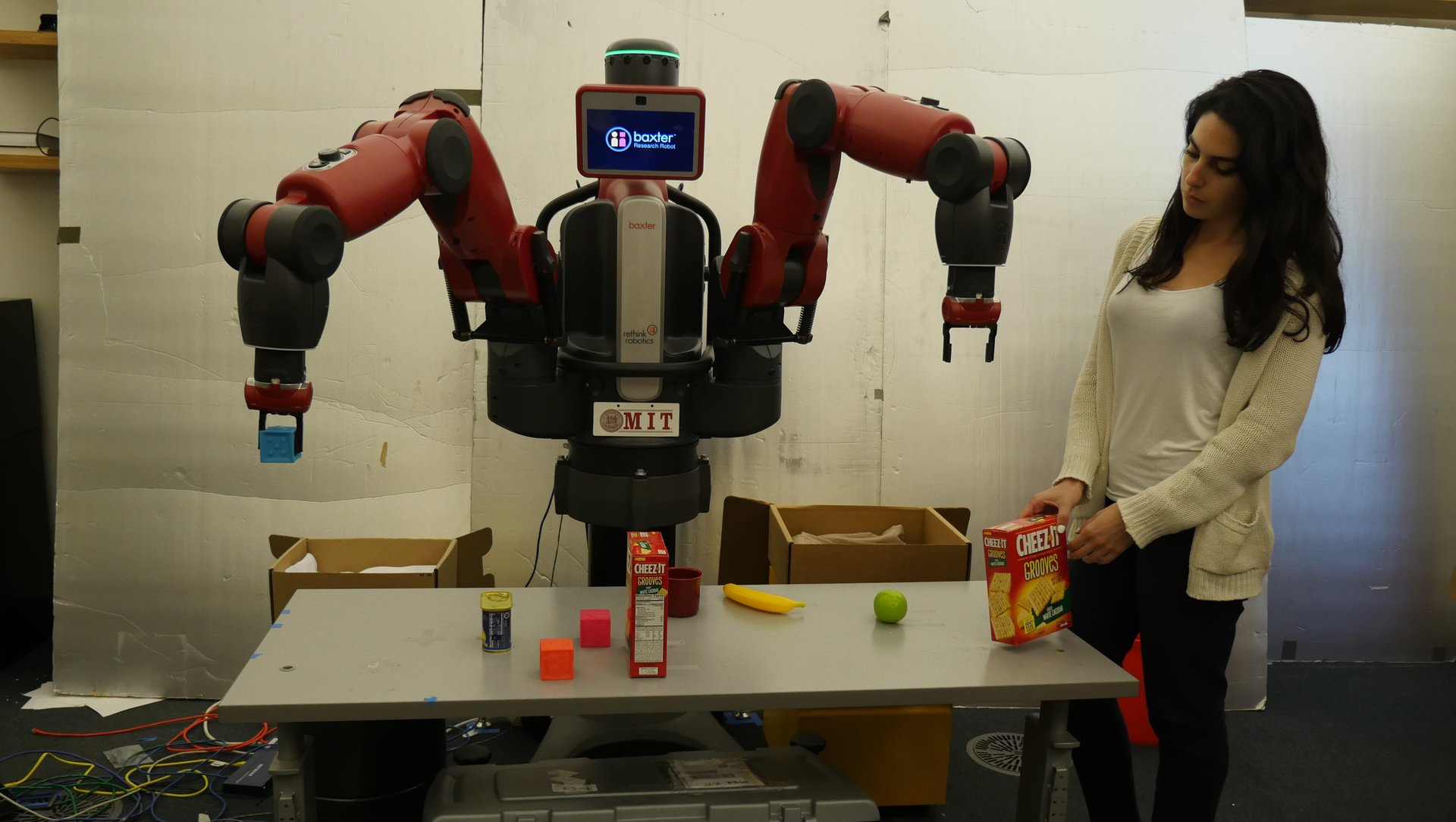

While that’s not something that Amazon’s digital assistant can currently do—even with the scores of robots Amazon uses, or the ones CEO Jeff Bezos parades around in—it could be, in the not-too-distant future. A group of students at MIT have been working with Alexa and a Baxter robot (a factory robot produced by Rethink Robotics) to teach the robot how to carry out tasks.

In their work, the students, led by Rohan Paul, a postdoctoral researcher with MIT’s CSAIL computer science lab, created a system through which Alexa can be used to make a robot pick up objects and put them in boxes, using only vague commands. Much in the same way that you can tell Alexa to turn off your internet-connected lightbulb or to have your Roomba clean the kitchen, the team’s robot responds to preprogrammed Alexa commands. You don’t tell Alexa to tell your Roomba to turn on, clean the kitchen, return to the dock once finished and alert you when it’s done; you just tell it to clean and it follows its programming.

MIT’s robot can also understand the context of the world around it and the words it hears. Instead of telling a robot precisely what to do, step-by-step, the team built a system that allows it to better understand the way humans talk. They have called it “ComText,” short for “commands in context.” If you put a hammer down in front of your average robot and say, “pick it up,” the bot will have no idea what you mean. With ComText, the team can tell the robot, through Alexa, “this is my hammer,” and then later they can say, “pick it up,” and the robot will know they mean, “pick up the hammer.”
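To make the hammer example concrete, here is a minimal sketch in Python of the general idea: remember what the speaker has named, then use that memory to fill in a vague “it.” This is a toy illustration only, not the team’s ComText model. The `ContextMemory` class, its `tell` and `resolve` methods, and the simple pattern matching are all assumptions invented for this example; a real system would also have to ground the words in what the robot’s sensors see.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ContextMemory:
    """Hypothetical store of facts the robot has been told (not ComText itself)."""
    owners: dict = field(default_factory=dict)  # object name -> who owns it
    last_mentioned: Optional[str] = None        # most recently named object

    def tell(self, utterance: str) -> bool:
        """Record a statement like 'this is my hammer'. Returns True if understood."""
        text = utterance.strip().lower().rstrip(".")
        match = re.fullmatch(r"this is my (\w+)", text)
        if match is None:
            return False
        obj = match.group(1)
        self.owners[obj] = "speaker"
        self.last_mentioned = obj
        return True

    def resolve(self, command: str) -> Optional[str]:
        """Replace a vague 'it' with the most recently named object.

        Returns None when the command says 'it' but nothing has been named yet.
        """
        words = command.strip().lower().split()
        if "it" not in words:
            return " ".join(words)  # nothing vague to resolve
        if self.last_mentioned is None:
            return None             # the "average robot" case: no idea what 'it' is
        action = " ".join(w for w in words if w != "it")
        return f"{action} the {self.last_mentioned}"


memory = ContextMemory()
print(memory.resolve("pick it up"))  # None: nothing has been named yet
memory.tell("This is my hammer")
print(memory.resolve("pick it up"))  # pick up the hammer
```

The sketch keeps only the single most recent object, which is enough for the hammer example but would fail as soon as two objects were named in a row.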

“Where humans understand the world as a collection of objects and people and abstract concepts, machines view it as pixels, point-clouds, and 3-D maps generated from sensors,” Paul said in a statement provided to Quartz. “This semantic gap means that, for robots to understand what we want them to do, they need a much richer representation of what we do and say.”

The team’s video shows the robot picking up snack items and packing them into boxes. In the group’s paper on their work, they said their next steps involve training their model so that robots can understand a sequence of commands and objects.

It’s not difficult to envision that, when Amazon has drones delivering our Whole Foods groceries to us, we’ll be able to tell Alexa to make sure our home robot puts them in the fridge immediately, because last time the yogurt went off by the time they put everything away.