There’s a glaring mistake in the way AI looks at the world

As humans, we’re pretty good at knowing what we’re looking at. We might be fleshy and weak compared to computers, but we string context and previous experience together effectively to understand what we see.

Artificial intelligence today doesn’t have that capability. The brain-inspired artificial neural networks that computer scientists have built for companies like Facebook and Google simply learn to recognize complex patterns in images. If a network identifies the pattern, say the shape of a cat coupled with details of a cat’s fur, that’s a cat to the algorithm.

But researchers have found that the patterns AI looks for in images can be reverse-engineered and exploited using what they call an “adversarial example.” By changing an image of a school bus by just 3%, one Google team was able to fool AI into seeing an ostrich. The implication of this attack is that any automated computer vision system, whether it be facial recognition, self-driving cars, or even airport security, can be tricked into “seeing” something that’s not actually there. Computer scientists developing these attacks say the work is necessary so we can understand what’s possible before someone who means harm can take advantage of the shortcomings of AI.

The latest example of this pushes the idea further than ever. A team from MIT was able to 3D-print a toy turtle with a pattern that fooled Google’s object detection AI into thinking it was seeing a gun with more than 90% accuracy. Before the MIT paper, research focused on two-dimensional images, but success with a three-dimensional figure shows objects themselves can be made to fool machines from any angle.

Earlier work aimed at tricking AI focused on identifying the pixels in an image that an algorithm depends on most to determine whether it’s looking at a specific object or person. Researchers then changed those pixels little by little, careful not to disturb how the image looked, checking that each change confused the machine slightly more, until the machine completely mistook one object for another.
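The step-by-step pixel nudging described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: a tiny hand-built linear classifier (`ToyClassifier`) stands in for a real neural network, the 64-pixel gray "image" and the class labels are made up, and the `step` and `eps` values are arbitrary. It is not the code any of the research teams used.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

class ToyClassifier:
    """Linear softmax classifier standing in for a neural network (assumption)."""

    def __init__(self, W, b):
        self.W, self.b = W, b

    def probs(self, x):
        return softmax(self.W @ x + self.b)

    def predict(self, x):
        return int(np.argmax(self.probs(x)))

    def grad_log_prob(self, x, target):
        # Gradient of log p(target | x) with respect to the pixels; large
        # entries mark the pixels the model depends on most.
        onehot = np.zeros(len(self.b))
        onehot[target] = 1.0
        return self.W.T @ (onehot - self.probs(x))

def iterative_attack(model, x0, target, step=0.005, eps=0.03, max_steps=100):
    """Nudge pixels toward `target` in small signed steps until the model is fooled."""
    x = x0.copy()
    for _ in range(max_steps):
        if model.predict(x) == target:
            break
        x = x + step * np.sign(model.grad_log_prob(x, target))
        x = np.clip(x, x0 - eps, x0 + eps)  # stay within eps of the original pixels
        x = np.clip(x, 0.0, 1.0)            # stay within the valid pixel range
    return x

# Hypothetical 64-pixel image and hand-built weights: class 0 = bus, class 1 = ostrich.
d = 64
pattern = np.where(np.arange(d) % 2 == 0, 1.0, -1.0)
model = ToyClassifier(W=np.stack([pattern, -pattern]), b=np.array([1.0, 0.0]))
x0 = np.full(d, 0.5)

adv = iterative_attack(model, x0, target=1)
print("original prediction:", model.predict(x0))
print("adversarial prediction:", model.predict(adv))
print("largest pixel change:", np.max(np.abs(adv - x0)))
```

The loop stops at the first perturbation that flips the prediction, which is why the change stays small. That is also why, in this sketch, the result sits very close to the decision boundary.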

But since those changes are so slight, when one of these altered images is printed and exists in the real world, it’s less effective because of external factors like different lighting conditions or camera quality, says Anish Athalye, co-author on the MIT paper.

His new algorithm takes the adversarial example and simulates all the possible ways to view it—every angle and distance. It then combines all those potential viewpoints into a single pattern.
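The idea of optimizing one pattern against many simulated views can also be sketched on a toy problem. The example below is an assumption-heavy stand-in, not the MIT team's code: it replaces 3D rendering with two simple "viewing conditions" (a random brightness gain and camera noise, both hypothetical), uses a hand-built two-class linear model, and compares a pattern tuned on a single clean view (`naive_attack`) with one tuned on the average over sampled views (`robust_attack`).

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

# Hypothetical toy model: class 0 = turtle, class 1 = gun.
D = 64
PATTERN = np.where(np.arange(D) % 2 == 0, 1.0, -1.0)
W = np.stack([PATTERN, -PATTERN])
B = np.array([1.0, 0.0])

def predict(x):
    return int(np.argmax(W @ x + B))

def grad_log_prob(x, target):
    onehot = np.zeros(2)
    onehot[target] = 1.0
    return W.T @ (onehot - softmax(W @ x + B))

def sample_view(rng):
    """Assumed viewing conditions: random brightness gain plus sensor noise."""
    return rng.uniform(0.7, 1.3), rng.normal(0.0, 0.02, size=D)

def project(x, x0, eps):
    # Keep pixels within eps of the original and inside the valid range.
    return np.clip(np.clip(x, x0 - eps, x0 + eps), 0.0, 1.0)

def naive_attack(x0, target, step=0.005, eps=0.03, max_steps=100):
    """Baseline: stop as soon as the untransformed image is misclassified."""
    x = x0.copy()
    for _ in range(max_steps):
        if predict(x) == target:
            break
        x = project(x + step * np.sign(grad_log_prob(x, target)), x0, eps)
    return x

def robust_attack(x0, target, rng, step=0.005, eps=0.03, n_steps=10, n_views=32):
    """Ascend the log-probability of `target` averaged over sampled views."""
    x = x0.copy()
    for _ in range(n_steps):
        grad = np.zeros(D)
        for _ in range(n_views):
            gain, noise = sample_view(rng)
            # Chain rule through the view t(x) = gain * x + noise.
            grad += gain * grad_log_prob(gain * x + noise, target)
        x = project(x + step * np.sign(grad / n_views), x0, eps)
    return x

def success_rate(x, target, rng, trials=500):
    hits = 0
    for _ in range(trials):
        gain, noise = sample_view(rng)
        hits += predict(gain * x + noise) == target
    return hits / trials

rng = np.random.default_rng(0)
x0 = np.full(D, 0.5)
naive = naive_attack(x0, target=1)
robust = robust_attack(x0, target=1, rng=rng)
naive_rate = success_rate(naive, 1, rng)
robust_rate = success_rate(robust, 1, rng)
print(f"fooled under random views: naive {naive_rate:.0%}, robust {robust_rate:.0%}")
```

Both attacks share the same pixel budget `eps`. The difference is the objective: the baseline only needs to fool the model on the one clean image, while the second attack is scored on the average over many randomly sampled views, so it keeps pushing until the pattern survives changes in brightness and noise.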

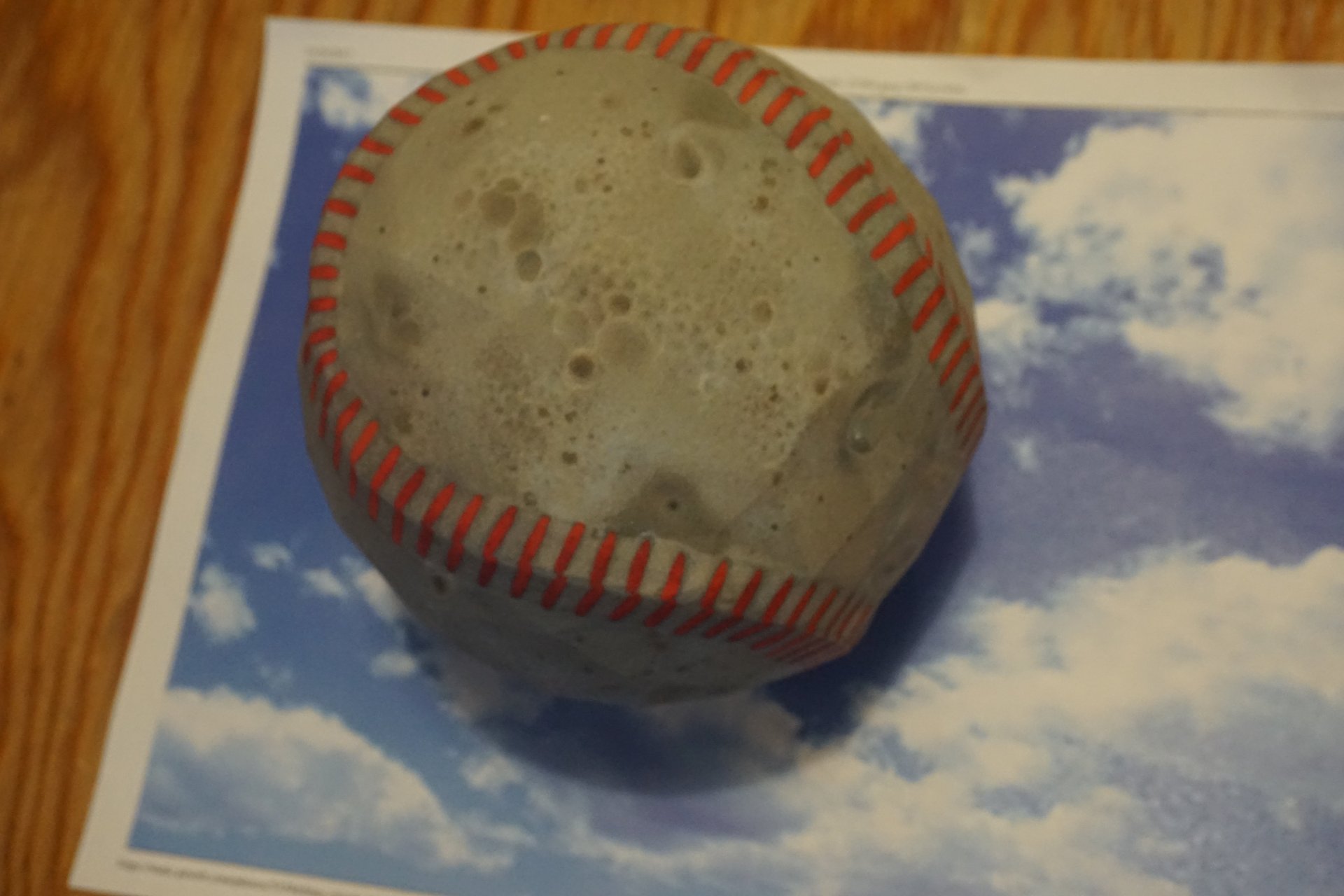

This technique, called Expectation Over Transformation, has been shown to work with nearly 100% accuracy on the 3D models that Athalye and his team have printed, including a turtle and a baseball. The fact that this even works points to the idea that something is broken in the way our artificial intelligence looks at images. The algorithms don’t fully understand what they’re looking at.

“It shouldn’t be able to take an image, slightly tweak the pixels, and completely confuse the network,” he said. “Neural networks blow all previous techniques out of the water in terms of performance, but given the existence of these adversarial examples, it shows we really don’t understand what’s going on.”

The research has its limitations: For now, the attacker needs to know the inner workings of the algorithm they’re trying to fool. However, past research has shown that attacks can work on black-box systems, or proprietary algorithms whose inner workings are unknown to the attacker. Athalye says the team will pursue that area of research next.

Artificial intelligence is projected to add trillions of dollars to the global economy and revolutionize nearly every industry it touches, but the steady drumbeat of research that finds its flaws suggests there are unknown risks in relying on this nascent technology for critical security and safety purposes.

And for all the research on AI, technology companies driving much of the research have publicly done little to secure the software they sell to customers.

A Facebook spokesperson says the company is exploring defenses against adversarial examples, pointing to a research paper published in July 2017, but hasn’t implemented anything. Cloud provider Amazon Web Services, which sells image recognition AI through APIs, said its algorithms are resistant to adversarial examples and are continuing to improve. AWS adds noise and negative images to its training data to help its algorithms learn to be more robust against the attack. Google, an early pioneer on adversarial examples, declined to comment on whether its APIs and deployed AI are secured, but has recently submitted research on the topic to conferences. Microsoft, which also sells an image recognition API service through its Azure cloud, declined to comment.

“If we don’t manage to find good defenses against these, there will come a time where they are attacked,” Athalye said. “Consider a fraud detection system that uses machine learning, these are certainly in wide use. If you can figure out how to tweak inputs so that fraudulent transactions aren’t caught, that can cause financial damage.”