Facebook isn’t acting on one of its promises to fight fake news

In 2015, Facebook said it would introduce a feature that would let users flag a story in their News Feed as “false news.” In 2016, it also said it was “testing” a feature that would let users mark a post as “fake news,” this time using the term popularized during that year’s US presidential election campaign. But in 2018, when some users tried to curb the rapid spread of disinformation about students who survived the Parkland school shooting in Florida, the option was still not available to all of them.

In 2015, Facebook said it would introduce a feature that would let users flag a story in their News Feed as “false news.” In 2016, it also said it was “testing” a feature that would let users mark a post as “fake news,” this time using the term popularized during that year’s US presidential election campaign. But in 2018, when some users tried to curb the rapid spread of disinformation about students who were victims of the Parkland school shooting in Florida, the option was still not available.

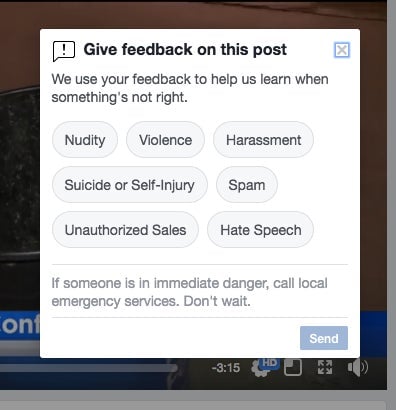

On Wednesday, Feb. 21, one Quartz reporter tried to flag a video claiming that David Hogg, one of the students calling for gun control, was a “crisis actor.” The “Give Feedback” option on the post offered her several labels to describe the problem, from “harassment” to “nudity.” It did not, however, offer a “false news” label.

When I tried to do the same thing, the label did show up, as it did on other, similar video posts, such as a conspiracy video about Hogg.

When asked about the discrepancy, Facebook said it was still in the process of rolling out the feature widely. That’s three years after the company first announced that it was trying to curtail the spread of disinformation.

It’s not clear when Facebook started including videos in its false-news reporting. In the initial 2015 announcement, it said the update would apply to “posts including links, photos, videos and status updates.” Yet three years later, the reporting function still hasn’t been rolled out widely across all post types, even as Facebook has repeatedly stressed how important it is to fight disinformation on its platform.

In October 2017, in the aftermath of the deadly shooting in Las Vegas, Quartz reported that Facebook users could not report videos that were obviously spreading conspiracy theories as “fake news,” since they were not technically “news” stories. A person familiar with the company’s strategy told Quartz at the time that Facebook was developing a video-reporting function and would roll it out as soon as possible.

Quartz asked Facebook for more details about which users can report content as “false news,” and what post types they can flag. We will update this story with any comment.

The way Facebook handles stories that users report as false has also changed. In 2016, it announced a partnership with third-party fact-checkers, who would verify whether a post actually contained misinformation and, if so, flag it as “disputed.” When the company found that these red flags did nothing to deter people from clicking on and sharing posts, it replaced them with “Related Articles.” Facebook now shows coverage of the same story from trusted news sources beneath the questionable post in a user’s News Feed.