What you think you know about the US healthcare system is wrong

Since 1900, the average American lifespan has increased by 30 years, or by 62%. That nugget comes near the beginning of a new report taking stock of the US healthcare system, published in the Journal of the American Medical Association this week, and it’s also pretty much the last piece of good news in it.

The study authors—a combination of experts from Alerion Advisors, Johns Hopkins University, the University of Rochester and the Boston Consulting Group—take a point-by-point look at why healthcare costs so much, why our outcomes are comparatively poor, and what accounts for the growth in medical expenditures.

In the process, they bring to light a number of surprising realities that debunk popular misconceptions about health spending.

Here are some of the juiciest:

The aging population doesn’t account for most medical spending.

Actually, chronic diseases such as heart disease and diabetes in people younger than 65 drive two-thirds of medical spending. About 85% of medical costs are spent on people younger than 65, though people do spend more on healthcare as they age.

“Between 2000 and 2011, increase in price (particularly of drugs, medical devices, and hospital care), not intensity of service or demographic change, produced most of the increase in health’s share of GDP,” the authors write.

The biggest-spending disease with the fastest growth rate was hyperlipidemia—high cholesterol and triglycerides—for which spending grew by 14.4% annually between 2000 and 2010.

The US doesn’t have the best healthcare system in the world.

Obamacare opponents frequently claim otherwise when advocating for the status quo, but in fact much of the southern US has a life expectancy lower than the average for the OECD, a group of industrialized nations commonly used for comparison. And while Americans rate their experiences with the US healthcare system as generally positive, people in other OECD countries are just as satisfied, even though their medical care is much cheaper than ours.

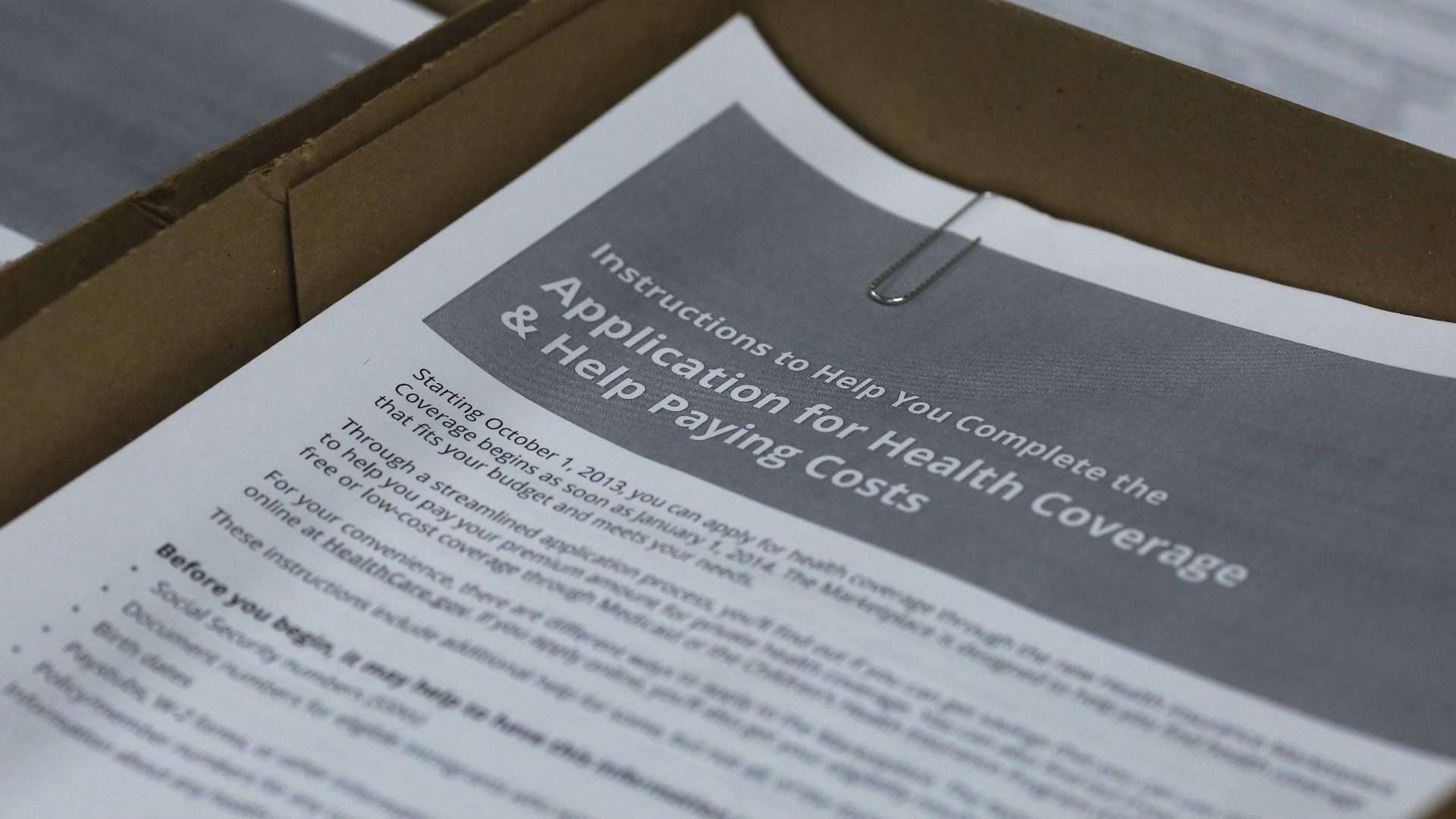

Spending more on IT is not necessarily making our health system more cost-efficient.

Both government and private spending on IT have been ramping up over the last decade, and together they now add up to about 3% of hospital or doctors’ office expenditures.

In short, your doctor now has an iPad! But does that actually help patients?

It’s true that some health systems, such as the VA and Kaiser Permanente, have used tools like electronic medical records to better coordinate care (essentially resulting in less paperwork for patients and better communication among specialists).

But health IT’s impact on cost reduction is less apparent, the authors note. In the US, billing and administrative costs still account for 13% of healthcare revenue, compared with 6.6% in Canada, to name just one example.

“Information technology investments may have reduced costs from what they would otherwise have been,” the authors note, “and likely only partially account for their enormity, but have not made U.S. system administration efficient by any measure.”

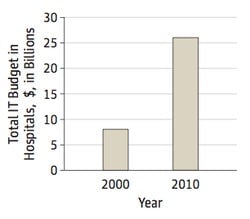

The rate of increase in medical costs has actually slowed.

Healthcare is expensive and getting more so, but since 1970 the yearly rate of increase in healthcare costs has slowed, particularly since 2002. The authors attribute part of this slowdown to the recession.

Consumers aren’t the ones paying for an increasing share of medical costs.

Though it may often feel as if out-of-pocket expenses are growing faster than the overall cost of healthcare, Medicare, Medicaid and federal employee plans have actually picked up a growing share of the tab.

Personal out-of-pocket spending has fallen by roughly half since 1980 (from 23% to 11%). Meanwhile, commercial payers (insurance companies) and the government (through Medicare and Medicaid) together are now responsible for 90% of hospital and physician costs.

Ultimately, the authors conclude that physicians, patients, and insurers all want different things from healthcare. The patient wants to see the best doctors and get the best treatments available, the insurer wants to save money and send the patient to less-expensive doctors, and doctors want to preserve autonomy and ensure they’re getting paid fully for all the services they provide. How we resolve the tension between those different desires will determine what American medicine looks like—and how much it costs—in future years.