Why news organizations need to invest in better linking and tagging

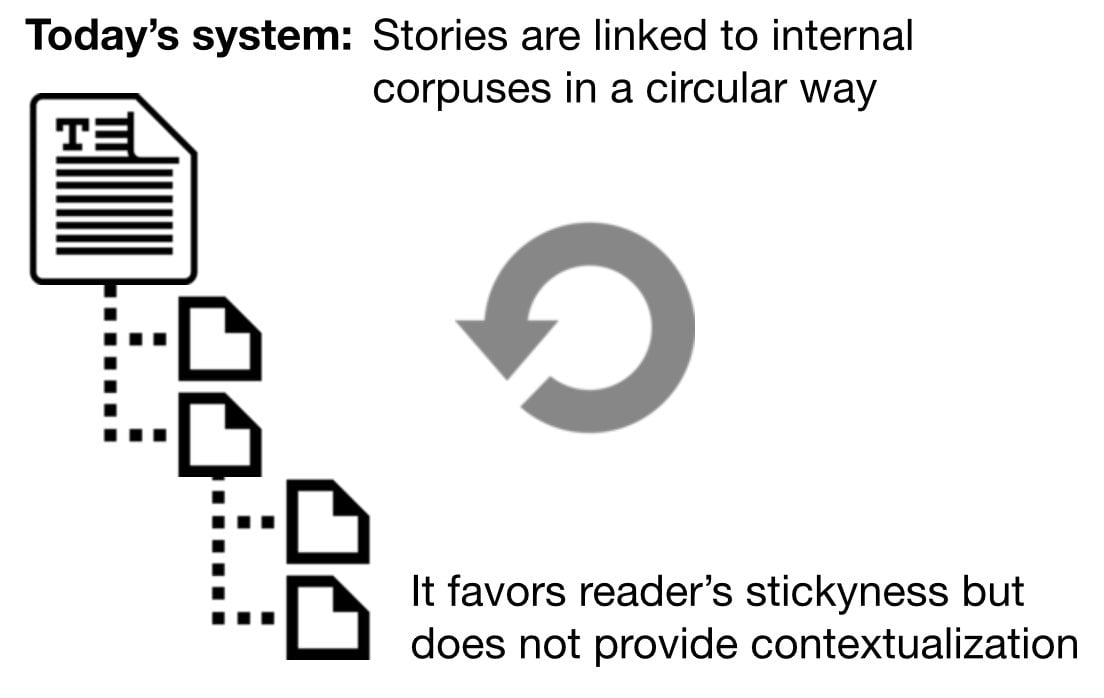

Most media organizations are still stuck in version 1.0 of linking. When they produce content, they assign tags and add links that point mostly to other internal content. This is done out of fear that readers would escape for good if doors were opened too wide. Assigning tags is not an exact science: I recently spotted a story about the new pregnancy in the British royal family; it was tagged “demography,” as if it were a piece about Germany’s weak fertility rate.

Today’s ways of laying out tags and structuring topics are a mere first step; they are compulsory tools to keep the reader within the publication’s perimeter. The whole mechanism is improving, though. Some publications already use reader data profiling to dynamically assign related stories based on presumed affinities: someone reading a story about General Electric might get a different set of related stories if she had been profiled as working in legal or finance rather than engineering.
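To make the General Electric example concrete, here is a minimal sketch of profile-based related stories. Everything in it is an assumption made for illustration: the `Story` class, the `PROFILES` table, the sample headlines and the tag-overlap scoring are hypothetical and do not describe any publication's actual system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    title: str
    tags: frozenset


# Hypothetical reader profiles: presumed interests keyed by profession.
PROFILES = {
    "legal": frozenset({"legal", "patents", "regulation"}),
    "finance": frozenset({"finance", "earnings", "debt"}),
    "engineering": frozenset({"engineering", "aviation", "manufacturing"}),
}

# Hypothetical candidate stories, listed in editorial order.
STORIES = [
    Story("GE settles patent dispute", frozenset({"legal", "patents"})),
    Story("GE quarterly earnings beat forecasts", frozenset({"finance", "earnings"})),
    Story("Inside the new GE jet engine", frozenset({"engineering", "aviation"})),
    Story("GE refinances its debt", frozenset({"finance", "debt"})),
]


def related_stories(candidates, reader_interests, limit=2):
    """Rank candidates by the number of tags shared with the reader's interests."""
    scored = [(len(story.tags & reader_interests), story) for story in candidates]
    # sorted() is stable, so stories with equal scores keep their editorial order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [story.title for _, story in ranked[:limit]]


if __name__ == "__main__":
    for profession, interests in PROFILES.items():
        print(profession, "->", related_stories(STORIES, interests))
```

A real system would presumably infer interests from reading behavior rather than from a fixed table, but the principle is the same: one story, several possible sets of related links.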

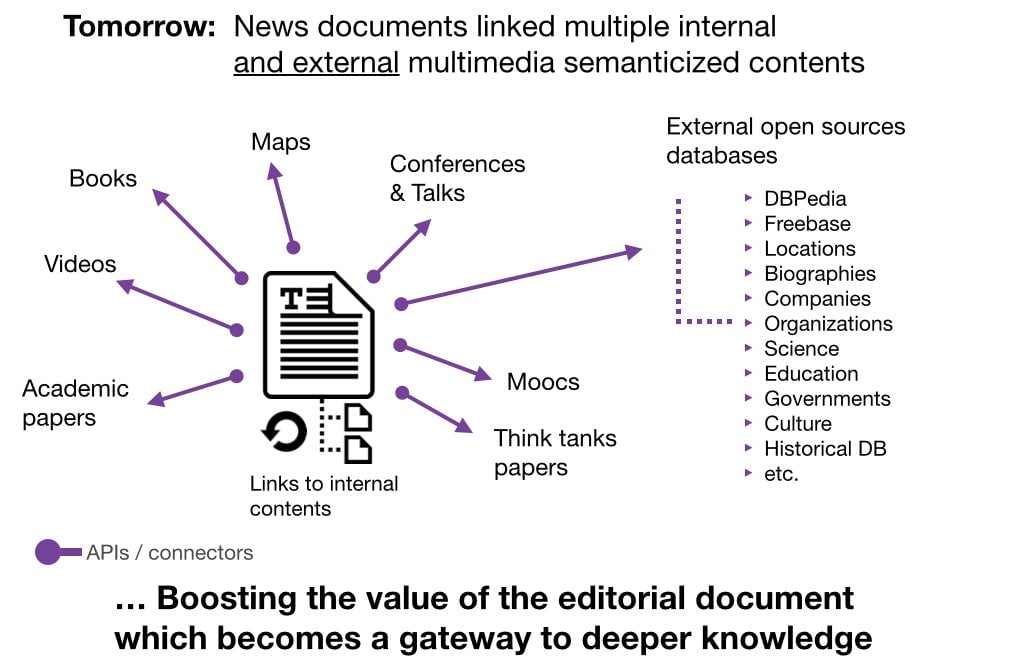

But there is much more to come in that field. Two factors are at work: APIs and semantic improvements. APIs (Application Programming Interfaces) act like the receptors of a cell that exchanges chemical signals with other cells. They are the way to connect a wide variety of content to the outside world. A story, a video, a graph can “talk” to and be read by other publications, databases, and other “organisms.” But first, the content has to pass through semantic filters. From a text, the most basic tools extract sets of words and expressions such as named entities, surnames, and places.

A higher level involves extracting meanings like “X acquired Y for Z million dollars” or “X has been appointed finance minister.” But what about a video? Some go with granular tagging systems; others, such as TED Talks, come with multilingual transcripts that provide valuable raw material for semantic analysis. But the bulk of content remains stuck in a dumb form: minimal and most often unstructured tagging. Such content requires complex processing to become “readable” by the outside world. For instance, an untranscribed video seen as interesting (say a Charlie Rose interview) will have to undergo a speech-to-text analysis to become usable. This process requires both human curation (finding out what content is worth processing) and sophisticated technology (transcribing a speech by someone speaking super-fast or with a strong accent).
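The “X acquired Y for Z million dollars” kind of extraction can be illustrated with a toy sketch. It assumes plain regular expressions and made-up company and person names; the patterns `ACQUISITION` and `APPOINTMENT` and the function `extract_facts` are hypothetical, and real semantic tools rely on far more robust language processing than this.

```python
import re

# One or more capitalized words, e.g. "Acme Corp" (a deliberately naive notion of a name).
NAME = r"[A-Z][\w&.]*(?: [A-Z][\w&.]*)*"

# Matches "X acquired Y for Z million" (amount assumed to be in dollars).
ACQUISITION = re.compile(
    rf"(?P<buyer>{NAME}) acquired (?P<target>{NAME}) for "
    r"\$?(?P<amount>\d+(?:\.\d+)?) (?P<unit>million|billion)"
)

# Matches "X has been appointed <lowercase role>".
APPOINTMENT = re.compile(
    rf"(?P<person>{NAME}) has been appointed (?P<role>[a-z]+(?: [a-z]+)*)"
)


def extract_facts(text):
    """Return structured facts found in the text as a list of dictionaries."""
    facts = []
    for match in ACQUISITION.finditer(text):
        scale = 1_000_000 if match["unit"] == "million" else 1_000_000_000
        facts.append({
            "type": "acquisition",
            "buyer": match["buyer"],
            "target": match["target"],
            "amount_usd": float(match["amount"]) * scale,
        })
    for match in APPOINTMENT.finditer(text):
        facts.append({
            "type": "appointment",
            "person": match["person"],
            "role": match["role"],
        })
    return facts


if __name__ == "__main__":
    sample = (
        "Acme Corp acquired Widget Works for 250 million dollars. "
        "Jane Doe has been appointed finance minister."
    )
    for fact in extract_facts(sample):
        print(fact)
```

The sketch breaks easily: a capitalized word just before the buyer's name would be swallowed into it, and any rephrasing escapes the patterns. That fragility is precisely why this level of extraction calls for more sophisticated technology.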

Once these issues are solved, a completely new world of knowledge emerges. Enter “Semantic Culturomics.” The term was coined by two scholars working in France, Fabian Suchanek and Nicoleta Preda. Here is a short abstract of their paper (thanks to Christophe Tricot for the tip):

Newspapers are testimonials of history. The same is increasingly true of social media such as online forums, online communities, and blogs.

Semantic Culturomics [is] a paradigm that uses semantic knowledge bases in order to give meaning to textual corpora such as news and social media. This idea is not without challenges, because it requires the link between textual corpora and semantic knowledge, as well as the ability to mine a hybrid data model for trends and logical rules. […] Semantics turns the texts into rich and deep sources of knowledge, exposing nuances that today’s analyses are still blind to. This would be of great use not just for historians and linguists, but also for journalists, sociologists, public opinion analysts, and political scientists.

In other words, and viewed through my own glasses, these two scientists suggest going from this:

To this:

Now picture this: A hypothetical big-issue story about GE’s strategic climate change thinking, published in the Wall Street Journal, the FT, or The Atlantic, suddenly opens to a vast web of knowledge. The text (along with graphics, videos, etc.) provided by the news media staff is amplified by access to three books on global warming, two TED Talks, several databases containing references to places and people mentioned in the story, an academic paper from Knowledge@Wharton, a MOOC from Coursera, a survey from a Scandinavian research institute, a National Geographic documentary, etc. Since, supposedly, all of the above is semanticized and speaks the same lingua franca as the original journalistic content, the process is largely automated.

Great—but where is the value for the news organization, you might ask? First of all, a trusted publication (and a trusted byline) offering such super-curation to its readers is much more likely to attract a solvent audience: readers willing to pay for a service no one else offers. Second, money-making business-to-business intelligence services can be derived from modern tagging, structuring and linking. Such products would carry great value because they would be unique, based on trust, selection and relevance.

You can read more of Monday Note’s coverage of technology and media here.