Facebook warned people that a popular fake news detector might be “unsafe”

Shortly after Facebook pledged to better detect fake news, the company briefly handicapped a third-party plugin that claims to do just that.

Two weeks ago, web designer Daniel Sieradski created B.S. Detector, a browser extension that alerts users to the presence of unreliable news sources, as a “proof of concept to counter [Facebook CEO] Mark Zuckerberg’s dubious claim that Facebook wasn’t in a position to really deal with fake news,” he tells Quartz. Since then, the plugin has been downloaded and installed about 25,000 times.

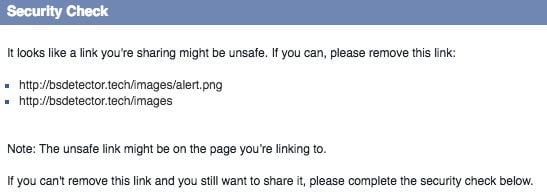

Today, however, some users attempting to share links to the B.S. Detector website on Facebook were interrupted by a warning from the social media company. “It looks like a link you’re sharing might be unsafe,” a pop-up read when Quartz attempted to share B.S. Detector on Facebook this afternoon. “If you can, please remove this link.”

Facebook appeared to disable this warning on B.S. Detector around 2:30pm ET. The company did not respond to a request for comment.

Sieradski said he thinks Facebook may have been embarrassed by how quickly he threw together B.S. Detector (he says it took an hour) after the company repeatedly insisted it could not vet news better.

B.S. Detector works by scanning a given webpage for links to external sites and comparing them against a database Sieradski created of domains flagged as unreliable. One classification of unreliability is “extreme bias,” Sieradski said, which he described as “sites that are basically so blinded by their own bias that they’re willing to leave out contextual information.” He said he is “refining that list now and looking to partner with media watchdogs to create profiles on the sites we list backed by research and legitimate methodologies instead of just being arbitrary.” B.S. Detector aims to be nonpartisan.
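For readers curious what that kind of check looks like in practice, here is a minimal sketch in Python. It is not Sieradski’s code, which runs as a browser extension; the domain list, category labels, and function names below are all hypothetical and exist only to illustrate the general idea of collecting a page’s outbound links and looking up each domain in a flagged list.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical sample list for illustration only; the extension's
# actual list of domains and its categories are not reproduced here.
FLAGGED_DOMAINS = {
    "example-fake-news.test": "fake news",
    "extremely-biased.test": "extreme bias",
}


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def domain_of(url):
    """Return the lowercase hostname of a URL, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def flag_links(html, page_url, flagged=FLAGGED_DOMAINS):
    """Return (link, category) pairs for external links to flagged domains."""
    collector = LinkCollector()
    collector.feed(html)
    page_domain = domain_of(page_url)
    results = []
    for link in collector.links:
        domain = domain_of(link)
        # Skip relative links (no domain) and links back to the same site.
        if domain and domain != page_domain and domain in flagged:
            results.append((link, flagged[domain]))
    return results
```

Calling `flag_links(page_html, "https://social.test/feed")` on a page’s HTML would return only the links whose domains appear in the list, each paired with its category. An exact-match lookup like this one would miss subdomains other than `www.`, which is one reason a real list needs ongoing curation.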

In mid-November, Zuckerberg rejected the notion that Facebook’s team should become “arbiters of truth” and pushed back on reports that fake news had proliferated on the platform. Later that month, Zuckerberg offered a more measured view, saying Facebook took misinformation “seriously,” and outlined steps the company would take to better detect it. Facebook has also banned fake news publishers from its advertising network, in an attempt to stamp out the cottage industry of publishers who were distributing inaccurate and incendiary content to make a quick buck.

But the company has stopped short of pledging to filter all fake news from its platform, though Facebook’s head of artificial intelligence research recently said that was possible. Facebook’s problem with fake news is also arguably a side effect of a bigger problem, which is the “filter bubble” of news that many people live in today. It’s become dangerously easy for people to consume only news and opinions that suit their existing biases, particularly when it comes through Facebook’s like-based feeds.

Meanwhile, Sieradski has found a handful of contributors to help improve his open source project, and says he is also reaching out to media watchdog groups about possible collaborations. “I’ve watched as ideas that used to lurk in corners of the internet have moved into the mainstream, and watched this year as this massive disinformation has resulted in Americans being misinformed,” he said.