A group backed by Google and Microsoft wants to make “nutrition labels” for AI content

The Coalition for Content Provenance and Authenticity hopes to make AI-labeled images as common as HTTPS on URLs

With more than 2 billion voters expected to head to the polls in 50 countries this year, the industry group Coalition for Content Provenance and Authenticity (C2PA) wants to lead the fight against deepfakes by using metadata and provenance technology, which tracks the origins of an image.

The idea is that AI-generated content should have a label like nutrition labels for food, where a consumer is not prohibited from buying a sugary cereal, but can walk into the store and know what’s in it and make their own decision, said Andy Parsons, co-founder of C2PA.

Google announced Thursday, Feb. 8 that it is joining the coalition, a move the industry hopes will signal to others the importance of labeling AI-made content. The group, which now has 100 members and “a lot of interest,” aims to have all media carry content credentials.

“If you’re in news consumption mode, you should expect that all of your images, photographs, packages of text, video, etc, would have content credentials proving that something comes from where it purports to be,” Parsons said. “That’s how you know the lock icon [seen with HTTPS on the URL] in your browser started out. Initially, it was the exception. And now it’s so common and expected that you no longer have a lock icon.” Making content credentials that routine could leave bad actors with less room to operate.

How tech companies are combating fake images by bad actors

There’s no single way to combat deepfakes. Tech companies have been eager to get ahead of regulators in finding solutions, whether through disclosures or watermarks. That makes sense: with elections being so public, they want to protect their public image.

The coalition, which was co-started by Adobe, was born in 2019 out of the idea of creating a robust kind of content provenance that connects the “ingredients and recipe” to the content. Around that time, a video depicting Nancy Pelosi slurring her words and sounding intoxicated, which was developed using machine-learning algorithms, was circulating online, and it inspired the group to think about marking whether or not something was AI-generated. In 2021, Adobe, together with companies like Microsoft and the BBC, created open-source implementations aimed at doing so.

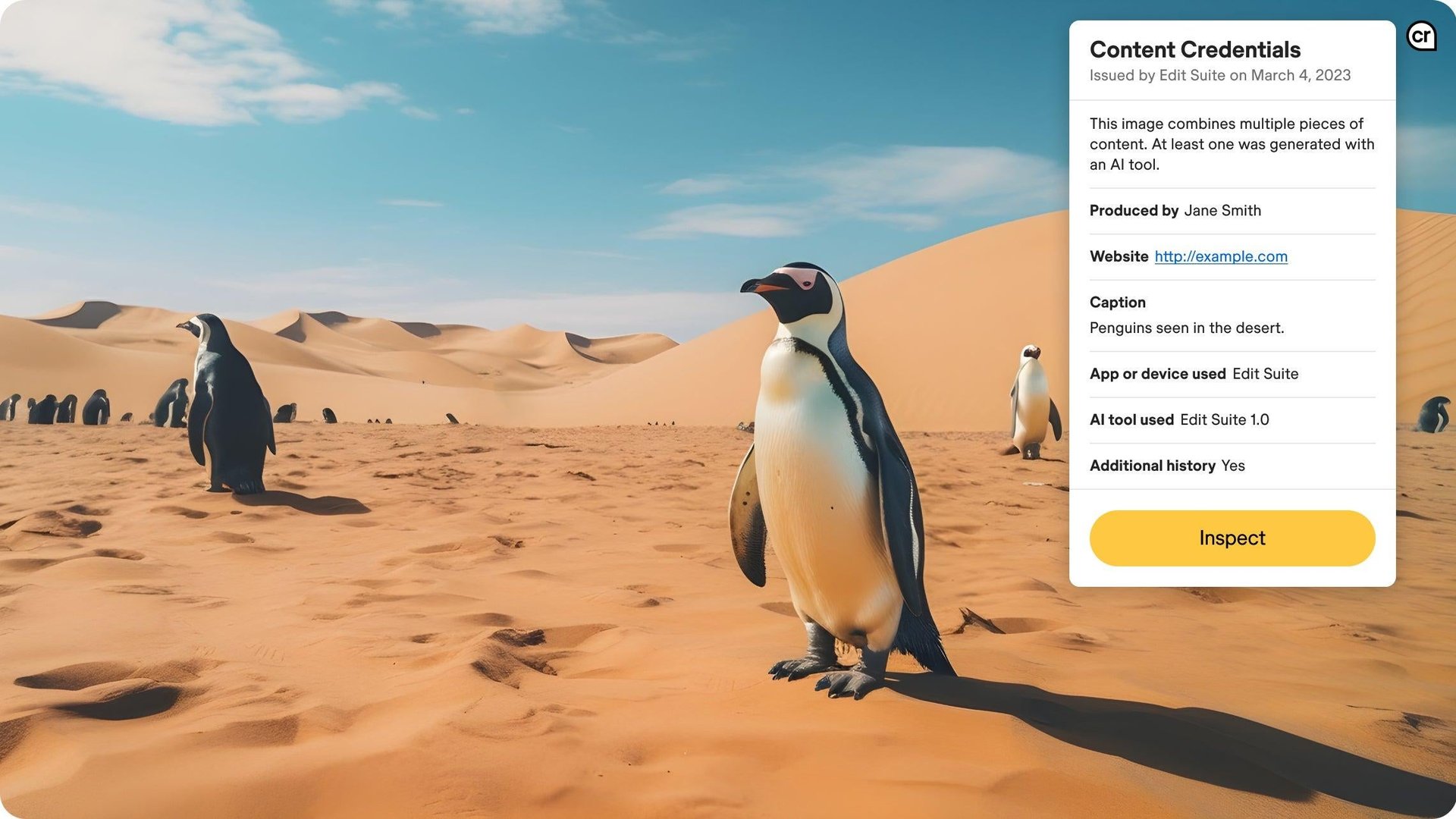

So, how does it work? The C2PA standard is an open specification for tamper-evident metadata: information about what tool, such as an AI model or a camera, made something is effectively attached to the image. The technology is open source, too. If the metadata is intentionally stripped off, that will still be clear in the history, and the metadata can be recovered via the cloud. On the visual front, consumers see a “CR” symbol on the image, which they can click to see the “ingredients.”
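To make the idea of tamper evidence concrete, here is a minimal sketch in Python. It is not the C2PA format or any coalition member's code: the function names, the key, and the record layout are all hypothetical, and a shared-secret HMAC stands in for the certificate-based signatures a real system would use. It only illustrates the general principle that a signed record tied to a hash of the image stops verifying if either the image or the record is changed.

```python
import hashlib
import hmac
import json

# Hypothetical signing key for illustration only. A real provenance system
# would use certificate-based signatures, not a shared secret like this.
SIGNING_KEY = b"demo-key-not-for-real-use"


def make_manifest(image_bytes: bytes, tool: str) -> dict:
    """Build a toy provenance record bound to the exact image bytes."""
    claim = {
        "tool": tool,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    # Serialize deterministically so the same claim always signs the same way.
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    """Return True only if neither the image nor the claim was altered."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was edited after signing
    actual_hash = hashlib.sha256(image_bytes).hexdigest()
    return hmac.compare_digest(actual_hash, manifest["claim"]["image_sha256"])


if __name__ == "__main__":
    original = b"pretend these are image bytes"
    manifest = make_manifest(original, tool="hypothetical-image-generator")
    print(verify_manifest(original, manifest))              # untouched image
    print(verify_manifest(original + b"edited", manifest))  # altered image
```

In this sketch, verification fails both when the image bytes change and when someone rewrites the "tool" field without re-signing, which is the sense in which such a label is tamper-evident rather than tamper-proof.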

But there’s no hard rule requiring companies to adopt these labels. In 2023, Adobe, which initiated the group, rolled out its generative AI model Firefly, which attaches C2PA’s Content Credentials to every image it generates and to certain AI-driven images in Photoshop. Microsoft later introduced Content Credentials to label all AI-generated images created via Bing Image Creator. OpenAI also announced this week that images generated in ChatGPT include metadata using C2PA specifications.

Google doesn’t yet have a policy on how it will adopt AI labels for its images. The company also notes that there are multiple ways to combat fake images, including embedding digital watermarks into AI-generated images and audio, political ad disclosures, and YouTube content labels that require creators to mark when videos are altered or synthetic.

What’s next

C2PA says it is seeing adoption from camera makers like Sony and Nikon, which are producing cameras that offer authentication technology. Parsons said he would like to see mobile device manufacturers join the coalition and begin building AI labels into phones, where consumers could use them directly.

Given that generative AI produces content in several modes, such as video, images, and audio, the coalition is also researching what an AI label for audio would look like.