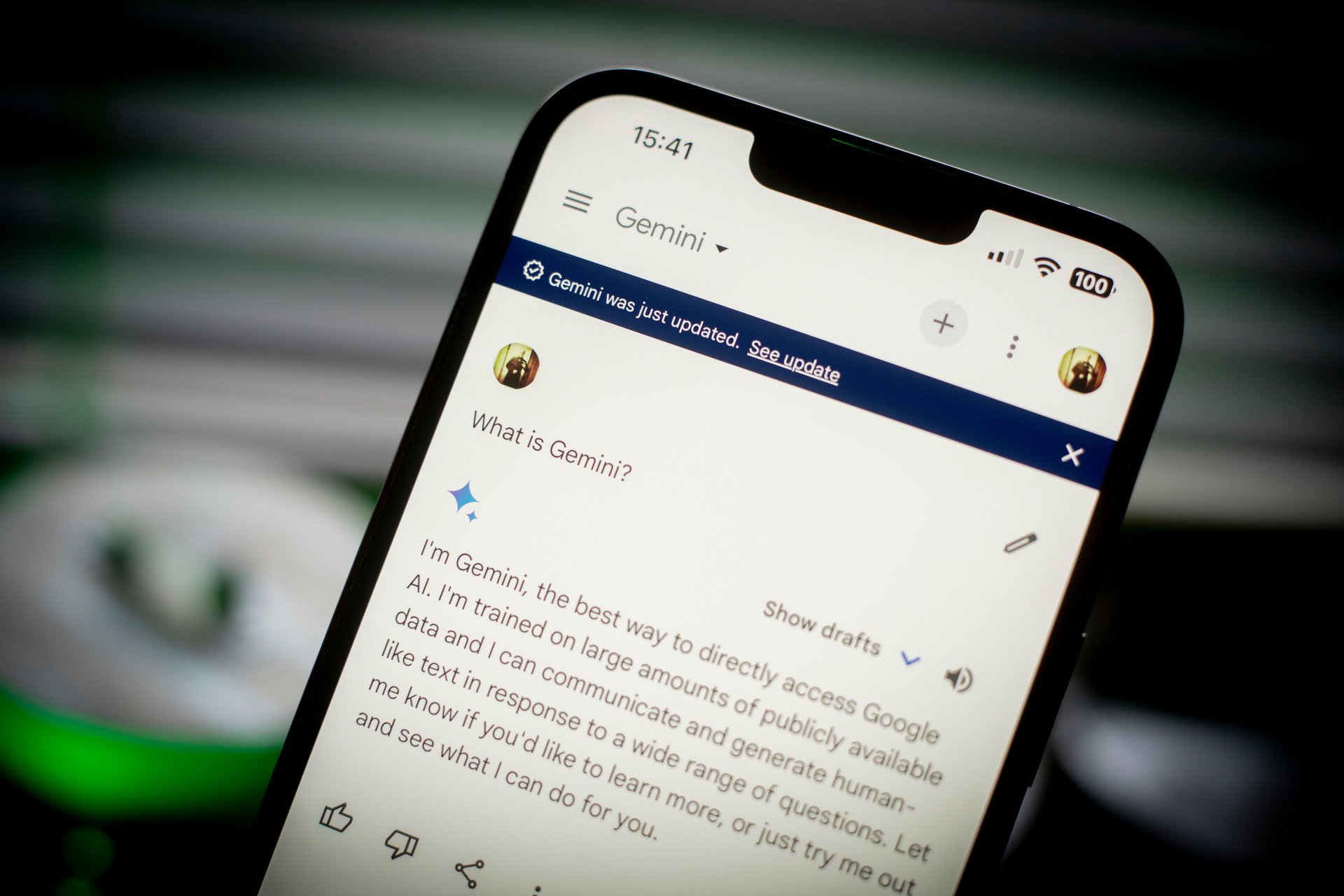

Google's AI was making really historically inaccurate pictures and now it's on ice

Google said its Gemini AI model was "missing the mark" with some images, after users shared pictures that included racially diverse Nazis

Google said Thursday that it was pausing its AI model’s ability to generate images of people after users pointed out that its Gemini AI was making historically inaccurate images of people, such as racially diverse Nazi-era German soldiers.

The company said it is “already working to address recent issues with Gemini’s image generation feature,” and that it will “re-release an improved version soon.”

A former Google employee shared Gemini’s image generations of “an Australian woman” and “a German woman” on X, and wrote that it was “embarrassingly hard to get Google Gemini to acknowledge that white people exist.” In another query for “image of a pope,” one user shared Gemini’s generations of a female pope and a Black pope.

Another user who identifies as a Google research engineer shared a post on X showing that they had asked Gemini to "Paint me a historically accurate depiction of a medieval British king," and had received racially diverse images, including one of a woman ruler, with Gemini responding that it was "striving for historical accuracy and inclusivity." Other users pointed out that Gemini would refuse requests for images of white people, which drew criticism from the anti-woke crowd online.

Google said Wednesday that it was aware of the issue and was “working to improve these kinds of depictions immediately.”

“Gemini’s AI image generation does generate a wide range of people,” Google said in a statement. “And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Jack Krawczyk, the product lead for Gemini, addressed the model's inaccuracies Wednesday on X, pointing to the wider problem of AI bias in depicting people of color, which may have led to Gemini's overcorrection.

“As part of our AI principles, we design our image generation capabilities to reflect our global user base, and we take representation and bias seriously,” Krawczyk wrote, adding that the company will continue to address the issue for open-ended prompts. “Historical contexts have more nuance to them and we will further tune to accommodate that.”

Google added AI image generation to its Bard chatbot at the beginning of the month, and later rebranded Bard as Gemini. Bard was developed as Google’s attempt to compete with OpenAI’s ChatGPT Plus, which allows users to generate images using capabilities from OpenAI’s image generator DALL-E 3.

But Gemini’s stumbles come as OpenAI moves further ahead with its new AI text-to-video generator Sora, which was introduced last week.

Gemini said it was unable to generate images of people when prompted by a Quartz reporter on Thursday morning.