Nvidia has a 'very big' new AI chip called Blackwell. Here's what to know

Nvidia CEO Jensen Huang announced the AI chipmaker's highly anticipated new processor at the company's GPU Technology Conference

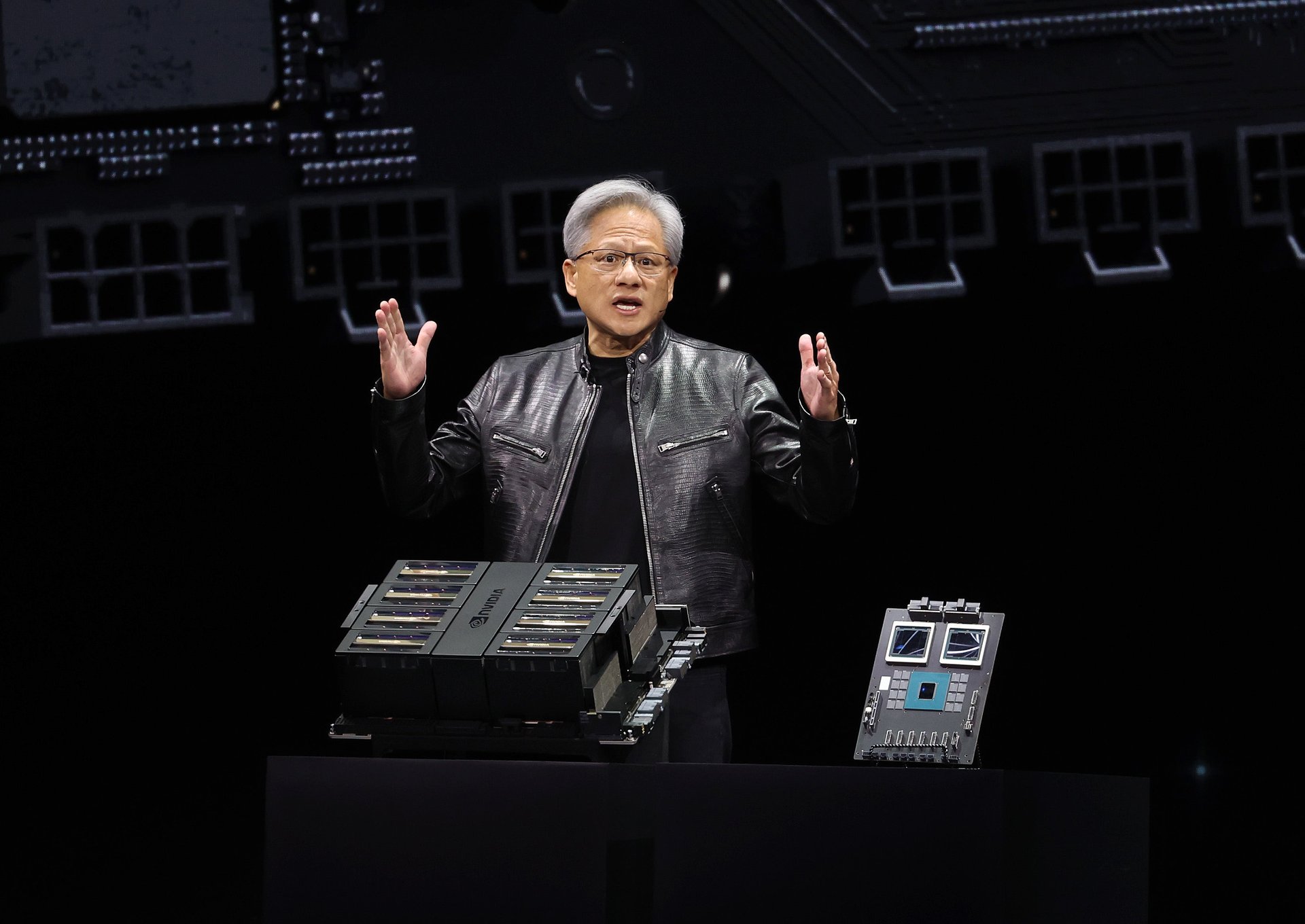

Nvidia, the company responsible for the most sought-after hardware in the world, unveiled an even more powerful next-generation version of its AI chip Monday, at a conference dubbed the “Woodstock of AI” by employees and analysts.

Nvidia CEO Jensen Huang announced the AI chipmaker’s highly anticipated new processor, Blackwell, saying tech giants like Microsoft and Google are already preparing for its arrival. Huang announced the new Blackwell chip at Nvidia’s annual GPU Technology Conference, or GTC, in San Jose, California.

Here’s what to know about Nvidia’s new AI chip.

What is Nvidia’s new Blackwell AI chip?

The Blackwell computing platform includes the new B200 chip, made up of 208 billion transistors. It will be faster and more powerful than its predecessor, the hugely in-demand, $40,000 H100 chip, which is built on the Hopper architecture named after computer scientist Grace Hopper and is behind some of the world’s most powerful AI models. The Blackwell platform is named after mathematician David Blackwell, who was the first Black scholar inducted into the National Academy of Sciences.

“Hopper is fantastic, but we need bigger GPUs,” Huang said, referring to graphics processing units, as AI chips are known.

“So ladies and gentlemen,” Huang said, “I’d like to introduce you to a very big GPU.”

The B200 will also come in a few options for Nvidia’s customers, the company said, including as part of the Nvidia GB200 Grace Blackwell Superchip, which connects two of the B200 GPUs with an Nvidia Grace CPU, or central processing unit.

How fast is Blackwell, and what does it mean for AI?

Nvidia says Blackwell is 2.5 times faster than Hopper at training AI (feeding AI models data to improve their performance) and five times faster at inference, the process by which AI models draw conclusions from new data.

The new Blackwell computing platform will be 25 times more energy efficient than its predecessor, Nvidia said.

“The amount of energy we save, the amount of networking bandwidth we save, the amount of wasted time we save, will be tremendous,” Huang said. “The future is generative… which is why this is a brand new industry. The way we compute is fundamentally different. We created a processor for the generative AI era.”

Huang said the world needs “bigger GPUs” as AI models become larger and more powerful. Nvidia’s new Blackwell computing platform, which connects its Blackwell chips into products such as the superchip and the company’s GB200 NVL72, will give AI developers more computing power to train AI models on multimodal data, which includes images, graphs, charts, and even video.

What companies want to buy Nvidia’s Blackwell chips?

The world’s top tech companies are already lined up for Nvidia’s new chip. Google parent Alphabet, Meta, Microsoft, OpenAI, and Tesla are among the firms Nvidia said are expected to adopt Blackwell soon.

OpenAI CEO Sam Altman said Blackwell “will accelerate our ability to deliver leading-edge models,” while Tesla CEO Elon Musk, who launched OpenAI rival xAI, said that “there is currently nothing better than Nvidia hardware for AI.”

Microsoft and Meta are already Nvidia’s largest customers for its H100 chips — both spending $9 billion on the chips in 2023. Nvidia’s success with its H100 chip saw it become the first chipmaker to reach a $2 trillion market cap. It even edged out its customer, Amazon, to become the third-most valuable company in the world. It also beat Wall Street’s expectations for fourth-quarter earnings, reporting revenues of $22 billion — up almost 270% from the previous year.

Nvidia stock is up 80% so far this year, and up 236% over the last 12 months.

Nvidia’s new Blackwell chip will likely hold off any threats to its business from some customers developing chips of their own, including Amazon, which has worked on two chips called Inferentia and Trainium, and Google, which has been working on its Tensor Processing Units.