What if, in 1817, in the midst of the Industrial Revolution, we had known the dangers of global warming? We would have created institutions to study man’s impact on the environment. We would have enacted national laws and signed international treaties, agreeing to constrain harmful activities and promote sound ones for the good of humanity. If we had been able to predict our future, the world as it exists 200 years later would look very different.

In 2017, we are at the same critical juncture in the development of artificial intelligence, except that this time we can see the dangers on the horizon.

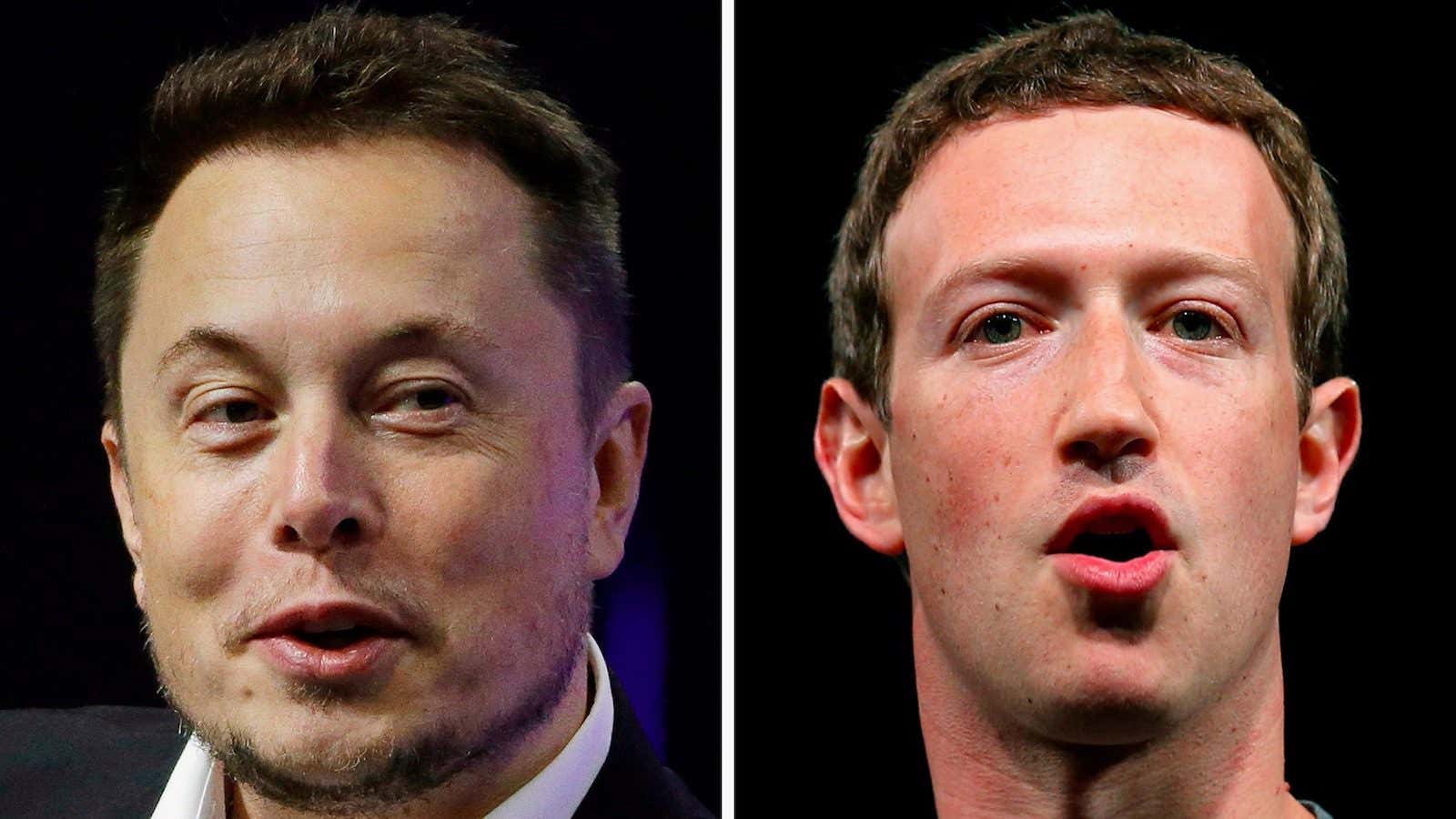

“AI is the rare case where I think we need to be proactive in regulation instead of reactive,” Elon Musk recently cautioned at the US National Governors Association annual meeting. “AI is a fundamental existential risk for human civilization…but until people see robots going down the street killing people, they don’t know how to react.”

However, not everyone thinks the future is that dire, or that close. Mark Zuckerberg responded to Musk’s dystopian statement in a Facebook Live post. “I think people who are naysayers and try to drum up these doomsday scenarios—I just, I don’t understand it,” he said while casually smoking brisket in his backyard. “It’s really negative and in some ways I actually think it is pretty irresponsible.” (Musk snapped back on Twitter the next day: “I’ve talked to Mark about this. His understanding of the subject is limited.”)

So, which of the two tech billionaires is right? Actually, both are.

Musk is correct that AI’s advances pose real dangers, but his apocalyptic predictions distract from the more mundane but more immediate issues the technology presents. Zuckerberg is correct to emphasize AI’s enormous benefits, but he is too complacent, focusing on the technology that exists now rather than on what might exist in 10 or 20 years.

We need to regulate AI before it becomes a problem, not afterward. This isn’t just about stopping shady corporations or governments from building autonomous killer robots in secret underground laboratories: We also need a global governing body to answer all sorts of questions, such as who is responsible when AI causes harm, and whether AIs should be granted certain rights, as humans are.

We’ve made it work before: in space. The 1967 Outer Space Treaty is a piece of international law that restricts the ability of countries to colonize or weaponize celestial bodies. At the height of the Cold War, and shortly after the first space flight, the US and USSR realized an agreement was desirable given the shared existential risks of space exploration. Following negotiations over several years, the treaty was adopted by the UN before being ratified by governments worldwide.

The treaty was adopted as a precautionary measure, many years before we had the technology to do the things it restricts, not as a reaction to a problem that already existed. AI governance needs to work the same way.

Laying down the law

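In the middle of the 20th century, science-fiction writer Isaac Asimov formulated three Laws of Robotics; decades later, he added a fourth, the “Zeroth Law,” which takes precedence over the other three.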

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Asimov’s fictional laws would arguably be a good basis for an AI-ethics treaty, but he started in the wrong place. We need to begin by asking not what the laws should be, but who should write them.

Some governments and private organizations are making early attempts to regulate AI more systematically. Google, Facebook, Amazon, IBM, and Microsoft recently announced that they had formed the Orwellian-sounding “Partnership on Artificial Intelligence to Benefit People and Society,” whose goals include supporting best practices and creating an open platform for discussion. Its partners now include NGOs and charities such as UNICEF, Human Rights Watch, and the ACLU. In September 2016, the US government released its first-ever guidance on self-driving cars. A few months later, the UK’s Royal Society and British Academy, the country’s national academies for the sciences and for the humanities and social sciences, published a report calling for the creation of a new national body in the UK to “steward the evolution” of AI governance.

These reports show a growing consensus in favor of oversight of AI, but there is still little agreement on how that oversight should actually be implemented, beyond academic white papers circulating in government inboxes.

In order to be successful, AI regulation needs to be international. If it’s not, we will be left with a messy patchwork of different rules in different countries that will be complicated (and expensive) for AI designers to navigate. If there isn’t a legally binding global approach, some tech companies will also try to operate their businesses from wherever the law is the least restrictive, just as they do already with tax havens.

The solution also needs to involve players from both the public and private sectors. Although the tech world’s Partnership on Artificial Intelligence plans to invite academics, non-profits, and specialists in policy and ethics to the table, it would benefit from the involvement of elected governments, too: While tech companies are answerable to their shareholders, governments are answerable to their citizens. The UK’s Human Fertilisation and Embryology Authority is a good model; it brings together lawyers, philosophers, scientists, government, and industry players to set rules and guidelines for the fast-developing fields of fertility treatment, gene editing, and biological cloning.

The AI police

Creating institutions and forming laws are only part of the answer: The other big issue is deciding who can and should enforce them.

For example, even if organizations and governments can agree on which party should be liable when AI causes harm (the company, the coder, or the AI itself), which institution should hold the perpetrator accountable, police the policy, deliver a verdict, and impose a sentence? Rather than create a new international police force for AI, a better solution is for countries to agree to regulate themselves under the same ethical banner.

The EU manages the tension between the need for international standards and the desire of individual countries to set their own laws by issuing directives that are “binding as to the result to be achieved” but leave national governments free to choose how to get there. This can mean setting regulatory floors or ceilings: An EU-wide maximum speed limit, for instance, would let each member state set any limit at or below that level.

Another solution is to write “model laws” for AI: Experts from around the world pool their talents to draft a set of regulations that countries can then adopt in whole or in part. This helps less-wealthy nations by saving them the cost of drafting legislation from scratch, while respecting their autonomy by not forcing them to adopt every provision.

* * *

The world needs a global treaty on AI, as well as other mechanisms for setting common laws and standards. We should be thinking less about how to survive a robot apocalypse and more about how to live alongside AI, and that will require some rules that everyone plays by.