Do our faces show the world clues to our sexuality?

Last week, The Economist published a story around Stanford Graduate School of Business researchers Michal Kosinski and Yilun Wang’s claims that they had built artificial intelligence that could tell if we are gay or straight based on a few images of our faces. It seemed that Kosinski, an assistant professor at Stanford’s graduate business school who had previously gained some notoriety for establishing that AI could predict someone’s personality based on 50 Facebook Likes, had done it again; he’d brought some uncomfortable truth about technology to bear.

The study, which is slated to be published in The Journal of Personality and Social Psychology, drew plenty of skepticism. It came from those who follow AI research, as well as from LGBTQ groups such as Gay and Lesbian Advocates & Defenders (GLAAD).

“Technology cannot identify someone’s sexual orientation. What their technology can recognize is a pattern that found a small subset of out, white gay and lesbian people on dating sites who look similar. Those two findings should not be conflated,” Jim Halloran, GLAAD’s chief digital officer, wrote in a statement claiming the paper could cause harm exposing methods to target gay people.

On the other hand, LGBTQ Nation, a publication focused on issues in the lesbian, gay, bisexual, transgender, queer community, disagreed with GLAAD, saying the research identified a potential threat.

Regardless, reactions to the paper showed that there’s something deeply and viscerally disturbing about the idea of building a machine that could look at a human and judge something like their sexuality.

“When I first read the outraged summaries of it I felt outraged,” said Jeremy Howard, founder of AI education startup fast.ai. “And then I thought I should read the paper, so then I started reading the paper and remained outraged.”

Excluding citations, the paper is 36 pages long, far more verbose than most AI papers you’ll see, and is fairly labyrinthian when describing the results of the authors’ experiments and their justifications for their findings.

Kosinski asserted in an interview with Quartz that regardless of the methods of his paper, his research was in service of gay and lesbian people that he sees under siege in modern society. By showing that it’s possible, Kosinski wants to sound the alarm bells for others to take privacy-infringing AI seriously. He says his work stands on the shoulders of research happening for decades—he’s not reinventing anything, just translating known differences about gay and straight people through new technology.

“This is the lamest algorithm you can use, trained on a small sample with small resolution with off-the-shelf tools that are actually not made for what we are asking them to do,” Kosinski said. He’s in an undeniably tough place: Defending the validity of his work because he’s trying to be taken seriously, while implying that his methodology isn’t even a good way to go about this research.

Essentially, Kosinski built a bomb to prove to the world he could. But unlike a nuke, the fundamental architecture of today’s best AI makes the margin between success and failure fuzzy and unknowable, and at the end of the day accuracy doesn’t matter if some autocrat likes the idea and takes it. But understanding why experts say that this particular instance is flawed can help us more fully appreciate the implications of this technology.

Is the science good?

By the standards of the AI community, how the authors conducted this study was totally normal. You take some data—in this case it was 15,000 pictures of gay and straight people from a popular dating website—and show it to a deep-learning algorithm. The algorithm sets out to find patterns within the groups of images.

“It couldn’t be more standard,” Howard said of the authors’ methods. “Super standard, super simple.”

Once the algorithm has analyzed those patterns, it should be able to find similar patterns on new images. Researchers typically set a few images aside from the data the algorithm is taught with in order to test it and make sure it’s actually learning patterns between people in general and not just those specific people.

There are two important parts here: the algorithm and the data.

The algorithm that Kosinski and Wang used is called VGG-Face. It’s a deep-learning algorithm custom-built for working with faces, which means the original authors of the software, a group from the highly-regarded Oxford Vision Lab, went through a lot of pains to make sure it focuses on the face and not a face’s surroundings. It’s been shown to be great at recognizing people’s faces across different images and even finding people’s doppelgängers in art.

It’s important to focus only on the face because deep-learning algorithms have been shown to pick up on biases in the data they analyze. When they’re looking for patterns between data, they pick up all kinds of other patterns that may not be relevant to the intended task but affects the outcome of the machine’s decision. A paper late last year tried to prove a similar algorithm could tell if someone was a criminal from their face—it was later shown that the original data for “innocent” people were filled with businessmen wearing white collars. The algorithm thought if you wore a white collar you were innocent.

The job of the AI researcher has flipped from about 10 years ago. Before deep learning became the norm, researchers had to specifically tell algorithms what patterns to find: look for the distance between the eyebrow and nose, the lips and chin, etc. They called it feature engineering. Now the process is more akin to whittling down distractions for machines, like those pesky white collars. But since this new method is reductive, and it’s maddeningly difficult to know what patterns your algorithm will find in the first place, it’s much harder to make sure that every distraction is actually gone.

Here’s where all that background becomes important: a main claim of the authors is that their algorithm works well because VGG-Face, the algorithm, doesn’t really consider the person’s facial expression or pose that heavily. Rather, it should focus on permanent features like the length of the nose or width of the face, because those stay consistent when recognizing a person.

The authors say this is necessary because, since the pictures are from a dating site, gay and straight people might use different facial expressions, camera angles, or poses to seem attractive based on the sex they’re trying to attract. It’s an idea backed by sociologists, and if your claim is that facial structure is different for gay people and straight people, then you definitely don’t want something as fleeting as a facial expression to confuse that.

But some AI researchers doubt that VGG-Face is actually ignoring expression and pose, because the model isn’t being used for its intended use, to simply identify people. Rather, it’s being used to find complex patterns between different people. Tom White, a computational design professor at the Victoria University School of Design, says that, in his experience, VGG-Face is sensitive enough to facial expressions that you can actually use it to only tell the difference between them. Using the same parameters as in the paper, he ran his own quick experiment, and found that the VGG-Face algorithm trained on pictures of people making a “sad” face could identify the emotion “sad” elsewhere.

In short: if emotion can be detected in his test, it can be detected in Kosinski’s.

Howard, the fast.ai founder, says there might be a reason for this. The VGG-Face algorithm that anyone can download and use was originally built to identify celebrity faces; think about it, who do we have more easily-accessible photos of than celebrities?

But when you want the algorithm to do something besides tell Ashton Kutcher from Michael Moore, you have to scrape that celebrity knowledge out. Deep-learning algorithms are built in layers. The first layer can tell the shape of a head, the second finds the ears and nose, and the details get finer and finer until it narrows down to a specific person on the last layer. But Howard says that last layer, which has to be removed, can also contain knowledge connecting different pictures of the same person by disregarding their pose.

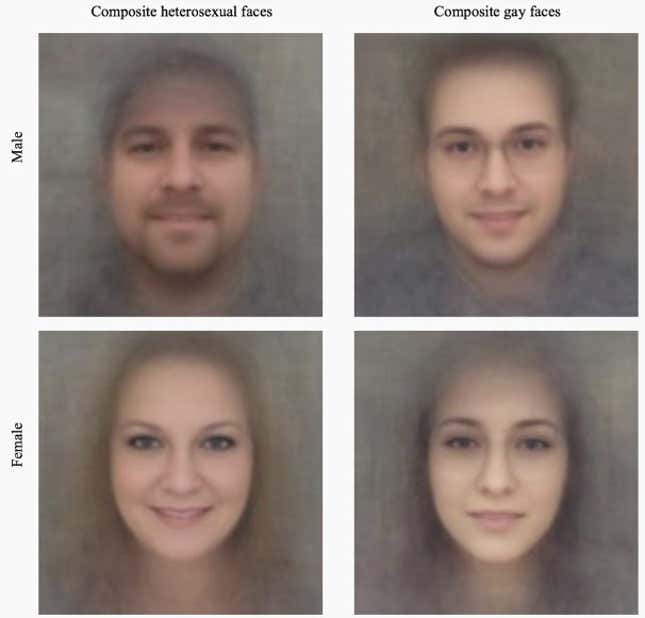

The authors themselves note that confounding factors snuck into the algorithm through the data. For instance, they found that gay and straight men wore different facial hair, and gay women were more prone to wear baseball caps.

This is just one critique: we don’t know for sure that this is true, and it’s impossibly difficult to find out given the information provided in the paper or even if you had the algorithm. Kosinski doesn’t claim to know all the ways he might be wrong. But this potential explanation based on the test of another AI researcher throws doubt into the idea that VGG-Face can be used as a perfect oracle to detect something about a person’s facial features while ignoring confounding details.

The second aspect of this research, apart from the algorithm, are the data used to train the facial-recognition system. Kosinski and Wang write that they gathered roughly 70,000 images from an undisclosed US dating website. Kosinski refuses to say whether he worked with the dating site or was allowed to download images from it—he’ll only say that the Stanford Internal Review Board approved the research.

However, the paper doesn’t indicate that Kosinski and Wang had permission to scrape that data, and a Quartz review of major dating websites including OKCupid, Match.com, eHarmony, and Plenty of Fish indicate that scraping or using the sites’ data for research is prohibited by the various Terms of Service.

A researcher using a company’s data would usually reach out for a number of reasons; primarily to ask permission to use the data, but also because a modern internet company routinely gathers information about its users from data on its site. The company could have disclosed technological or cultural biases inherent in the data for researchers to avoid.

Either way, it’s unclear how images of people taken from dating websites and sorted only into gay and straight categories accurately represent their sexuality. Images could be misleading because people present themselves in a manner they think will attract their targeted sex, meaning a higher likelihood of expressions, makeup, and posing. These are impermanent features, and the authors even note that makeup can interfere with the algorithm’s judgment. The authors also assume that men looking for male partners and females looking for female partners are gay, but that’s a stunted, binary distillation of the sexual spectrum sociologists today are trying to understand.

“We don’t actually have a way to measure the thing we’re trying to explain,” says Philip N. Cohen, a sociologist at the University of Maryland, College Park. “We don’t know who’s gay. We don’t even know what that means. Is it an identity where you stand up and say ‘I am gay,’ is it an underlying attraction, or is it a behavior? If it’s any of those things, it’s not going to be dichotomous.”

Cohen says that whatever the measure, sexuality is not an either/or, as the study suggests. To only measure in terms of gay or straight doesn’t accurately reflect the world, but instead forces a human construct onto it—a hallmark of bad science.

To that, Kosinski says the study was conducted within the confines of what users reported themselves to be looking for on these dating sites—and it comes back to the point that someone using this maliciously wouldn’t split hairs over whether someone was bisexual or gay.

What about the numbers?

Despite the 91% accuracy reported in other news outlets, that number only comes from a very specific scenario.

The algorithm is shown five pictures each of two different people who were looking for the same or opposite sex on the dating site, and told that one of them is gay. The algorithm then had 91% accuracy designating which one of those two people was more likely to be gay.

The accuracy here has a baseline of 50%—if the algorithm got any more than that, it would be better than random chance. Each of the AI researchers and sociologists I spoke with said the algorithms undeniably saw some difference between the two sets of photos. Unfortunately, we don’t know for sure what the difference it saw was.

Another experiment detailed in the paper calls the difference analyzed between gay and straight faces into further question. The authors selected 100 random people from a bigger pool of 1,000 people in their data. Estimates from the paper put roughly 7% of the population as gay (Gallup says 4% identify as LGBT as of this year, but 7% of millennials), so from a random draw of 100 people, seven would be gay. Then they tell the algorithm to pull the top 100 people who are most likely to be gay from the full 1,000.

The algorithm does it; but only 43 people are actually gay, compared to the entire 70 expected to be in the sample of 1000. The remaining 57 are straight, but somehow exhibit what the algorithm thinks are signs of gayness. At its most confident, asked to identify the top 1% of perceived gayness, only 9 of 10 people are correctly labeled.

Kosinski offers his own perspective on accuracy: he doesn’t care. While accuracy is a measure of success, Kosinski said he didn’t know if it was ethically sound to create the best algorithmic approach, for fear someone could replicate it, instead opting to use off-the-shelf approaches.

In reality, this isn’t an algorithm that tells gay people from straight people. It’s just an algorithm that finds unknown patterns between two groups of people’s faces who were on a dating site looking for either the same or opposite sex at one point in time.

Do claims match results?

After reading Kosinski and Wang’s paper, three sociologists and data scientists who spoke with Quartz questioned whether the author’s assertion that gay and straight people have different faces is supported by the experiments in the paper.

“The thing that [the authors] assert that I don’t see the evidence for is that there are fixed physiognomic differences in facial structure that the algorithm is picking up,” said Carl Bergstrom, evolutionary biologist at the University of Washington in Seattle and co-author of the blog Calling Bullshit.

The study also heavily leans on previous research that claims humans can tell gay faces from straight faces, indicating an initial benchmark to prove machines can do a better job. But that research has been criticized as well, and mainly relies on the images and perceptions humans hold about what a gay person or straight person looks like. In other words, stereotypes.

“These images emerge, in theory, from people’s experience and stereotypes about gay and straight individuals. It also shows that people are quite accurate,” Konstantin Tskhay, a sociologist who conducted research on whether people could tell gay from straight faces and cited in Kosinski and Wang’s paper, told Quartz in an email.

But since we can’t say with total certainty that the VGG-Face algorithm hadn’t also picked up those stereotypes (that humans see too) from the data, it’s difficult to call this a sexual-preference detection tool instead of a stereotype-detection tool.

Does the science matter?

This kind of research, like Kosinski’s last major research on Facebook Likes, falls into a category close to “gain of function” research.

The general pursuit is creating dangerous situations to understand them before they happen naturally—like making influenza far more contagious to study how it could evolve to be more transmittable—and it’s extremely controversial. Some believe this kind of work, especially when practiced in biology, could be easily translated into bioterrorism or accidentally create a pandemic.

For instance, the Obama administration paused work on GOF research in 2014, citing that the risks needed to be examined more before enhancing viruses and diseases further. Others say the risk is worth having an antidote to a bioterrorism attack, or averting the next Ebola outbreak.

Kosinski got a taste of the potential misuse with his Facebook Like work—much of that research was directly taken and translated into Cambridge Analytica, the hyper-targeting company used in the 2016 US presidential election by the Cruz and Trump campaigns. He maintains that he didn’t write Cambridge Analytica’s code, but press reports strongly indicate its fundamental technology is built on his work.

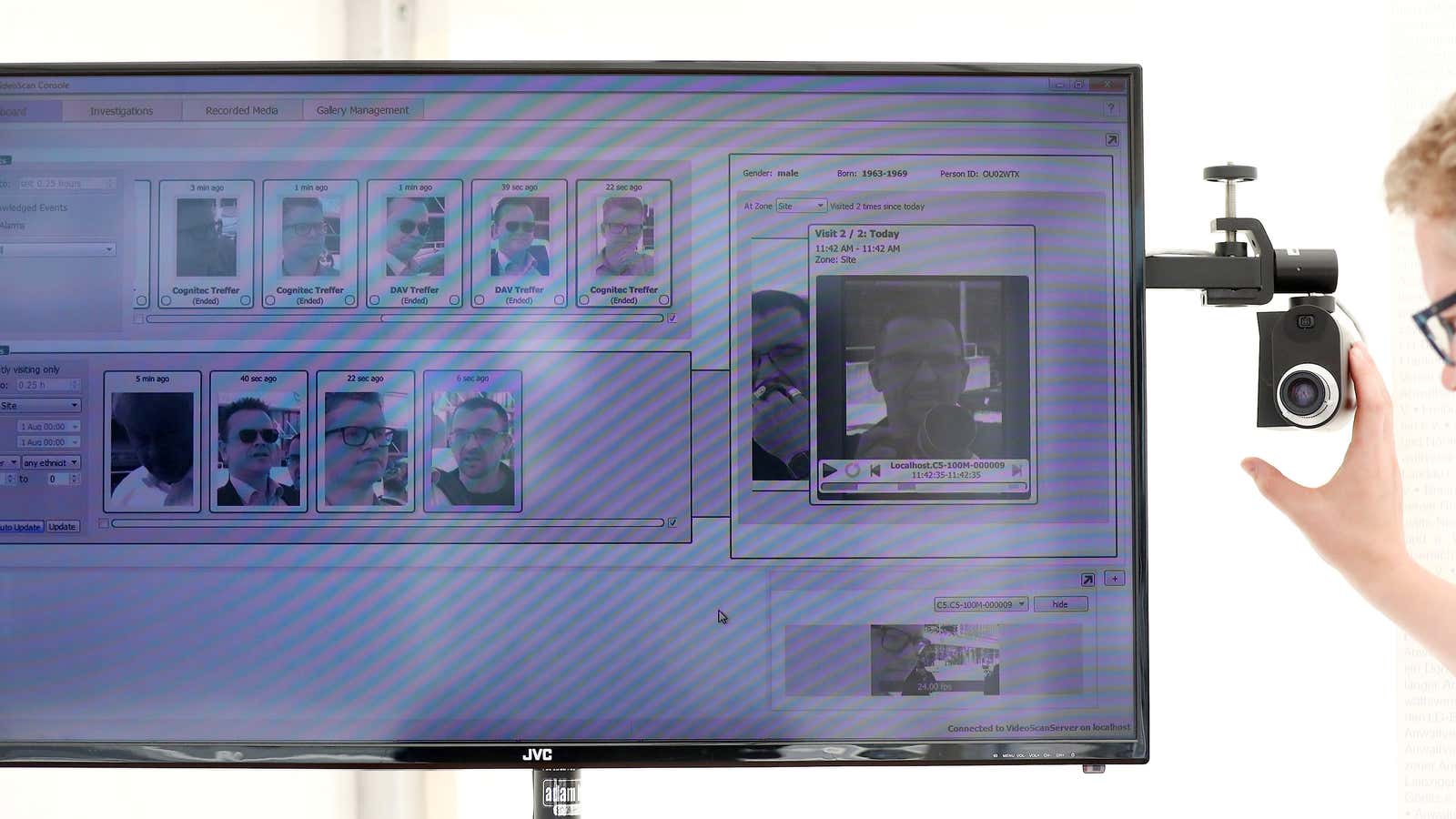

He maintains that others were using hypertargeting technology before Cambridge Analytica, including Facebook itself—and other people are using facial recognition technology to target people, like police targeting criminals, now.

On Twitter, within the paper’s text, and on the phone, Kosinski skirts the line between provocateur and repentant scientist. He insists to journalists that his work is sound and we should be fearful of AI’s implications, while exposing his inner desire for it to be wrong. He’s Paul Revere, but one who published papers on the best ways for the British to attack.

“It’s not ultimate proof,” he says. “It’s just one study, and I hope there will be more studies conducted. I hope studies will not only focus on seeing whether it replicates in other places, but also potentially other types of scientists will look at it, legal scholars and policy makers … and engineers in computer scientist departments, and say, ‘What can we do to make these predictions as difficult as possible?'”

Correction: An earlier version contained a quote from Bergstrom which stated Kosinski and Wang did not cite research which linked facial morphology and sexuality. Kosinski and Wang did cite two papers in their research, and Bergstrom clarified after publication that he was referring to 3D scans. A clause was also added giving context to the number of false positives in the research.