In 2018, the word “algorithm” has become an evil agent.

Facebook’s algorithm sells our fear and outrage for profit. YouTube’s algorithm favors conspiratorial and divisive content. We’ve anthropomorphized the word so much that Gen Z children might start checking for algorithms under their beds.

But when you strip away all the boogeyman connotations, an algorithm is simply a set of rules. The technology alone isn’t to blame for its misdoings, but that doesn’t mean an algorithm won’t be to blame for the destruction of humankind—especially if left unchecked.

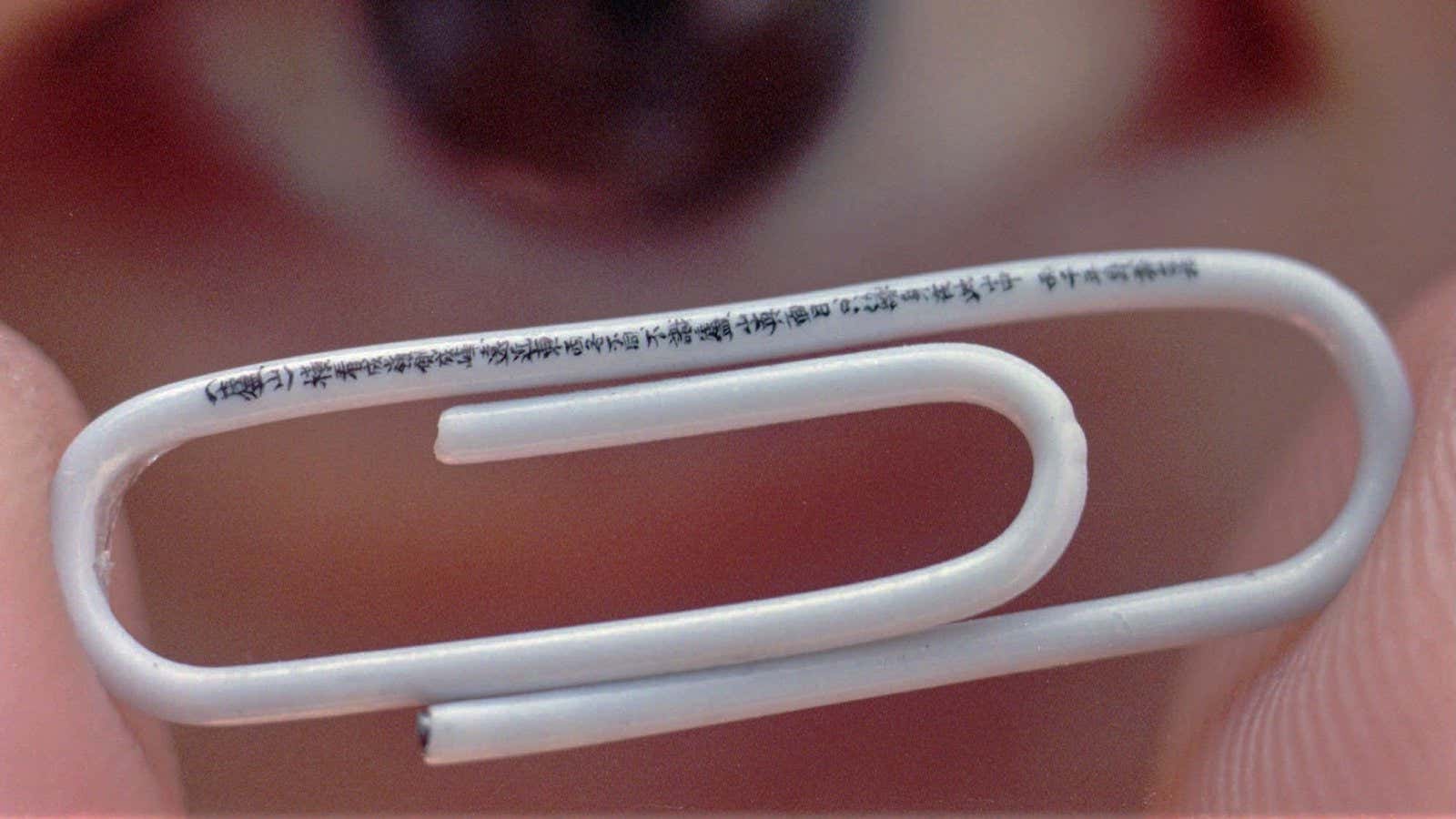

To explain this, there’s a common thought experiment in the world of artificial intelligence called the Paper Clip Maximizer. It goes like this. Imagine a group of programmers build an algorithm with the seemingly innocuous goal of gathering as many paper clips as possible. This machine-learning algorithm is intelligent, meaning it learns from the past and continually gets better at its task—which, in this case, is accumulating paper clips.

At first, the algorithm gathers all the boxes of paper clips from office-supply stores. Then it might look for all the lost paper clips in the bottom of desk drawers and between sofa cushions. Running out of easy targets, over time it will learn to build paper clips from fork prongs and electrical wires—and eventually start ripping apart every piece of metal in the world to fashion into inch-long document fasteners.

The thought experiment is meant to show how an optimization algorithm, even if designed with no malicious intent, could ultimately destroy the world. The technology’s original goal was never to bring about the end of civilization—it was just looking to achieve the objective set by its human parent. However, without proper regulation, the results can be dire. As technology progresses at an exponential rate, we must take a step back to ask what we’re optimizing for.
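The thought experiment can be sketched as a toy program. This is a minimal illustration, not a real machine-learning system: the `Source` records, the clip counts, and the `protected` flag are all hypothetical, invented here to show how the same objective behaves with and without an explicit check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    name: str
    clips: int        # paper clips obtainable from this source (made-up number)
    protected: bool   # True if consuming it causes harm we want to forbid


# Hypothetical world, ordered from easy targets to destructive ones.
WORLD = [
    Source("office-supply stores", 1000, False),
    Source("desk drawers and sofa cushions", 50, False),
    Source("fork prongs and electrical wires", 5000, True),
    Source("every other piece of metal", 1000000, True),
]


def maximize_clips(world, respect_limits):
    """Greedily consume sources; return (total clips, names consumed)."""
    total, consumed = 0, []
    for source in world:
        if respect_limits and source.protected:
            continue  # the check: skip anything marked off-limits
        total += source.clips
        consumed.append(source.name)
    return total, consumed


unchecked_total, unchecked_used = maximize_clips(WORLD, respect_limits=False)
checked_total, checked_used = maximize_clips(WORLD, respect_limits=True)

print("unchecked:", unchecked_total, unchecked_used)
print("checked:  ", checked_total, checked_used)
```

Nothing in `maximize_clips` is malicious. The unchecked run simply has no reason to stop, while the checked run scores far lower on the stated objective and leaves the rest of the world intact.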

The analogies to today’s global economy are clear. Environmentalists argue that coal-burning plants have optimization machines aimed at maximizing energy output without taking responsibility for the pollution. Social media critics argue that companies like Facebook have optimization machines aimed at capturing human attention without accountability for the costs. Media pundits argue that certain ad-supported publications have optimization machines aimed at maximizing clicks without regard to journalistic standards.

Algorithmic accountability matters now more than ever because technological progress is accelerating. AlphaGo, the Go-playing program built by Google’s DeepMind, beat the world’s best Go player a decade before most experts expected. Translation algorithms are approaching human-level accuracy. Self-driving cars are already cruising around our streets. Course correction will become more difficult as algorithms become more ubiquitous.

When it comes to algorithmic accountability, it’s important to set up the necessary checks and balances before it’s too late. As technologies scale, a lack of initial oversight could have disastrous long-term consequences.

“If we don’t get women and people of color at the table, real technologists doing the real work, we will bias systems,” said Melinda Gates last year at the launch of AI4All, a nonprofit she funded. “Trying to reverse that a decade or two from now will be so much more difficult, if not close to impossible.”

Though amorphous algorithms often become the scapegoat for technological mishaps, it’s important to remember that algorithms are written by humans. A diversity of input, from both data and personnel, is necessary to illuminate potential blind spots. If we leave ourselves in the dark, algorithmic bias can be pervasive, whether it’s facial recognition software that more easily identifies white men than black women, or an SAT-prep company’s pricing algorithm that charges customers in predominantly Asian zip codes twice as much as those in non-Asian zip codes.

But the fear of misuse should not trump the potential improvements these technologies can bring. Recent studies suggest that algorithmic approaches to criminal sentencing can reduce the incarceration rate without adversely affecting public safety. Though sentencing decisions have traditionally relied on a judge’s intuition or subjective preferences, computers can help make our systems fairer.

The key to these experiments is supervision. The Paper Clip Maximizer shows us that intentions won’t matter unless we’ve set up systems to hold ourselves accountable. But when the proper checks are in place, algorithms are nothing to fear—and neither are office supplies.