Newly spotted code on a Facebook website suggests that the platform is preparing to take a leap forward in transparency around how political ads are targeted to its users. Facebook denies any change is afoot.

Microtargeting on Facebook allows progressive voting rights groups to show their ads only to racial minorities. Or a pro-nuclear energy group to choose vegans as its target audience. In 2016, Russian operatives attempted to divide the American population by directing Facebook to show racially divisive messages, for instance, only to African-Americans. Such targeting power is built into Facebook’s design. In fact, a recent Senate Intelligence Committee report said Russian meddlers had used the Facebook platform “exactly as it was engineered to be used.”

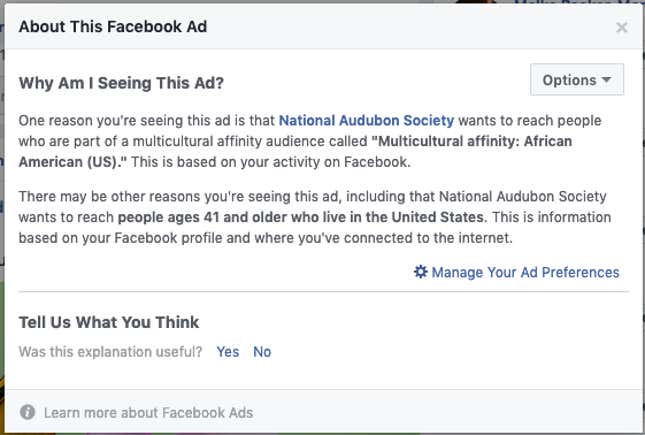

Yet information about how each ad is targeted is available only to people who are shown that ad and then click on an obscure button. The feature is known on the platform as “Why am I seeing this?” or “WAIST,” and Facebook has consistently refused to disclose WAIST info for individual ads to the broader public.

But that information appears to be part of an upcoming redesign of Facebook’s political ad transparency website, according to Quartz’s review of the code already public on that site.

Facebook has denied it, however. Spokesperson Tom Channick told Quartz, “We are not considering adding targeting parameters to the ad library at this time.”

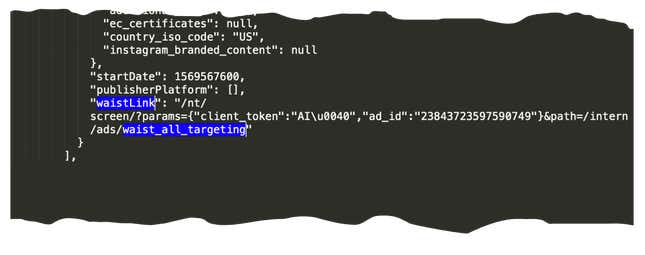

But earlier this month, the JavaScript code inside Facebook’s ad library site included several references to a button for WAIST.

And the data stream that powers ad library pages included a new “waistLink” category and a link to a non-public page that includes “waist_all_targeting” in the URL.
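For readers who want to run this kind of check themselves, here is a minimal sketch of how one might search a JSON-like payload for such references. It is not Quartz’s actual method, and the payload below is hypothetical: only the names “waistLink” and “waist_all_targeting” come from the code described above, while the surrounding structure, the `find_mentions` helper and the example URL are assumptions made for illustration.

```python
from typing import Any, Iterator, Tuple


def find_mentions(node: Any, needle: str, path: str = "$") -> Iterator[Tuple[str, str]]:
    """Yield (path, text) for every dict key or string value containing needle."""
    needle = needle.lower()
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}"
            if needle in str(key).lower():
                yield child, str(key)
            # Keep walking into the value, which may itself be nested.
            yield from find_mentions(value, needle, child)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_mentions(item, needle, f"{path}[{index}]")
    elif isinstance(node, str) and needle in node.lower():
        yield path, node


# Hypothetical payload. Only "waistLink" and "waist_all_targeting" appear in
# the reporting; every other field and the URL itself are made up.
sample = {
    "ads": [
        {
            "adText": "Example ad",
            "waistLink": "https://example.invalid/waist_all_targeting?ad=123",
        }
    ]
}

hits = list(find_mentions(sample, "waist"))
for where, text in hits:
    print(where, "->", text)
```

The search is case-insensitive, so it would catch “WAIST,” “waistLink” and “waist_all_targeting” alike.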

Channick characterized the appearance of references to “WAIST” as “a bug in the Ad Library that is now fixed.” After his response, the only change to the ad library site’s code was that references to the word “waist” were replaced with the word “link.” The link previously labeled “waistLink” was replaced with blank text. Facebook did not respond to a question asking what “WAIST” referred to if not “Why am I seeing this?”

If a change is coming, it might not be for the public at large. In the code, the tag “[T&C Only]” suggests it may be available to people who have agreed to some set of terms and conditions. Other portions of the code–but not the references to “WAIST”–were designated “FB-Only” or appeared to be limited to regulators.

New code in the ad library also hints at more powerful search tools, potentially letting researchers filter their searches to recently seen ads, ads viewed by a certain number of people, ads seen by geography, and ads seen on Facebook’s other apps: WhatsApp, Instagram, its Audience Network, and Facebook Messenger. (This was confirmed today in an announcement by Facebook.)

Also new: If you know how to construct the URL, you can link to an individual ad in the library, one of many changes researchers have been requesting for some time.

Microtargeting information is valuable for advertisers and their competitors, so Facebook has an incentive to guard it. But it’s also important for researchers seeking to understand the opaque strategic choices advertisers make, particularly for political and issue advertising, which remain under the specter of foreign election interference or attempts to dissuade people from voting. Researchers and journalists (including this one) have sought access to the data for years, and the platforms haven’t given a clear answer as to why they won’t publicize it.

Annie Levene, a partner at Democratic digital strategy firm Rising Tide Interactive, cautions that the availability of more detailed targeting information, if it occurs, won’t necessarily shed a huge amount of light on the actions of political campaigns as opposed to bad actors. “A lot of the more sophisticated political targeting is going to be done through uploading custom lists” of individual voters, she says. In those cases, Facebook doesn’t directly know why campaigns are targeting those people.

Facebook may also be preparing to share more targeting information with individual users. While people often see vague explanations, like “One reason you’re seeing this ad is that Democratic Party wants to reach people interested in Barack Obama,” some users have started to see more information, according to code and screenshots reviewed by Quartz. The new versions list multiple interest segments an advertiser chose to target with their ad.

“This was our main critique beforehand, that Facebook was not giving all the reasons that advertisers” had selected to target, said Oana Goga, a researcher at the Université Grenoble Alpes in France, who was part of a team that exposed the incompleteness of Facebook’s explanations.

Kari Chisholm, a Democratic political consultant and president of Mandate Media, applauds Facebook’s efforts at transparency. But he says that “it would be a mistake for Facebook to reveal targeting information that campaigns use in a competitive way. That’ll just encourage campaigns to engage in tactics to disguise their moves, fake out opponents, and distract the public. At best, that’s a waste of time and money; at worst, it could lead to unethical behavior.”

Let us know if you know more.

This story is from Quartz’s investigations team. Here’s how you can reach us with feedback or tips:

Email (insecure): [email protected]

Signal (secure): +1 929 202 9229

Secure Drop (secure & anonymous): qz.com/tips