Internet and technology experts see voice recognition as the next big thing for “smart,” internet-connected devices, according to a report released today by Pew Research Center. A survey of more than 1,600 people identified as technology builders and analysts found that most expected voice recognition and speech synthesis to be the computer interface of the near future.

Those surveyed were skeptical of Google Glass’s acceptance in society. “Speech synthesis and voice recognition will trump glass,” John Markoff, senior writer for the Science section of the New York Times, wrote in his survey response. “We will talk to our machines, and they will speak to us.”

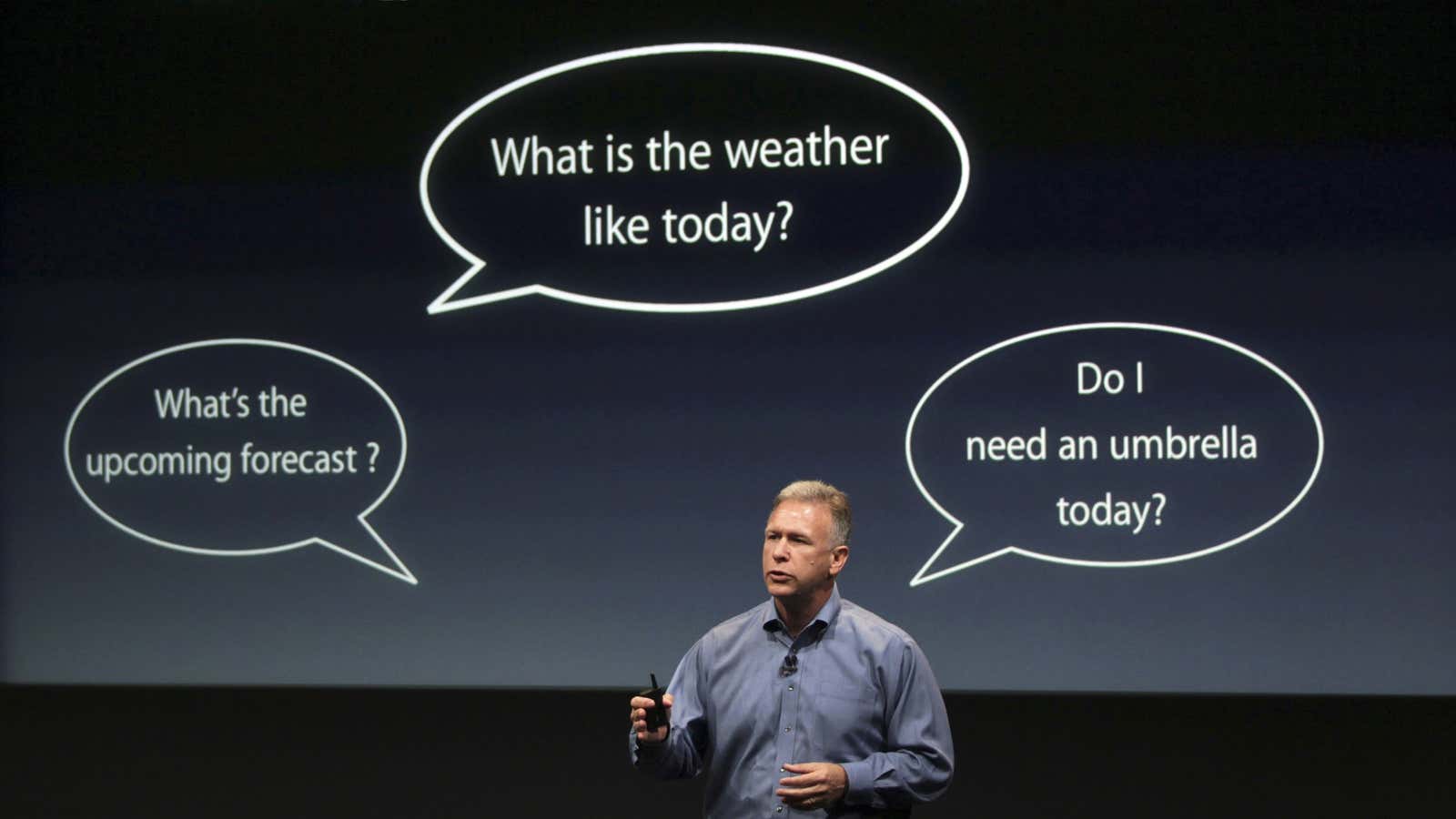

Although many have high hopes for a world of smart audibles, our race to create simpler devices today could be setting us up for a frustratingly complex future. “We will soon have hundreds of computer-powered devices that we can command with just our voices,” Amy Webb, a digital media futurist and the CEO of strategy firm Webbmedia Group, wrote in her survey response. “Our phones, our clocks, our cars. Unfortunately, the future won’t materialize as it did in Star Trek, where a single galactic federation of developers and linguists contributed to a gigantic matrix of standard human-machine language to build a Universal Translator.”

In the real world, she wrote, those developing voice interfaces can’t even agree on what to call them, let alone on a standard protocol to be shared between devices. Each corporation and startup develops its own method of voice control. “This will result in our having to know myriad voice commands,” Webb wrote, “or in effect, having to learn how to speak different computer dialects.”

Webb expanded on this idea in a recent piece for Slate: Without standardized commands, her daughter is left yelling “OK!” (which turns on Google Glass) to activate a device that instead responds to “hello.”

Right now, it seems like a great problem to have: Devices you can control with a word, and no two of them the same. And one would hope that voice recognition will soon be “smart” enough to interpret intuitive commands—and to know which device you mean to control. Perhaps one day we’ll have a single operating system running all of our technology, allowing us to talk to one device and control them all. But without that Star Trek-esque communicator, we’re left with the audio equivalent of a pile of incompatible remotes next to the couch. We’d better hope that developers—if not machines—can learn to talk amongst themselves.