Every profession needs a metric to gauge performance. For scientists, that metric is the citation count: the number of times other researchers refer to their work. It is used in internal reviews, funding applications, journal rankings, ego massaging, and all sorts of other activities that shape the business of science.

But the assumption that more citations mean better science has always been suspect. A raw count does not indicate whether other researchers are saying positive or negative things about the cited work.

In the first large-scale attempt to understand whether citations are truly a useful metric, a group of American and Canadian economists decided to quantify the proportion of “negative citations” in scientific literature. Their analysis, published in the Proceedings of the National Academy of Sciences, includes 760,000 citations in 16,000 studies published in the Journal of Immunology.

To make sure their analysis was accurate, they enlisted immunologists to classify some 15,000 citations as negative or positive, based on the context in which each reference was cited. They then fed phrases such as “…the data therefore contrast with reports that…” or “…this conclusion appeared inconsistent with…” into an algorithm and used it to classify the remaining citations automatically.
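The article does not describe the researchers' algorithm, so the following is only a rough illustration of the general idea: learn from a hand-labeled sample, then label the rest automatically. It is a tiny multinomial naive Bayes classifier written from scratch. The training sentences, labels, and function names (`train`, `classify`) are hypothetical stand-ins, not the study's data or method.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a citation context and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def train(examples):
    """Fit a multinomial naive Bayes model on (context, label) pairs.

    Returns per-label document counts, per-label word counts, and the vocabulary.
    """
    doc_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for tok in tokenize(text):
            counts[tok] += 1
            vocab.add(tok)
    return doc_counts, word_counts, vocab


def classify(model, text):
    """Return the label with the highest log-posterior score."""
    doc_counts, word_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label in doc_counts:
        counts = word_counts[label]
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)
        for tok in tokenize(text):
            # Add-one (Laplace) smoothing so unseen words don't zero out the score.
            score += math.log((counts[tok] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical hand-labeled contexts, standing in for the experts' annotations.
labeled = [
    ("the data therefore contrast with reports that", "negative"),
    ("this conclusion appeared inconsistent with", "negative"),
    ("our results fail to replicate the findings of", "negative"),
    ("consistent with previous work showing that", "positive"),
    ("as described previously we used the method of", "positive"),
    ("in agreement with earlier studies", "positive"),
]

model = train(labeled)
print(classify(model, "these findings are inconsistent with reports that"))  # → negative
print(classify(model, "consistent with earlier work"))  # → positive
```

A real system would need far more labeled examples and richer features than single words; the point here is only the two-step workflow of human labeling followed by automated classification.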

They found that only 2.4% of all citations were negative, and only 7.1% of the 150,000 cited papers received any negative citations. Interestingly, the papers with the most citations, the current proxy for quality, also received the most negative citations, although positive citations still outnumbered negative ones in most cases.

A lot of scientists will breathe a sigh of relief. The data seem to show that citation count can stand as a proxy for quality in most cases. But many will then scratch their heads: if that is the case, why do the most cited papers also receive the most negative citations?

Alexander Oettl, an economist at the Georgia Institute of Technology who led the study, has an explanation. “Scrutiny should be directed towards the most important ideas so that they can be further refined,” he told Nature. “Low-impact ideas receive less scrutiny, as overturning a trivial or minor finding does not drastically shift the scientific frontier.”

After all, only a small proportion of science will shape the future, and so only that portion needs to be criticized. But is it enough that just 7.1% of cited papers draw any criticism at all? We don’t know. Oettl and his colleagues plan to extend their analysis to other areas of science to see whether the proportion holds.

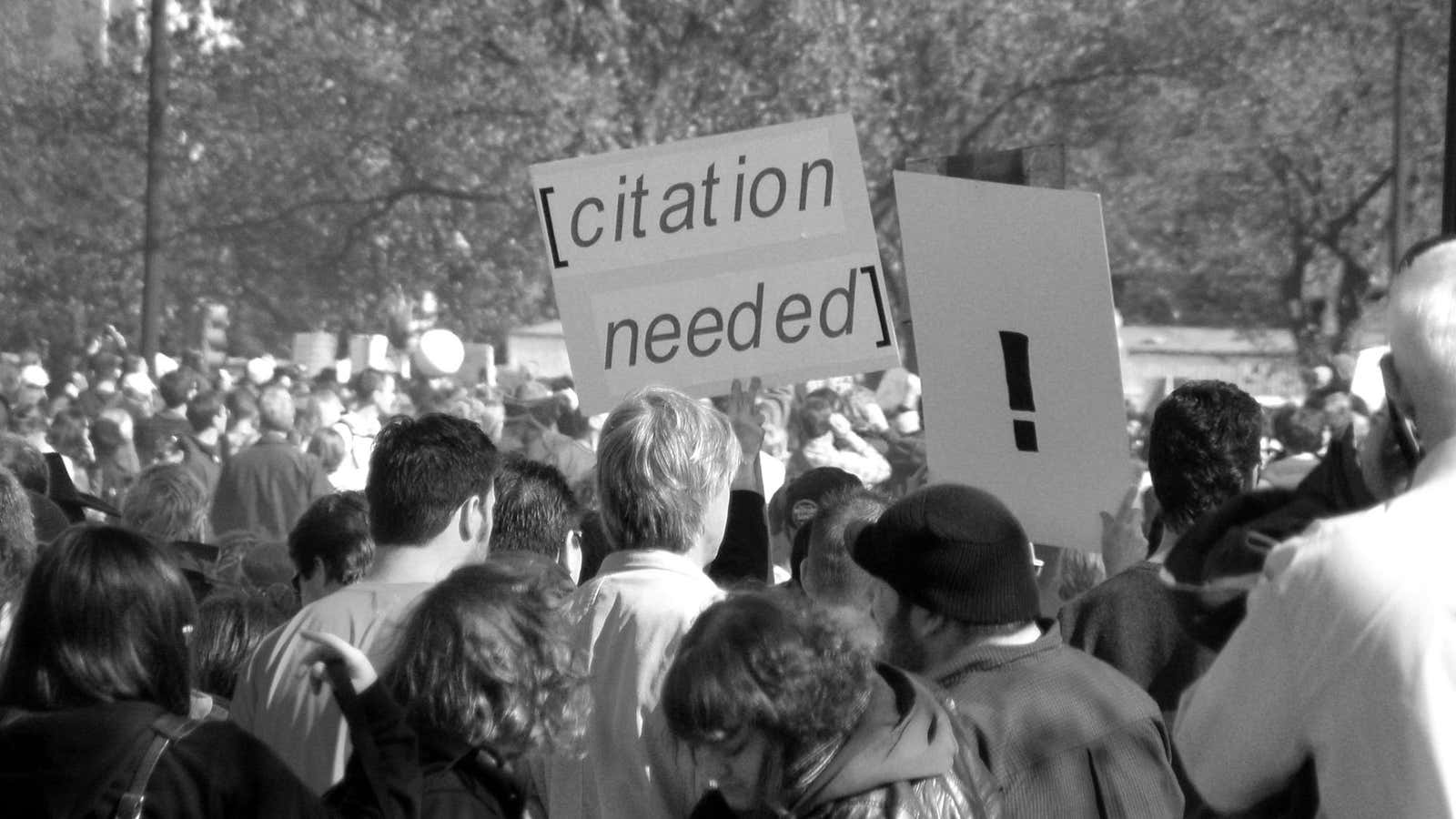

In the past decade, perverse career incentives have made it difficult for scientists to follow science’s core tenet of self-correction. But such flaws are increasingly being exposed, and the first step to fixing science is to count the times it goes wrong.

Edited image by Dan4th Nicholas on Flickr used under CC-BY 2.0 license.