The meteorite that exploded in southern Russia last month and the near-miss asteroid that passed by the same week have more than a few of us pondering humanity’s potential extinction. But to Oxford philosopher Nick Bostrom, these are mere trifles. Asteroids, global warming, or even a super volcano that would take us 100,000 years to recover from “would be a brief pause on cosmic time scales,” Bostrom tells Aeon’s Ross Andersen, who recently profiled him and some of his peers at the university’s Future of Humanity Institute, an article that is worth reading in full.

These experts devote their lives to thinking about the risks that could not merely kill billions but put paid to humanity once and for all. And what really scares them is us—more specifically, what our primitive primate brains are capable of creating. Here’s what gives a human extinction theorist the heebie-jeebies, in increasing order of existential dread:

The Bomb

Nuclear weapons were the first human technology capable of wiping out the human race. It’s not mushroom cloud explosions or radiation that would do it, but rather the resulting nuclear winter, which would potentially create so much dust that the whole planet’s climate is shifted and all of its crops are destroyed. But cheer up: Even then it might not be enough to wipe out every last one of us, and even the risk of a Cold-War-style holocaust seems to be receding for the moment.

Biological Warfare—and Accidents

Scarier are biological weapons, especially once advances in DNA manipulation put DIY plagues within the reach of any apocalyptic cult with a bit of imagination. A disastrous biological event doesn’t even have to be intentional, as Andersen notes: “Imagine an Australian logging company sending synthetic bacteria into Brazil’s forests to gain an edge in the global timber market. The bacteria might mutate into a dominant strain, a strain that could ruin Earth’s entire soil ecology in a single stroke, forcing 7 billion humans to the oceans for food.” Note to hypothetical Aussie loggers: Don’t do that.

Artificial Intelligence

It seems so innocuous the way that software keeps getting better—fixing our typos, optimizing our high-frequency stock trades, beating us at Jeopardy and chess. But when an artificial intelligence (AI) becomes just a tiny bit smarter than the humans that created it, things could get catastrophically unpredictable, very quickly.

Here’s Andersen again:

Bostrom told me that it’s best to think of an AI as a primordial force of nature, like a star system or a hurricane — something strong, but indifferent. If its goal is to win at chess, an AI is going to model chess moves, make predictions about their success, and select its actions accordingly. It’s going to be ruthless in achieving its goal, but within a limited domain: the chessboard. But if your AI is choosing its actions in a larger domain, like the physical world, you need to be very specific about the goals you give it.

Any safeguards you might think of carry their own unintended consequences. Design an AI with empathy? It might decide that you are happiest with a non-optional intravenous drip of heroin, and do whatever it takes to give it to you. Design an AI to answer questions and crave the reward of a button being pushed? It might figure out a way to dupe you into building a machine that presses the button at the AI’s command. Then, Bostrom explains, “It quickly converts a large fraction of Earth into machines that protect its button, while pressing it as many times per second as possible. After that it’s going to make a list of possible threats to future button presses, a list that humans would likely be at the top of.”

The scenarios basically end up with humans trying to keep a super-intelligent AI in a super-secure prison, and hoping that they’ve thought of everything. They will probably forget something.

The ‘Cosmic Omen’

The above threats may be a subset of a bigger problem. Russian physicist Konstantin Tsiolkovsky noticed in the 1930s that there just weren’t many aliens around. Enrico Fermi made a similar observation 20 years later, and the paradox that bears his name boils down to a question: “Where are they?”

Robin Hanson, a research associate at the Future of Humanity Institute, “says there must be something about the universe, or about life itself, that stops planets from generating galaxy-colonizing civilizations.” Maybe interstellar space travel is basically impossible. Or, more ominously, it may be that any sufficiently advanced civilization creates nukes, or biological weapons, or artificial intelligences that lead to its demise. Or it could be something that hasn’t occurred to us yet, or that we are not yet capable of conceiving. But the absence of aliens leads Hanson to a few troubling conclusions:

It could be that life itself is scarce, or it could be that microbes seldom stumble onto sexual reproduction. Single-celled organisms could be common in the universe, but Cambrian explosions rare. That, or maybe Tsiolkovsky misjudged human destiny. Maybe he underestimated the difficulty of interstellar travel. Or maybe technologically advanced civilisations choose not to expand into the galaxy, or do so invisibly, for reasons we do not yet understand. Or maybe, something more sinister is going on. Maybe quick extinction is the destiny of all intelligent life.

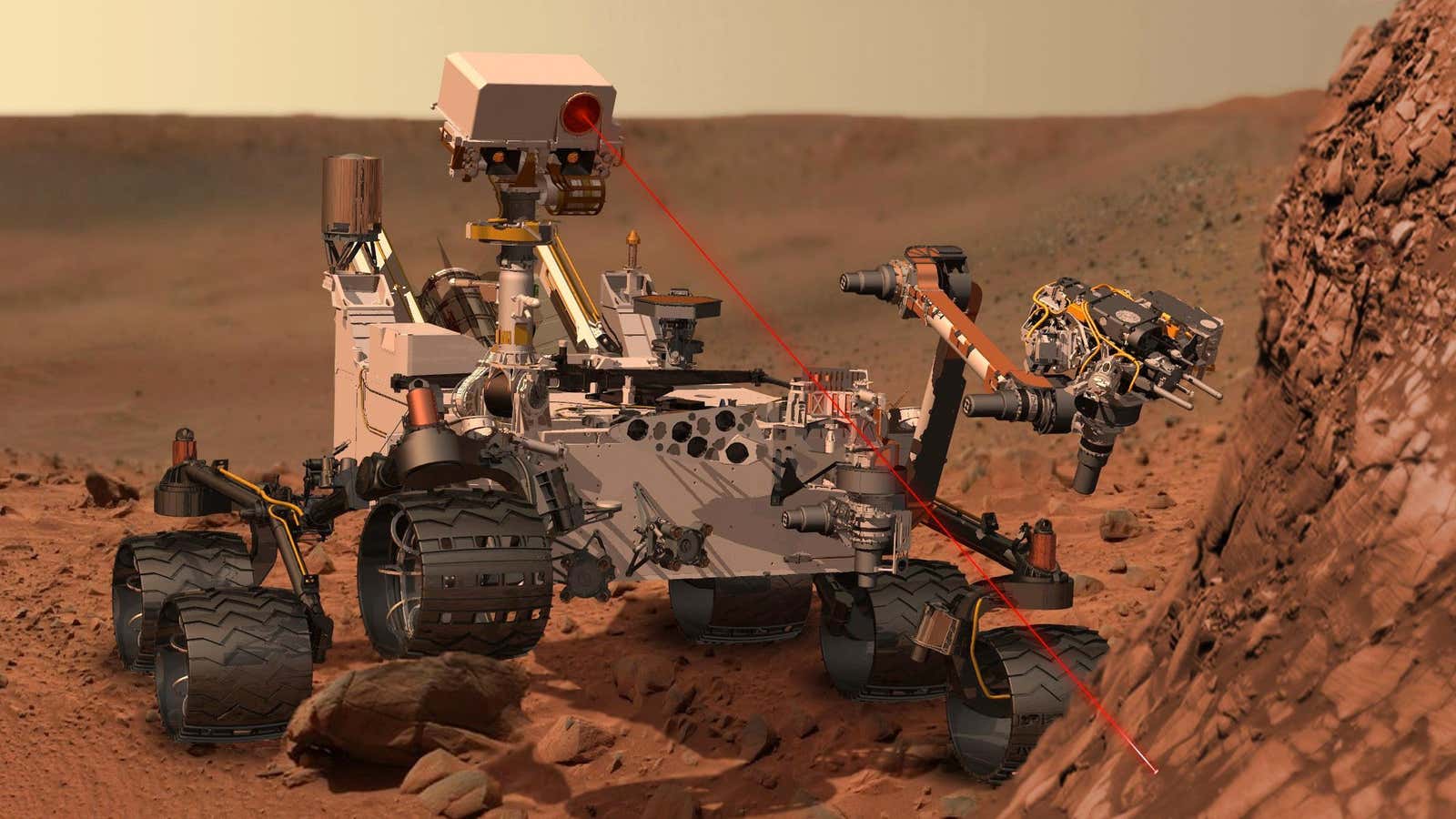

For this reason, Bostrom hopes that NASA’s Curiosity rover doesn’t find life on Mars. If Mars turns out to be lifeless, then perhaps the reason we haven’t met advanced civilizations is that they never existed, not that they self-destructed. The thought that life on Earth is unique would be a lonely one, but for a philosopher of human extinction, much more comforting.