Our lives are ruled by laws—but not necessarily the ones we think.

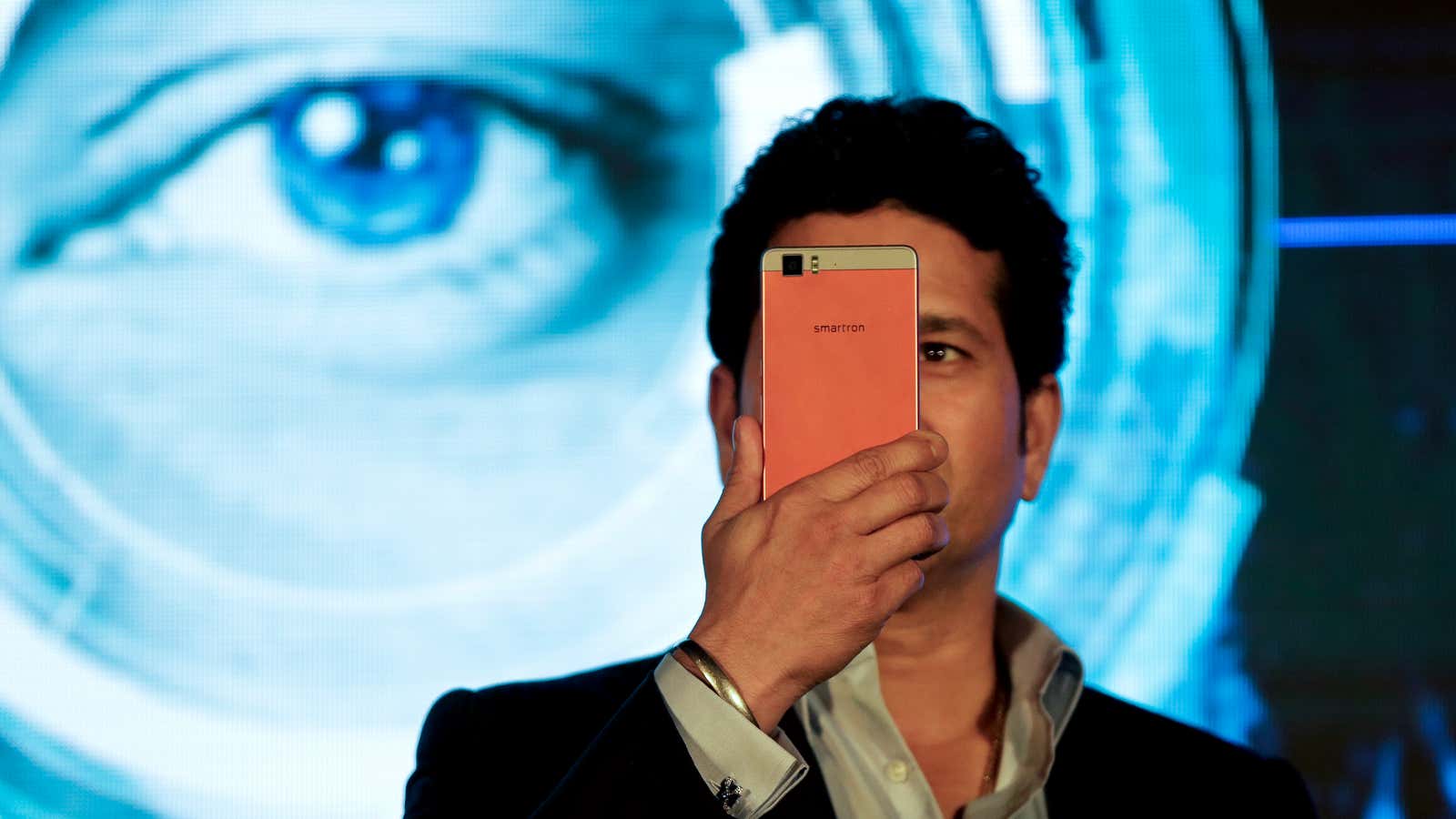

Consider the spread of the smartphone, which will soon become “the first universal tech product,” according to Benedict Evans of the venture capital firm Andreessen Horowitz. Driving the widespread adoption of the smartphone is Moore’s Law, commonly summarized as computing power doubling, and so getting cheaper and faster, roughly every 18 to 24 months. Also predictive of this eventuality is Metcalfe’s Law, which says that a network’s value grows roughly with the square of its number of users, so each new user makes it disproportionately more valuable. When mobile phones are both cheap and ubiquitous, no one can afford not to have them.
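To make the arithmetic behind these two laws concrete, here is a minimal sketch. It assumes the common textbook forms: one doubling of capacity per fixed period for Moore’s Law, and value proportional to the square of the user count for Metcalfe’s Law. The function names and the 24-month doubling period are illustrative choices, not figures from any one source.

```python
def moore_capacity(years, doubling_months=24.0):
    """Relative computing capacity after `years`, assuming one doubling
    every `doubling_months` (an assumed, adjustable period)."""
    return 2 ** (years * 12 / doubling_months)


def metcalfe_value(users):
    """Relative network value under the n-squared form of Metcalfe's Law."""
    return users ** 2


# Ten years at one doubling every 24 months is five doublings.
print(moore_capacity(10, 24))

# Growing a network tenfold multiplies its modeled value a hundredfold.
print(metcalfe_value(1000) / metcalfe_value(100))
```

Under these assumptions, a decade of doublings yields a 32-fold gain in capacity, and a tenfold increase in users yields a hundredfold increase in modeled network value. That is the combination that makes a cheap, ubiquitous device hard to opt out of.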

These laws dictate how and why technology scales. But they don’t tell us anything about the character of technology itself. For this we must turn to the more obscure Conway’s Law, which holds that our technology is infused with the essence of its creators.

A bit of background: In the late sixties, programmer Melvin Conway noticed that the way technology teams were structured had a direct impact on the technology they created. “Any organization that designs a system will produce a design whose structure is a copy of the organization’s communication structure,” he wrote. For example, a product constructed by four separate teams will have four parts. That’s why open source mobile operating systems like Google’s Android, which can be built by people scattered around the globe, tend to be both more flexible and messier than closed systems like Apple’s iOS.

But what seemed like a modest observation in the era of mainframe computing means something entirely different in an era when roughly eight billion people will soon be tapping away at their screens. Conway was interested in how people replicated their organizational structures in the technology they created. But if you widen the lens of his law, you start to see the larger implications. We imagine that our devices are logical and impersonal, but tech’s human creators actually build things in their own image. It’s difficult to see or quantify human influences. They are the ghosts in the machine, invisibly affecting everything we do.

So the logical question is, who are the people who are creating our technology?

We know from workforce surveys that tech companies have a significant diversity problem. According to self-reported statistics, in 2015 the overall workforces at Yahoo, Facebook, Google, and Apple were between 62% and 70% male (the engineering and technical workforce skews even more heavily male) and between 50% and 60% white. While we can’t say exactly how technologies carry those biases, the effects are nonetheless clear: The web is an often unfriendly and sometimes dangerous place for women.

Take #GamerGate, a coordinated attempt to harass women in the video gaming world. Or watch this YouTube video of horrified men reading real comments sent by male readers to female journalists. Much of this is attributable to user behavior or blind spots in how we build, but it’s equally true that a homogeneous tech workforce consistently fails to create systems that prevent such abuse.

Another clear illustration of this failure to foresee how our technology will play out came in March 2016, when Microsoft launched the artificial intelligence chatbot Tay. The chatbot was designed to be a literal child of the internet that could mimic and learn from online behavior. Tay, which was styled by its creators as an 18-year-old girl, quickly became racist, transphobic, and misogynistic, all because of the internet community it interacted with and learned from. The experiment ended within 24 hours, thanks in part to the social-media users who intentionally taught the bot to offend.

Did Melvin Conway predict this? Certainly not. Computing in the late 1960s was an elite tool, accessible to a small number of engineers and programmers. This is a radical extension of his law. Yet he considered his observation a sociological truth about systems design more broadly and welcomed new interpretations.

Savvy companies have understood Conway’s Law for years and structured their teams using the “reverse Conway” in order to find the right amounts of speed and flexibility. What we haven’t done is discuss it in terms of diversity.

We often think of the lack of diversity as something that prevents us from finding the best and most profitable ideas. In fact, the stakes are much higher. We’re encoding our biases, however subtly, in a universal technology. We can decry incidents like #GamerGate or the perversion of AI chatbots and ask why they keep happening. But, in coder speak, they’re a feature, not a bug. Our tech is just following the law.