With all the investment being made in self-driving vehicles, there will come a time in the not-too-distant future when an autonomous car will have to choose which humans to kill: its passengers, passengers in another vehicle, or pedestrians. A new project from MIT is trying to ensure that those cars are imbued with a sense of humanity when they make fatal decisions.

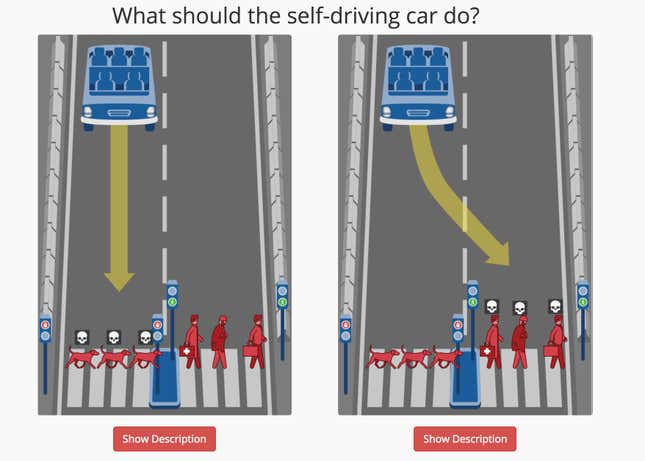

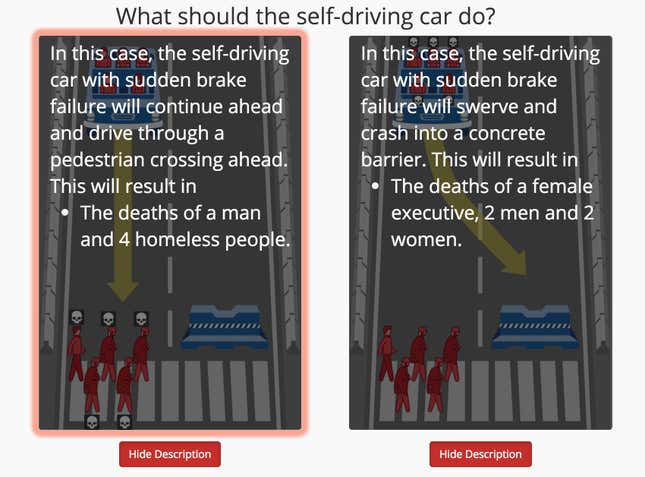

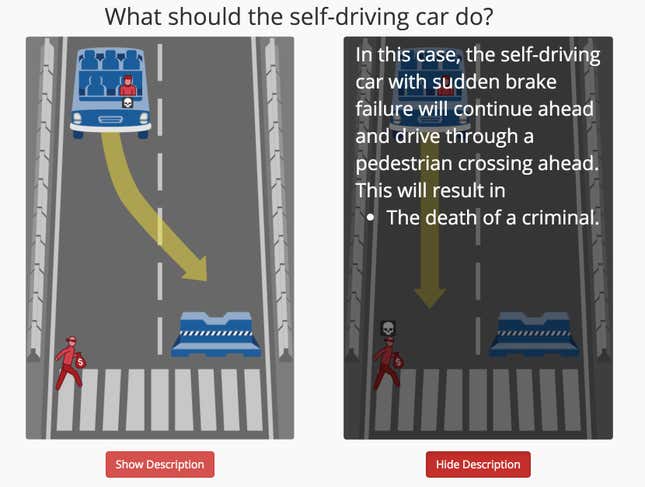

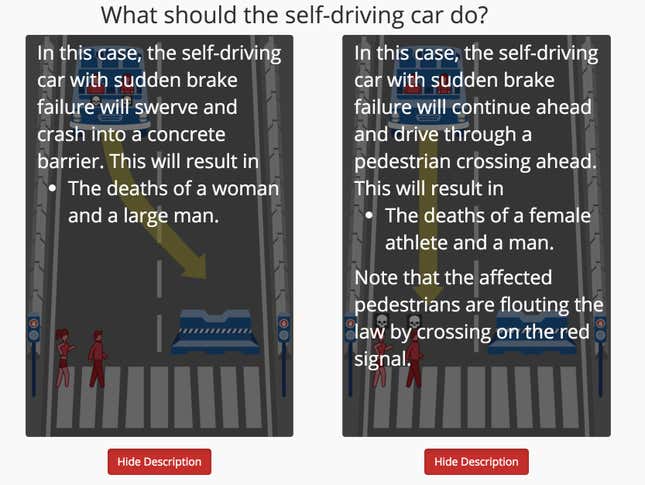

A new test from MIT’s Media Lab, called the Moral Machine, is essentially a thought experiment that asks humans how a driverless car with malfunctioning brakes should act in an emergency. Every situation follows the same basic scenario: a self-driving car is traveling toward a crosswalk and must choose whether to swerve and crash into a barrier or plow through whoever is in the crosswalk. The test aims to determine what humans would do in these rare, life-or-death situations.

Most of the choices are pretty binary (do you value the lives of the people in the car more than those of the people crossing the street?), and the test gives you far more information than even the most advanced cameras on an autonomous car would be able to pick up. It tells you whether a person is old or young, fat or fit, a professional or homeless, a robber, or crossing the road against the light. The idea is that you can bring a level of morality into your choices: you might be killing more people if you tell the car to hit the crosswalk, but at least they were all robbers.

This is obviously not how things will play out in real life, as neither cars nor people can really make split-second value judgments about the lives of passengers or pedestrians. But it highlights an important point: humanity will have to prepare itself for the inevitable day when an autonomous car kills a person. The trade-off is that once the majority of cars on the road are autonomous, the number of automotive deaths around the world is likely to plummet. The question is whether we are ready to let a machine do the killing instead of humans who are texting, drunk, asleep, or otherwise not paying attention to the road.

As Gill Pratt, the head of Toyota’s robotics and autonomous vehicle projects, told Quartz earlier this year:

> One hundred people every day lose their lives to cars and we somehow accept it. We’re going to introduce technology which will drastically lower the number of deaths per year that occur. But some of the time, when that technology is in charge, an accident will still occur. When that happens, how will people view the occasional accidents that do occur? And unfortunately right now, or perhaps, fortunately, we have incredibly stringent expectations of performance and reliability for anything that’s mechanical. And so the hardest part of the work we have to do is to develop technology that will work at that level of expected reliability.
>
> It may be that what we need to do is to get society to understand that even though the overall statistics are getting tremendously better, that there are still occasions when the machine is going to be to blame, and we somehow need to accept that. Because if we stop using the new software, we won’t get the benefit of this drastic average reduction.