Google's Gemini Live will now be able to access your camera and screen

Google is debuting the much-anticipated video capabilities for its AI chatbot later this month

Google (GOOGL) is debuting long-awaited video capabilities for the company’s AI chatbot Gemini Live later this month.

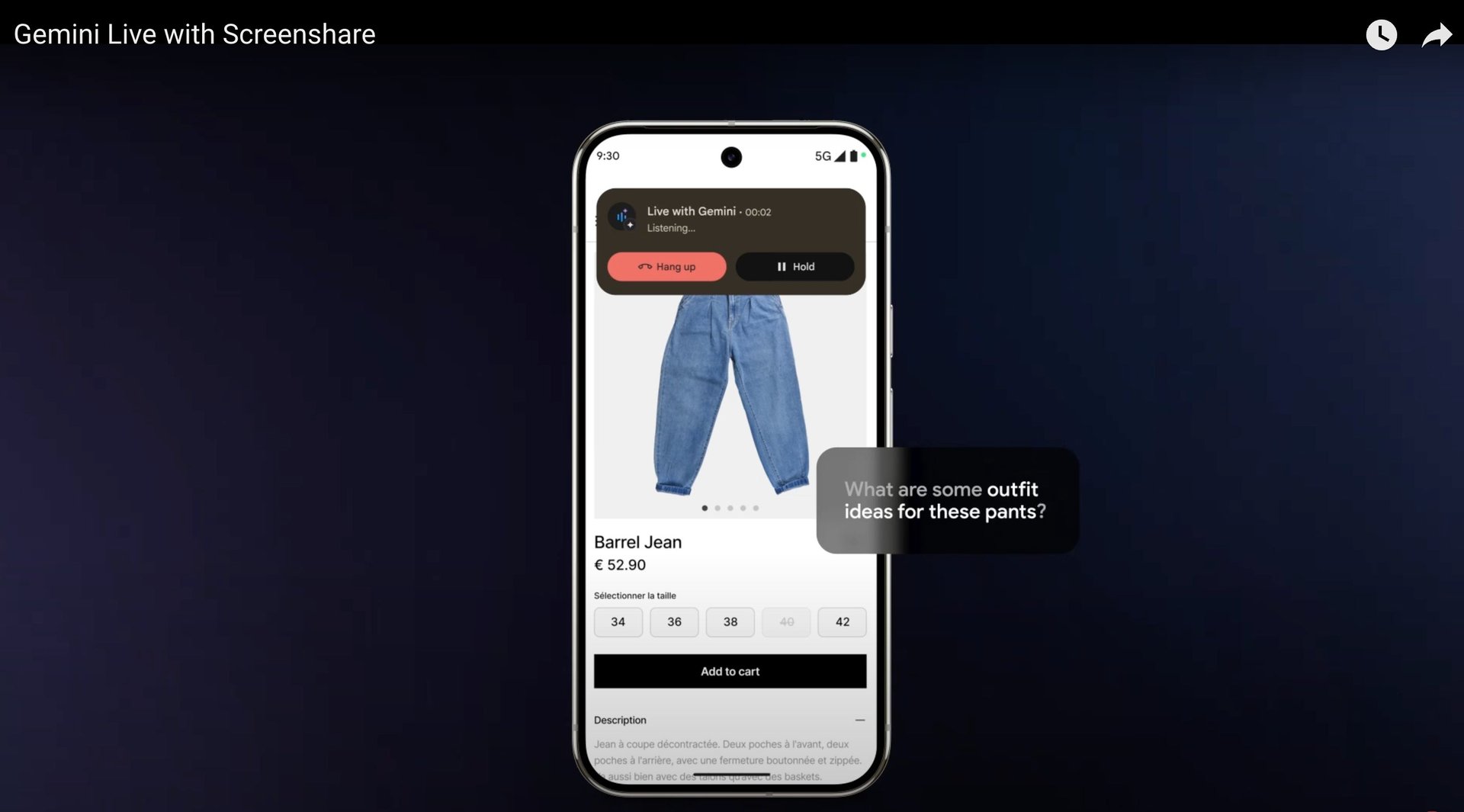

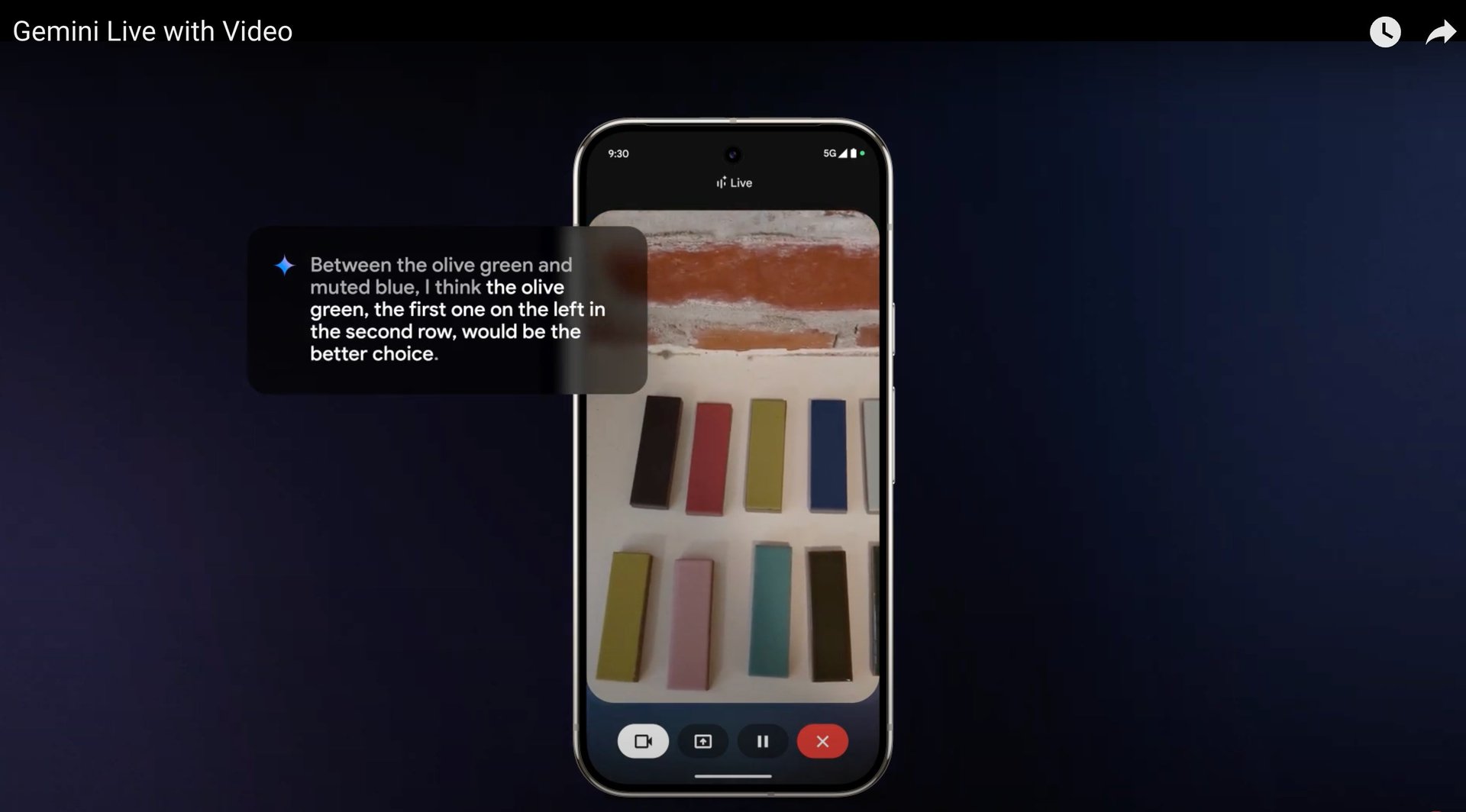

Beyond text and image input, select users will be able to use their Android device’s camera or share their screen with Gemini Live and ask the chatbot questions about what appears in the live video feed. In video demonstrations shared by the company, the updated Gemini Live gives style advice via screen share during online shopping and interior design advice as the camera moves around a room.

The new features will only be available for Gemini Advanced subscribers with the $20-a-month Google One AI Premium plan.

Google debuted the updates on Monday at the Mobile World Congress 2025 in Barcelona, Spain, but the tech giant has been teasing the new features for almost a year now.

Google first hinted at advanced video capabilities at its Google I/O conference in May 2024, where it demoed Project Astra, a research prototype of a multimodal AI assistant that could process video input to answer questions about the user’s surroundings, even reminding users where they last placed an object.

At the May 2024 conference, Google executives shared a vision for a universal AI assistant that also included the ability to see through smart glasses, although Monday’s announcement did not detail any additional product launches.

The unveiling of the latest capabilities comes on the heels of the December introduction of Gemini 2.0, Google’s most capable AI agent model, and a new Gemini feature called Deep Research, which can compile research reports on behalf of users.