I’ve found my way up a long, winding, alder- and sycamore-lined road to the top of a hill in California’s Portola Valley, but now I’m stumped. Before me are a couple of locked gates and behind them a massive complex that used to be a tank repair facility. It seems like an odd place to put a tank facility and an even odder place to put a startup. But supposedly this is where I will find Luminar, run by 22-year-old Austin Russell, who’s been operating in stealth mode for the last five years.

Russell’s technology could change how self-driving cars perceive the world and he’s promised me a tour and a demonstration, if I can figure out how to get beyond these massive, locked gates. I’m wondering if I’m in the right place. A black Dodge Challenger drives up; a man steps out and asks if he can help me. Now I’m certain this can’t be the office of an optics startup, but instead the headquarters for some international drug cartel that is about to disappear me for stumbling upon them.

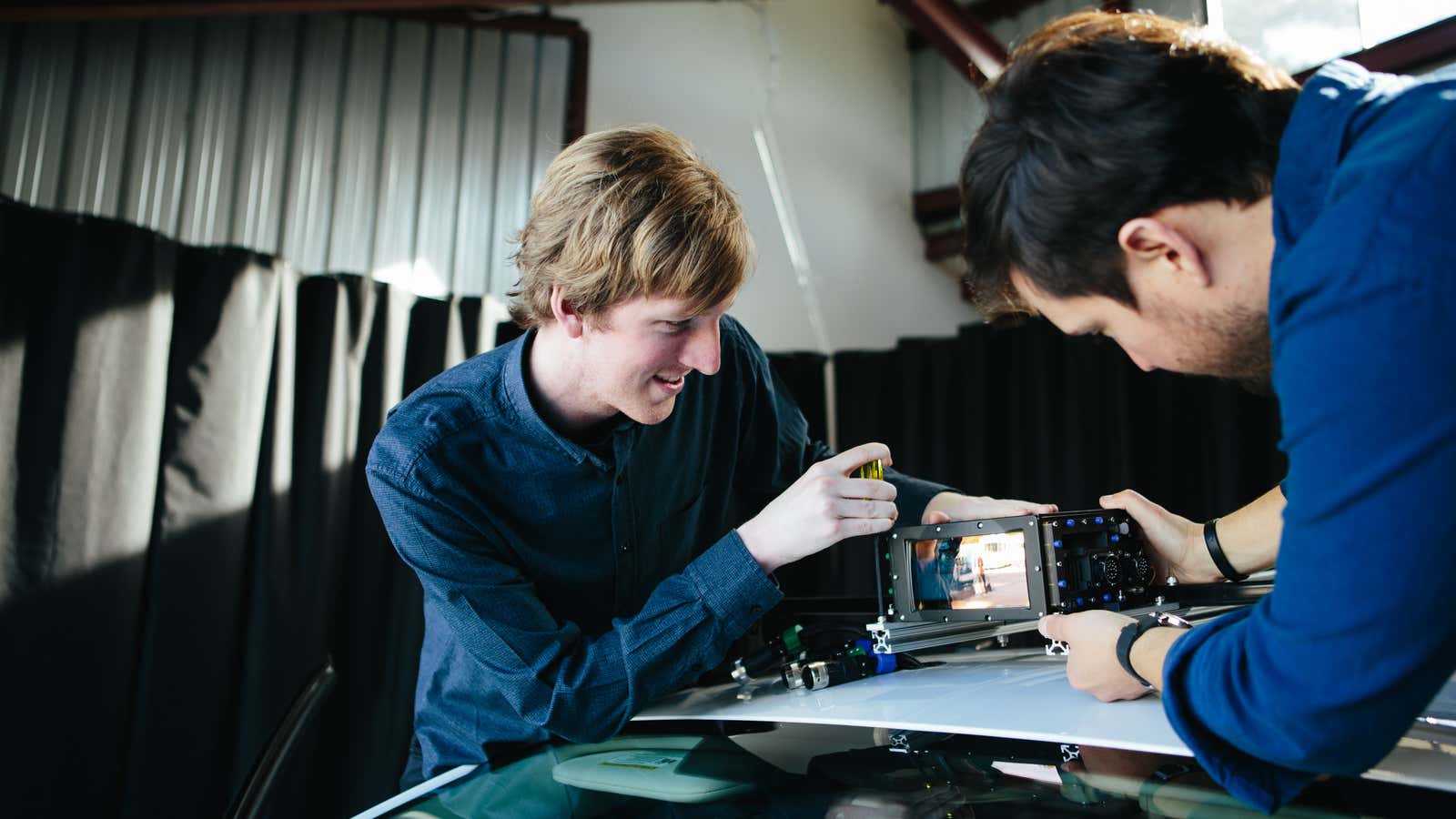

I say I’m looking for Luminar and the man smiles and says, “Oh yeah, it’s just up this hill!” He opens the gate and I drive up to a clearing. There’s a facility that looks like an auto-repair garage, a hill with something that looks like a target for blasting with a tank, and of course, an old tank. Towards me walks Russell. He is tall, with shaggy blond hair, a smile constantly slapped across his face. He looks far more like someone you’d find surfing his days away down the road in Santa Cruz than someone running a five-year-old company that hit everyone’s radar in April after getting $36 million in venture money from Canvas Ventures, GVA Capital and 1517 Fund, among others.

He invites me into the tank factory.

We are still in the infancy of the self-driving car revolution. Just about every car company and Silicon Valley giant has said that at some point soon, we will be able to press a button on our phones, or tell Siri, to call us a car, and within minutes, one will drive itself to our front door, and whisk us away to our destination without a single other human involved.

Right now, the technology that’s powering this research has been cobbled together from other industries, and it is expensive. The Lidar (short for “Light Detection and Ranging,” a laser rangefinding system) technology that most cars use to see the road was first used in the 1960s to measure clouds and the surface of the moon. It’s been used in surveying and mapping streets for Google Maps, which led to Google testing it in cars. No fully autonomous systems have yet proven themselves consistently in the vast majority of normal driving situations, and some semi-autonomous systems have been partly connected to deaths when they couldn’t fully understand what was ahead of them on the road.

The self-driving cars of tomorrow will need to be able to perceive the world with about as much information and as easily as we do or they’re not likely to revolutionize how we get around. When it comes to seeing the world, few things see as clearly as Russell’s machine.

I first met Russell when he came to visit New York in the spring. He started coding when he was about nine years old and became interested in optics and photonics and how they could be used by robots. Instead of finishing high school, he worked at the Beckman Laser Institute, an optics technology research facility at the University of California, Irvine. He won a $100,000 Thiel fellowship (the ones offered by PayPal founder, Facebook investor, and Trump acolyte Peter Thiel) and founded Luminar in 2012 to explore how to map the world in three dimensions. Russell had no driver’s license then. He now has a team of 200, with decades of experience in optics and laser radar systems, working for him at the former tank factory and at another facility in Orlando, Florida.

In New York, Russell showed me a box about the size of an original CD player, a marked departure from the giant, spinning buckets we’re accustomed to seeing on the top of every self-driving car being tested today. Those Lidar systems, built by companies like Velodyne, use multiple lasers to scan the horizon to determine how far away objects in front of them are. To get a complete sense of the world, these devices are constantly spinning, providing a decent 360-degree view of what surrounds the vehicle they’re perched on.

While demand for Lidar systems from Velodyne and others has skyrocketed as more companies develop autonomous machines, the underlying technology hasn’t advanced much since the earliest tests of self-driving cars over a decade ago. Those spinning buckets contain a set of 64 lasers and sensors that relay information from every surface. Each laser’s return is fed to a computer that processes the data and builds what looks like a wireframe map of the world, which the car uses to perceive what’s around it.

The buckets aren’t without problems. The 64 lasers are 64 potential points of failure, and the buckets are not only big, but very expensive. Velodyne’s top-of-the-line system costs around $85,000. Its smaller systems containing fewer lasers cost between $8,000 and $32,000, but they provide a far less complete picture of the world.

Russell’s CD-like box appears far simpler, which, of course, makes me wonder how, and if, it works. It has an all-glass front and contains a single laser that is refracted multiple times to provide the same basic mapping structure as a traditional Lidar system, but with fewer moving parts. While he won’t tell me its cost, Russell suggests that its simplicity should make it cheaper when Luminar starts producing at scale.

He says it’s built entirely of homemade, in-house parts, even down to the indium-gallium-arsenide chips it uses to power the sensors (nothing is off the shelf here), and claims it’s 40 times more powerful than his competitors’ systems, has 50 times more resolution, and can make out a black car or tire (or something equally non-reflective) on the road 200 meters (656 ft) away with ease. Traveling at 75 mph on the freeway, his system has enough resolution to produce a picture that would give a self-driving car a full seven seconds to respond to something it’s approaching.
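As a rough check on those numbers, here is a minimal Python sketch of the arithmetic. It assumes a constant speed and ignores braking distance and any processing delay; the function names are invented for illustration and nothing here comes from Luminar.

```python
# Back-of-the-envelope check: how long until a car reaches an object
# it has just detected? Assumes constant speed and no braking.

MPH_TO_MPS = 1609.344 / 3600.0  # one mile is exactly 1609.344 meters


def seconds_to_reach(distance_m: float, speed_mph: float) -> float:
    """Time in seconds to cover distance_m at a constant speed_mph."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return distance_m / (speed_mph * MPH_TO_MPS)


def distance_for_warning(seconds: float, speed_mph: float) -> float:
    """Detection distance in meters needed for a given warning time."""
    if seconds < 0 or speed_mph < 0:
        raise ValueError("inputs must be non-negative")
    return seconds * speed_mph * MPH_TO_MPS


if __name__ == "__main__":
    print(f"200 m at 75 mph: {seconds_to_reach(200, 75):.1f} s")
    print(f"7 s at 75 mph:   {distance_for_warning(7, 75):.0f} m")
```

Under those assumptions, 200 meters at 75 mph works out to about six seconds, and a full seven seconds would need roughly 235 meters. The seven-second figure presumably rests on a detection range somewhat beyond 200 meters, though that is an inference from the arithmetic rather than something Russell spelled out.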

To prove it, Russell offers a demo unlike those you usually see in the back of a self-driving Uber or onstage at a TED talk. “No one ever shows raw data,” he says. Most demos show a post-processed image, after the powerful computers in the back of a self-driving car have taken the raw information, run it against their training models and guessed at what they’re seeing. Russell shows me a video of the raw feed from a competitor’s machine, then compares it to what his box can do. The Luminar box shows an explosion of color, with the rainbow representing how close every object is to the sensor as the car it’s mounted on moves through the world.

It’s impressive and far more detailed. It looks like the Rainbow Road from Mario Kart. But it’s just a video on a screen. I tell him I’d like to see it in real life and he says I just have to swing by the compound next time I’m in California.

Two months later, I find myself in Portola Valley, a town along the mountain ridges of Silicon Valley that happens to be the perfect location for a company trying to reinvent how self-driving cars see the world. Its roads are squirrelly and steep, and the eight-lane Interstate 280 at the bottom of the hill is perfect for speed runs.

Russell quickly shows me around the facility’s main building, the garage worksite where a range of cars from Europe, Asia, and the US sit. You can hardly tell that they’ve been altered, but the white Tesla closest to me has a Luminar box inserted into its front grille where the license plate would normally be.

“Everyone’s trying to throw more and more software at what’s essentially a hardware problem,” Russell says. “You see some of these big companies hiring thousands of software engineers to try to make sense out of just a few points that are out there, and to do a lot of guessing.”

Up on the hill, I can see a large black target I thought was for tanks. Russell says it’s actually a very dark black facade that his team uses to calibrate how well Luminar’s sensors can detect objects that reflect next-to-no light.

He asks me if I want to go for a ride.

We climb into the white Tesla. I sit in the back seat, as the passenger seat has been removed to make room for a massive flat screen that shows the output of Luminar’s Lidar. As the car idles, I can see the world on the screen pretty much as it usually appears, except in a zany technicolor palette. I can see leaves on trees; I can see the bushes behind trees that I can’t see with my own eyes; I can see the black of the impenetrable target. This is what the car sees.

We pull away, and drive down the hills. Luminar’s system shows the tops of trees well below us, and curves in the road before we approach them—it’s literally seeing around corners. Russell wants to show me how far the system can see while traveling at speed down the freeway, but as we pull on, we’re stuck in stopped traffic. Instead, he shows me how the system can change its vantage point: He moves it up and down, giving us a bird’s eye view of the traffic far off in the distance, and then pulls in close to see the details on the cars right in front of us. It’s like controlling the camera angles in a videogame.

Luminar’s system emits laser light at a different wavelength than most of its competitors (1550 nanometers, compared with 905 nanometers), and using proprietary technology developed by Russell and his team, it can emit 68 photons for every one that its competitors emit. That essentially equates to far more information coming back into the sensors from the light it sends out, leading to a far more detailed picture. And because the light is being emitted at multiple angles, it can create views that make it seem like the car is viewing the world from hundreds of feet above.

We get back on the freeway in the other direction, and Russell shows me what the system can see traveling at speed. It’s just as detailed as what the human eye could perceive at the same speeds. It might not be as beautiful (the real-world mountains of the Santa Clara Valley are a sight to behold on a clear day), but the rainbow vision of Luminar’s system seems to give a self-driving car system information just as useful as what my own eyes would provide.

Luminar announced in April, along with its funding, that 100 of its boxes were being tested in the wild, and that it would eventually start manufacturing 10,000 units later this year at its Orlando facility. The company has been working privately with four partners, but on Sept. 27, it announced that Toyota Research Institute (TRI), the division of the Japanese automaker that deals with robots and self-driving cars and is run by the former head of the DARPA Robotics Challenge, will be publicly testing Luminar’s technology in its new autonomous vehicles. It’s a bold move for one of the world’s largest carmakers to be using technology from such a young and untested company, but perhaps a testament to what Russell and his team have built.

“The level of data fidelity and range is unlike anything we’ve seen and is essential to be able to develop and deliver the most advanced automated driving systems,” James Kuffner, TRI’s chief technology officer, said in a statement.

Luminar’s system isn’t the only one being developed to get away from those big spinning barrels atop most self-driving cars in testing. Lvl5 wants to use systems trained on data from the cameras of our smartphones. Quanergy and LeddarTech have raised millions of dollars for similar “solid state” Lidar systems (ones with fewer moving parts than the traditional spinning systems), but they’ve yet to show they can see objects at the distance, or the level of clarity, that Luminar has.

“Their tech is amazing,” Oliver Cameron, the founder and CEO of the self-driving car startup Voyage, told Quartz. “I got their demo one year ago, and it was mind-blowing.” Russell does express a level of clarity both in his product and vision that’s hard to ignore.

“We see ourselves as a similar type of a model to ‘Intel Inside,’ powering fully autonomous cars,” Russell says, adding that the company wants to work with every automaker in much the same way that companies like Bosch, Delphi, or Bridgestone have parts in many different companies’ vehicles.

As I drive out of Luminar’s complex atop that hard-to-find hill, I get lost.

There’s no cell signal and I forget which steep road I was supposed to go down. I get trapped in some weird one-way system of roads that should be far too steep for families to be building houses on. I don’t feel comfortable behind the wheel. I can’t see beyond the giant trees and fear that I’m going to disintegrate my rental car’s brakes, or hit another car coming around a blind corner, and knock both of us off the mountain cliff. I think about the system I just saw that could see the roads like it had known them forever and turns before they were made. I think about a time when our cars know more than we do.

I think about getting off this hill in one piece, in peace.