Does anyone not like Biden's new guidelines on AI?

Most Americans support the initiative, according to a survey by the AI Policy Institute

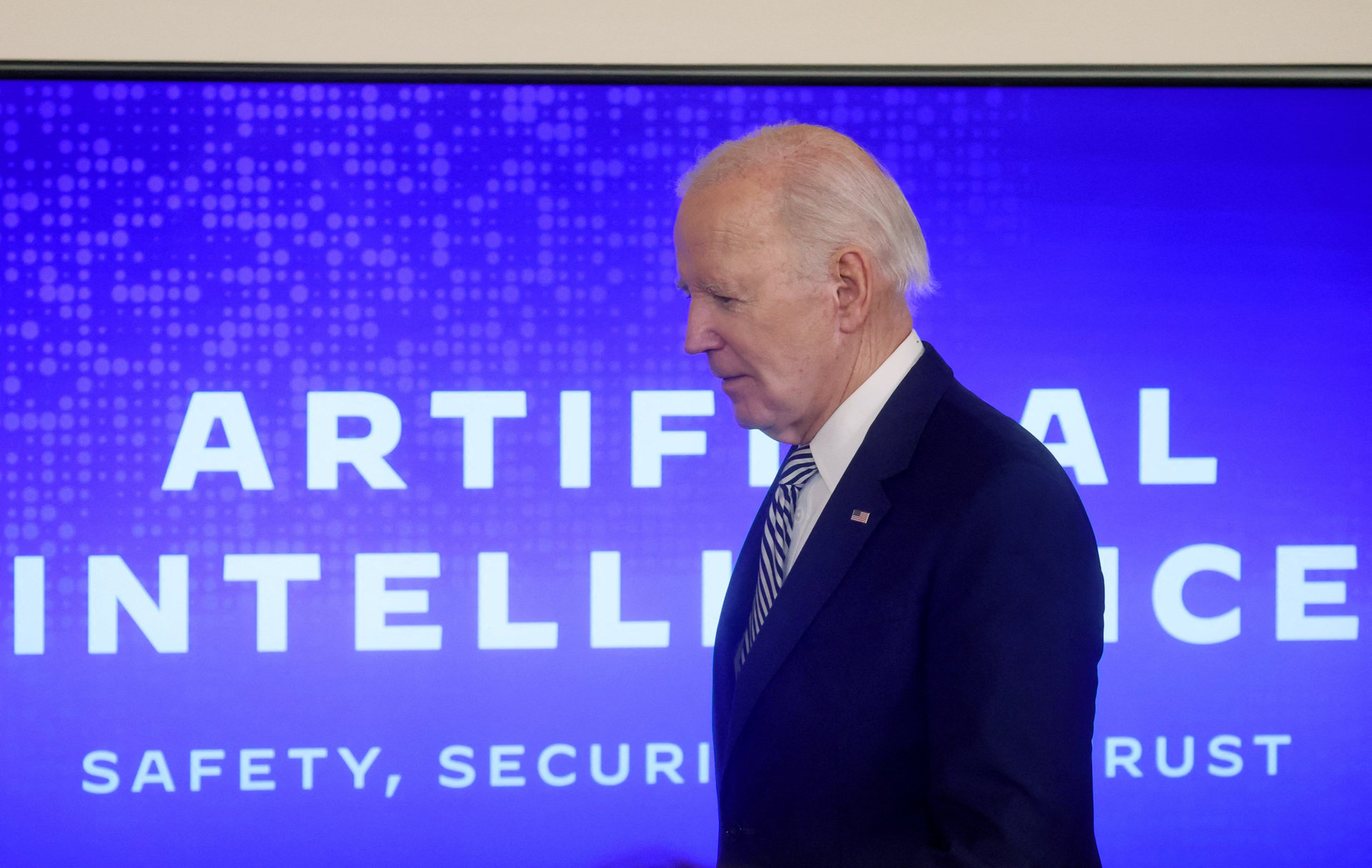

US president Joe Biden’s sweeping executive order to set guidelines for artificial intelligence has been widely applauded by the industry, and 68% of Americans approve of the initiative, according to the AI Policy Institute’s latest survey.

The order directs several government agencies to establish rules and guidelines, report on their progress, and create funding for AI research. It also pushes tech companies to test and evaluate their AI systems and report their findings to the government before making them public, to mitigate AI bias, and to watermark AI-generated content.

“Seeing such a heavy emphasis on testing and evaluating AI systems seems good—you can’t manage what you can’t measure,” posted Jack Clark, co-founder of Anthropic, the company behind the chatbot Claude, which is available through Amazon Bedrock, on X.

“Scale is pleased to see that they recognize the importance of rigorous test and evaluation prior to AI deployment,” said a spokesperson for Scale AI, a provider of datasets used to train AI.

One aim of the executive order is to promote competition while the US plays regulatory catch-up with other nations, after years of Congressional hearings in which Big Tech CEOs called for government regulation of the industry.

Biden signed the order on Monday (Oct. 30), just ahead of vice president Kamala Harris’ planned attendance at UK prime minister Rishi Sunak’s AI summit in London next week.

Is it possible to regulate AI?

Regulating AI is like “nailing jell-o on the wall,” wrote W. Russell Neuman, a professor of media technology at New York University, in a Boston Globe op-ed last month. “AI is the application of a set of mathematical algorithms, and you can’t regulate math,” Neuman added.

However, he’s pleased with this order. “I was delighted that they didn’t do wrong; their approach is good,” Neuman told Quartz.

He said some members of Congress have an impulse to directly regulate and license AI, limiting innovation and creativity, but the Biden order avoided that, restricting the scope to foundational large language models (LLMs).

LLMs are AI algorithms trained on massive datasets to summarize, translate, predict words based on context, and generate text. The big four LLM creators are OpenAI (with Microsoft), Meta, Alphabet (Google), and Anthropic, now heavily backed by Amazon.

Singling out foundational LLMs is significant because the onus would be on those companies to test and evaluate their models, along with “red-teaming,” or hacking them, to identify issues they would then share with the US government.

That’s a good start, but experts say the fact that AI evolves so rapidly makes it tough to regulate.

“While the US government can potentially regulate some aspects of AI, such as how these platforms are developed, what purposes they are developed for, and the intentional use of training data, we will still face many grave challenges,” said Chris Pierson, CEO of BlackCloak, a digital protection company. Pierson is also a former member of the US Department of Homeland Security’s privacy and cybersecurity subcommittee.

Pierson is particularly worried that malicious actors and foreign adversaries could steal the technology, or remove safeguards and use it for criminal purposes.

“The AI platforms themselves could also self-evolve past certain restrictions unless they are carefully monitored and controlled,” he added.

Doubts over AI talent and copyright

Biden’s order encourages the government to hire AI talent and to train staff at all levels and in all areas, a move praised by a former US president.

“[W]e need to convince more talented people to work in government, not just the private sector,” wrote Barack Obama on his blog.

However, luring talent away from industry will be challenging, given the high salaries that workers in the AI field command. The median US compensation for AI-focused software engineers is $247,200, according to career consulting firm Levels.fyi. Earlier this year, Netflix offered a salary of $900,000 for an AI product manager.

“Another important factor here is the culture of government, which is vastly different from the private sector—particularly when we’re talking about high-tech, innovative, fast-moving fields like AI,” said Karim Hijazi, CEO of Vigilocity, a cybersecurity firm. Hijazi is also a former contractor for the US intelligence community.

While some passionate professionals might “forego short-term financial benefits,” Hijazi said, the government’s naturally conservative approach isn’t “motivating sophisticated talent.”

The executive order also seeks to prevent worker displacement arising from AI, but it falls short on copyright of intellectual property used for training generative AI models, one of actors’ union SAG-AFTRA’s sticking points in its ongoing strike negotiations.

Still, the union posted its support for the order on X. “For strong, safe & responsible AI development & use, it is imperative that workers & unions remain at the forefront of policy development,” it said. “We look forward to working together for a human centered approach.”

What’s next for AI regulation?

With the majority of Americans and the tech industry supportive of AI regulation, the next year will reveal whether Biden can succeed in establishing meaningful oversight.

“My meta-takeaway from the full text of the Executive Order on AI is that in the next 6-12 months there will be an unfathomable number of guidelines, rulemaking processes, reports, whitepapers, working groups, public comments, panels, and whatnot,” Arvind Narayanan, director of Princeton University’s Center for Information Technology Policy, posted on X.

As for NYU’s Neuman, he hopes the initiative will help drive momentum to pass a current Congressional bill that would provide $1 billion in funding a year for AI research and the establishment of a National AI Research Resource (NAIRR).

Enacting and adopting a concrete framework for regulating AI is still a long way off.

“This EO [executive order] is the beginning of a conversation only,” BlackCloak’s Pierson said. “The degree to which we are successful in tackling AI’s benefits and mitigating potential pitfalls is dependent on starting these conversations now, within a common rubric.”